When Exadata X8M was released during the last Open World, I wrote a post about its technical details. You can check it here: Exadata X8M (that post received some good shares and reviews). If you read it, you will see that I focused on the more internal details (torn blocks, one-sided paths, two-sided reads/writes, and others), which differ from the usual analyses of Exadata X8M.

But recently Oracle invited me to participate in an exclusive workshop about Exadata X8M, and I wanted to share some details that caught my attention. The workshop was run directly from the Oracle Solution Center at the Santa Clara campus (an amazing place with a rich history that I had the opportunity to visit in 2015; if you get the chance, visit it), and it covered some technical details along with a hands-on part.

Unfortunately, I can’t share everything (I don’t even know if I can share anything), but see the info below.

Exadata X8M

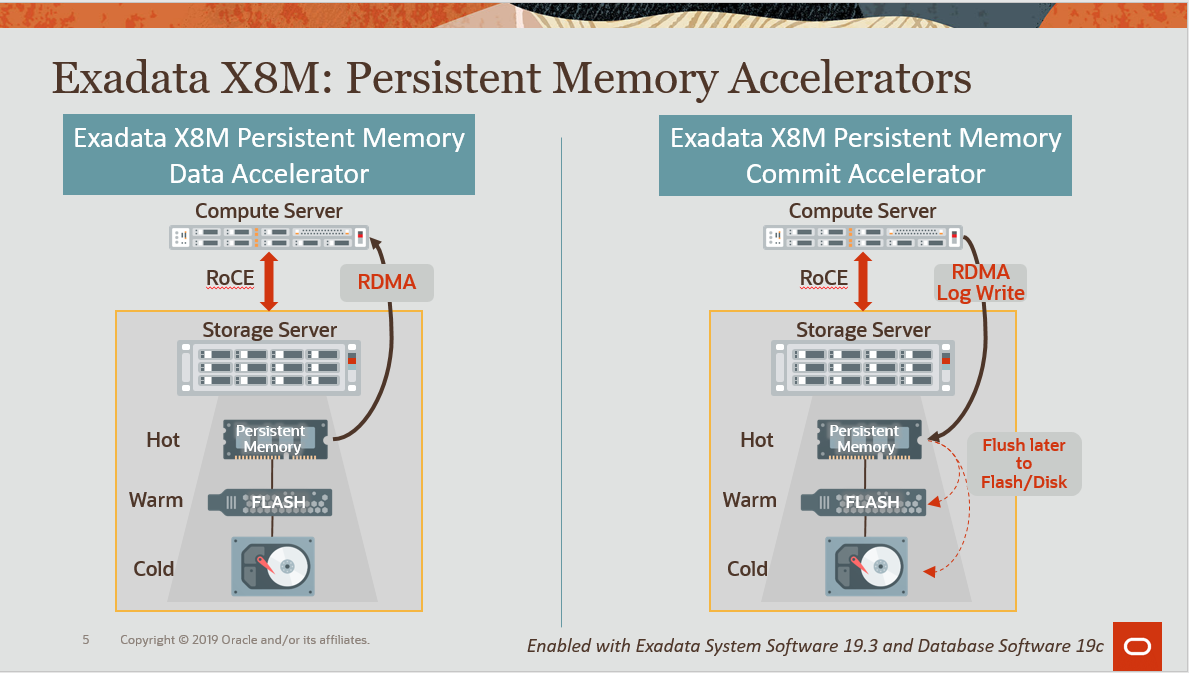

A little briefing first. If you don’t know, the big change in Exadata X8M was the addition of persistent memory, accessible through RDMA, directly in the storage servers. Basically, Oracle added Intel Optane memory (which is nonvolatile, by the way) in front of everything, including the flashcache. Exadata calls it PMEM (persistent memory).

You can see more details about Exadata in “Exadata Technical Deep Dive: Architecture and Internals”, available on the Oracle website.

And just to add, the database server can access it directly, without passing through operating system caches in the middle, since the RDMA traffic uses ZDP (Zero-loss Zero-Copy Datagram Protocol). The data goes directly from database server memory to storage server memory. And when you consider that it now runs over RoCE (a 100 Gb/s network), it is fast.

Workshop

As mentioned, the workshop was more than a simple marketing presentation and had a hands-on part. The focus was to show the PMEM gains over a “traditional” Exadata: gains for log writes (PMEMLog) and gains for cached reads (PMEMCache).

The test executed some loads (to test the log writes) and reads (to test the cache) for a defined period. Two runs were made, one with the features disabled and the other with them enabled, so that the results could be compared afterwards.

Just to be clear, the focus of the tests was not to stress the machine and push it to its limits. The focus was to see the difference between executions with and without PMEMCache and PMEMLog enabled. So, the numbers that you see here are below the limits of the Exadata.

Environment

Just to be more precise, the workshop ran on an Exadata X8M Extreme Flash (EF) edition. This means that the storage was flash drives, so here we are comparing flash against memory. You will see the numbers below, but when you read them remember that this is flash, and that the baseline would be even slower in a “normal” environment with disks instead of flash. Check the datasheet for more info.

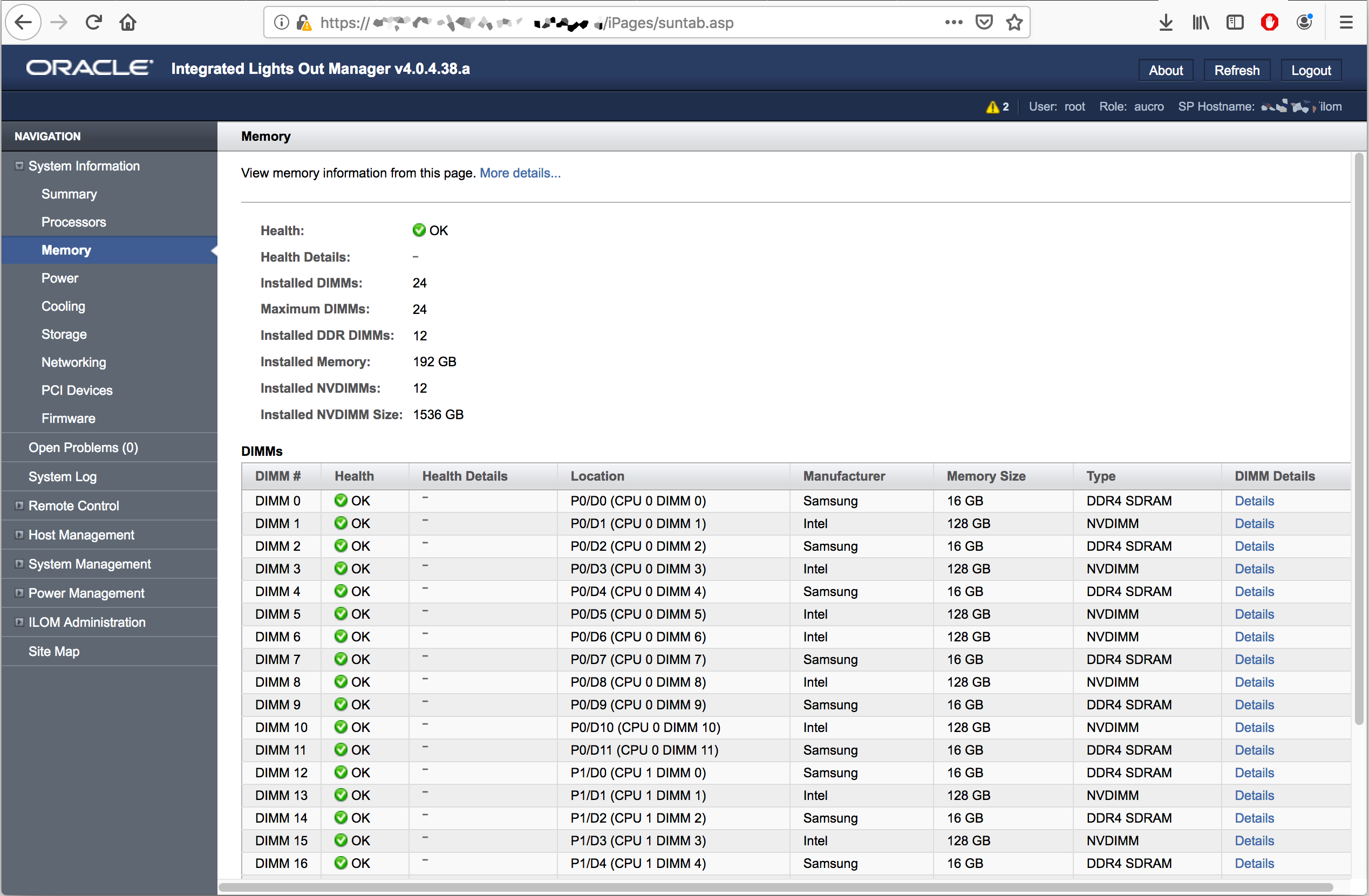

For PMEM, the Exadata had 1.5TB of Intel Optane memory in each storage server, as twelve modules.

PMEMLog

PMEMLog works in the same way as flashlog: it speeds up the redo writes that come from LGWR. For the workshop, one run was first executed without PMEMLog, and the numbers were:

- Executions per second: 26566

- Log file sync average time: 310.00 μs

- Log file parallel write average time: 129.00 μs

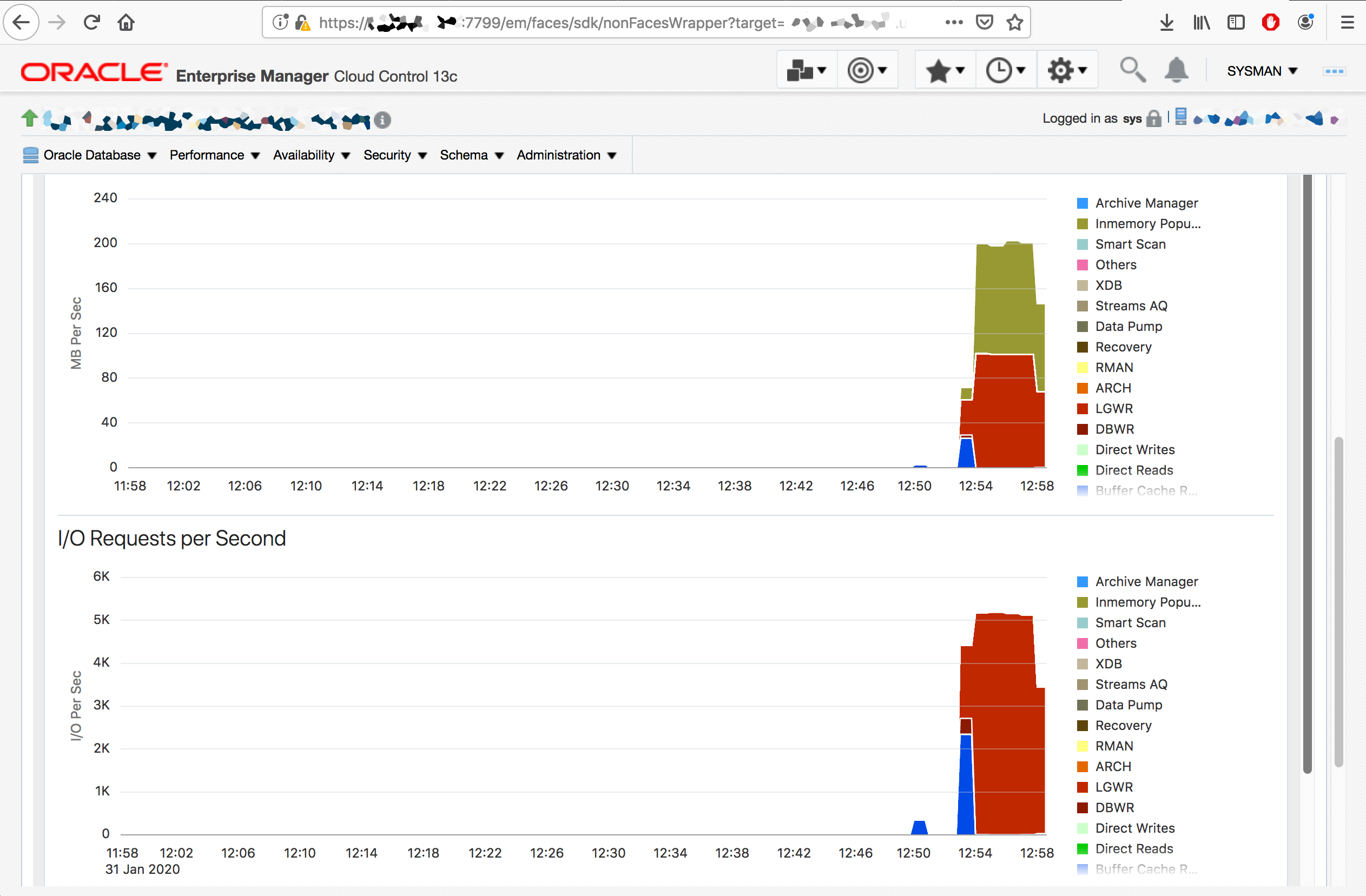

At CloudControl (CC):

And the same run was made with PMEMLog enabled. The results were:

- Executions per second: 73915

- Log file sync average time: 56.00 μs

- Log file parallel write average time: 14.00 μs

And for CC:

As you can see, the difference was:

- About 2.8x more executions (73915 against 26566).

- About 5.5x faster log file sync (56 μs against 310 μs).

- About 9.2x faster log file parallel write (14 μs against 129 μs).
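
The ratios can be recomputed directly from the two runs. This is a minimal Python sketch that uses only the figures quoted above; the dictionary keys and helper names are my own labels for illustration, not AWR or Exadata names.

```python
# Figures quoted above for the two PMEMLog runs (wait times in microseconds).
without_pmemlog = {
    "exec_per_s": 26566,
    "log_file_sync_us": 310.0,
    "log_file_parallel_write_us": 129.0,
}
with_pmemlog = {
    "exec_per_s": 73915,
    "log_file_sync_us": 56.0,
    "log_file_parallel_write_us": 14.0,
}


def rate_gain(before, after):
    """Gain for a 'higher is better' metric, such as executions per second."""
    return after / before


def latency_speedup(before, after):
    """Speedup for a 'lower is better' metric, such as a wait time."""
    return before / after


exec_gain = rate_gain(without_pmemlog["exec_per_s"], with_pmemlog["exec_per_s"])
sync_speedup = latency_speedup(
    without_pmemlog["log_file_sync_us"], with_pmemlog["log_file_sync_us"]
)
write_speedup = latency_speedup(
    without_pmemlog["log_file_parallel_write_us"],
    with_pmemlog["log_file_parallel_write_us"],
)
# Average time saved on each log file sync wait.
sync_saved_us = without_pmemlog["log_file_sync_us"] - with_pmemlog["log_file_sync_us"]

print(f"executions per second: {exec_gain:.1f}x")
print(f"log file sync: {sync_speedup:.1f}x faster ({sync_saved_us:.0f} us saved)")
print(f"log file parallel write: {write_speedup:.1f}x faster")
```

Rates and wait times are divided in opposite directions on purpose, so that every factor reads as "bigger is better".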

As you can see, good numbers and good savings. Note that the actual performance measured may vary based on your workload. As expected, the use of PMEM sped up the redo log writes for the same workload (the database was restarted between the runs).

PMEMCache

PMEMCache works as a cache in front of the flashcache for the Exadata software, in the same way as flashcache but using just the PMEM modules. There is not much to explain, since everything is controlled by the Exadata software (including which blocks go into PMEMCache), but the results are pretty amazing.

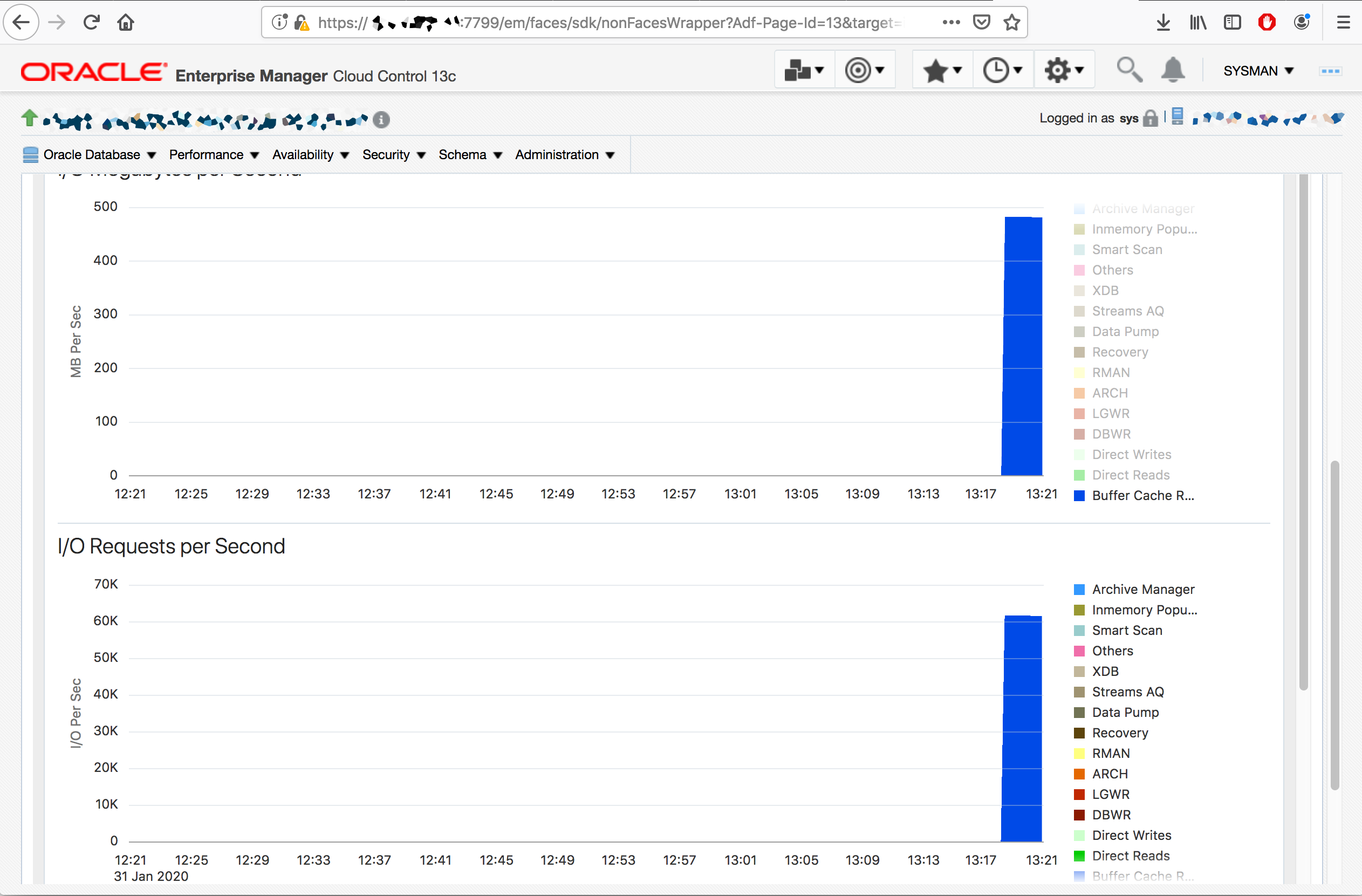

During the workshop, one run was executed without PMEMCache enabled. The result was:

- Number of read IOPS: 125688

- Average single block read latency: 233.00 μs

- Average MB/s: 500MB/s

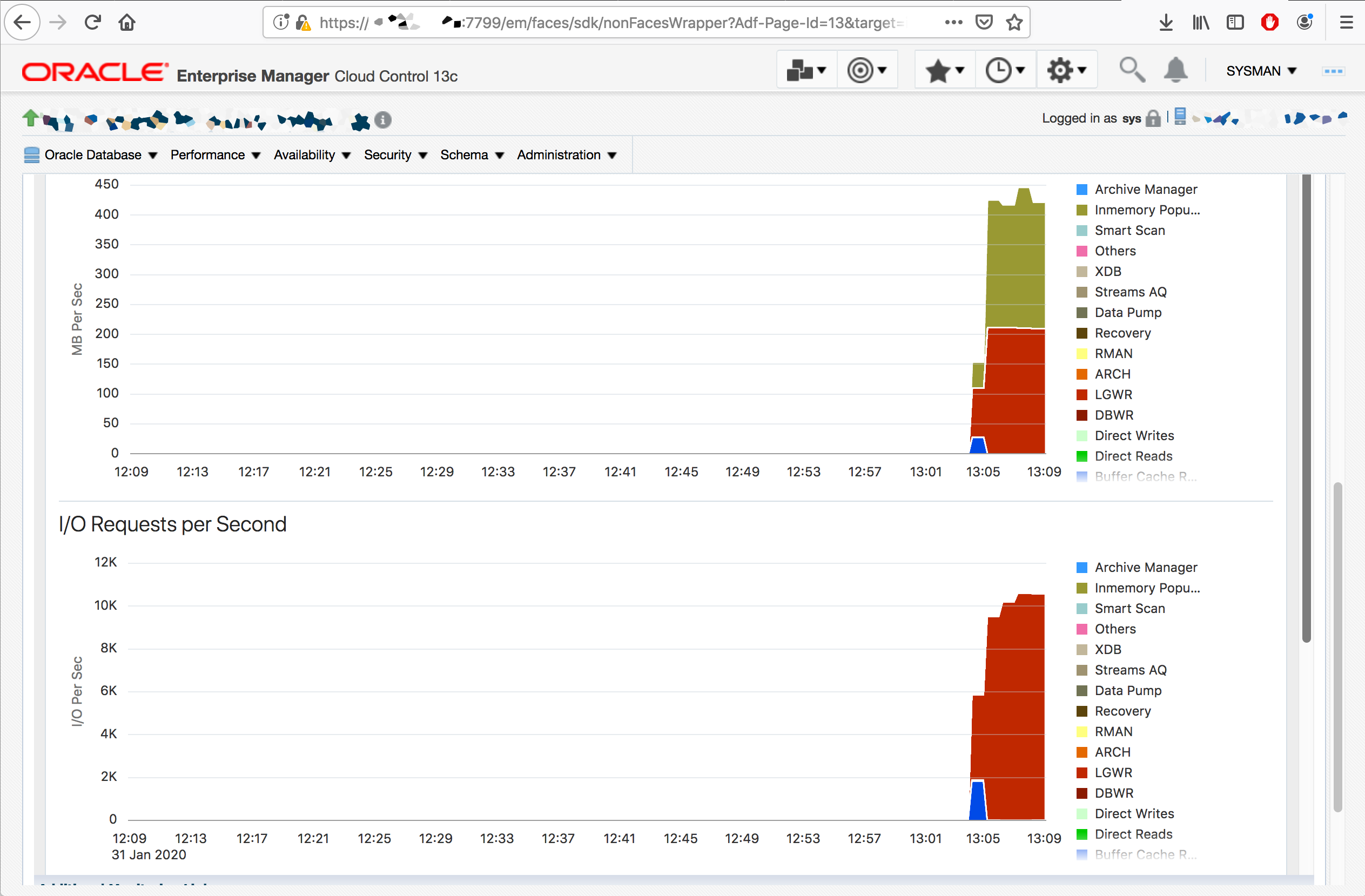

And from the CC view:

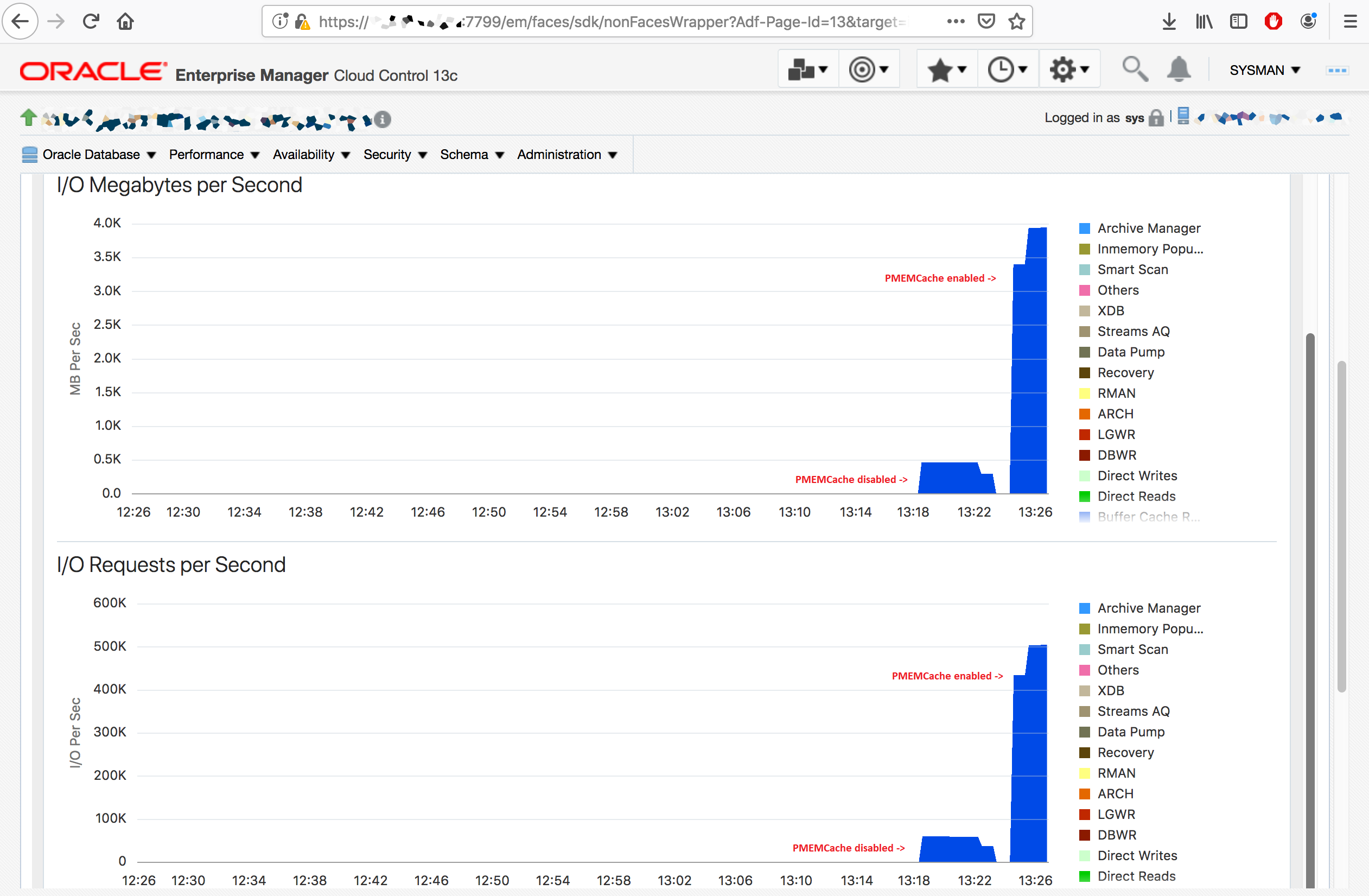

And afterwards, the run with PMEMCache enabled:

- Number of read IOPS: 1020731

- Average single block read latency: 16.00 μs

- Average MB/s: 4000 MB/s (4GB/s)

For CC you can see how huge the difference is:

The test was the same over the database; both runs were identical. And if you compare the results, with PMEMCache enabled there was:

- 8x more IOPS.

- 14x lower latency.

- 8x more throughput in MB/s.

And again, remember that the Exadata was an EF edition. So, we are comparing flash against memory. Think how huge the difference would be if we compared “normal” hard disks against memory.
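
The same comparison for the PMEMCache runs can be checked with a few lines of Python. This is only a sketch over the figures quoted above; it assumes the IOPS values are whole numbers (125688 and 1020731), and the metric labels and the `improvement` helper are mine.

```python
# (metric, without PMEMCache, with PMEMCache, higher_is_better)
runs = [
    ("read IOPS", 125688, 1020731, True),
    ("single block read latency (us)", 233.0, 16.0, False),
    ("throughput (MB/s)", 500.0, 4000.0, True),
]


def improvement(before, after, higher_is_better):
    """Return the improvement factor, oriented so that larger is better."""
    return after / before if higher_is_better else before / after


factors = {name: improvement(b, a, hib) for name, b, a, hib in runs}

for name, factor in factors.items():
    print(f"{name}: {factor:.1f}x")
```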

Under the Hood

But under the hood, how does the PMEM appear? It is simple (and tricky at the same time). The modules appear as normal celldisks for the cell (yes, DIMM memory as celldisks):

CellCLI> list celldisk

FD_00_exa8cel01 normal

FD_01_exa8cel01 normal

FD_02_exa8cel01 normal

FD_03_exa8cel01 normal

FD_04_exa8cel01 normal

FD_05_exa8cel01 normal

FD_06_exa8cel01 normal

FD_07_exa8cel01 normal

PM_00_exa8cel01 normal

PM_01_exa8cel01 normal

PM_02_exa8cel01 normal

PM_03_exa8cel01 normal

PM_04_exa8cel01 normal

PM_05_exa8cel01 normal

PM_06_exa8cel01 normal

PM_07_exa8cel01 normal

PM_08_exa8cel01 normal

PM_09_exa8cel01 normal

PM_10_exa8cel01 normal

PM_11_exa8cel01 normal

CellCLI>
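
To summarize a listing like this one without counting by eye, a small Python sketch can group the celldisks by name prefix. It assumes, based only on the names above, that `FD_` marks flash celldisks and `PM_` marks PMEM celldisks; the sample text is rebuilt from the listing, and `summarize_celldisks` is a hypothetical helper, not part of any Oracle tool.

```python
from collections import Counter

# Rebuild the listing shown above: 8 FD and 12 PM celldisks, all "normal".
listing = "\n".join(
    [f"FD_{i:02d}_exa8cel01 normal" for i in range(8)]
    + [f"PM_{i:02d}_exa8cel01 normal" for i in range(12)]
)


def summarize_celldisks(text):
    """Count celldisks per name prefix and collect any that are not 'normal'."""
    counts = Counter()
    not_normal = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue  # skip blank lines, the command echo, and the prompt
        name, status = fields
        counts[name.split("_", 1)[0]] += 1
        if status != "normal":
            not_normal.append(name)
    return counts, not_normal


counts, not_normal = summarize_celldisks(listing)
print(dict(counts))
print("celldisks not in normal status:", not_normal)
```

The twelve `PM` entries match the twelve Optane modules in each storage server.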

And the PMEMLog appears in the same way as flashlog:

CellCLI> list pmemlog detail

name: exa8cel01_PMEMLOG

cellDisk: PM_11_exa8cel01,PM_06_exa8cel01,PM_01_exa8cel01,PM_10_exa8cel01,PM_03_exa8cel01,PM_04_exa8cel01,PM_07_exa8cel01,PM_08_exa8cel01,PM_09_exa8cel01,PM_05_exa8cel01,PM_00_exa8cel01,PM_02_exa8cel01

creationTime: 2020-01-31T21:01:06-08:00

degradedCelldisks:

effectiveSize: 960M

efficiency: 100.0

id: 9ba61418-e8cc-43c6-ba55-c74e4b5bdec8

size: 960M

status: normal

CellCLI>

And PMEMCache too:

CellCLI> list pmemcache detail

name: exa8cel01_PMEMCACHE

cellDisk: PM_04_exa8cel01,PM_01_exa8cel01,PM_09_exa8cel01,PM_03_exa8cel01,PM_06_exa8cel01,PM_08_exa8cel01,PM_05_exa8cel01,PM_10_exa8cel01,PM_11_exa8cel01,PM_00_exa8cel01,PM_02_exa8cel01,PM_07_exa8cel01

creationTime: 2020-01-31T21:23:09-08:00

degradedCelldisks:

effectiveCacheSize: 1.474365234375T

id: d3c71ce8-9e4e-4777-9015-771a4e1a2376

size: 1.474365234375T

status: normal

CellCLI>
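
As a quick sanity check on these two outputs, the reported sizes can be divided by the number of celldisks. The Python sketch below assumes that the `M` and `T` suffixes are binary units and that the space is spread evenly over the celldisks; neither is confirmed by the output itself. The helper names are mine, and the celldisk list is rebuilt in sorted order rather than in the order shown above.

```python
import re

# Assumption: CellCLI size suffixes are binary multiples.
UNITS = {"M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_detail(output):
    """Turn 'key: value' lines into a dict, splitting on the first colon only."""
    attrs = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            attrs[key.strip()] = value.strip()
    return attrs


def size_in_bytes(text):
    """Convert a size such as '960M' or '1.474365234375T' to bytes."""
    match = re.fullmatch(r"([0-9.]+)([MGT])", text)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def bytes_per_celldisk(attrs):
    """Reported size divided evenly across the listed celldisks."""
    disks = [d for d in attrs["cellDisk"].split(",") if d]
    return size_in_bytes(attrs["size"]) / len(disks)


disks = ",".join(f"PM_{i:02d}_exa8cel01" for i in range(12))
pmemlog = parse_detail(f"name: exa8cel01_PMEMLOG\ncellDisk: {disks}\nsize: 960M")
pmemcache = parse_detail(
    f"name: exa8cel01_PMEMCACHE\ncellDisk: {disks}\nsize: 1.474365234375T"
)

log_mib = bytes_per_celldisk(pmemlog) / UNITS["M"]
cache_gib = bytes_per_celldisk(pmemcache) / UNITS["G"]
print(f"PMEMLog per celldisk: {log_mib:.0f} MiB")
print(f"PMEMCache per celldisk: {cache_gib:.4f} GiB")
```

Under those assumptions, each PMEM celldisk contributes a small slice to PMEMLog and nearly all of its remaining capacity to PMEMCache.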

So, the administration on the cell side will be easy. We (as DMAs) don’t need to worry about special administration details. It was not the point of the workshop, but I think that when a DIMM needs to be replaced it will not be hot-swappable and, after the change, some “warm-up” process will need to occur to reload the cache.

Conclusion

For me, the workshop was a really nice surprise. I have worked with Exadata since 2010, starting with Exadata V2 and passing through X2, X4, X5 EF, and X7, and I have seen a lot of new features over these 10 years, but the addition of PMEM was a good one. I was not expecting much difference in the numbers, but it was possible to see the real difference that PMEM can deliver. The notable point was the latency reduction.

Think about an environment that needs to be fast: these μs make the difference. Think of a database that uses Data Guard with synchronous redo transport and real-time apply, where the primary waits for the standby to acknowledge the redo before releasing the commit. The μs that you gain are deducted from the total time.

Of course, maybe not everyone needs the gains from PMEM, but for those that need them, it really makes the difference.

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”