As you know, 23ai was released first for Cloud and Engineered Systems (Exadata and ExaCC), and I already explored it in previous posts. Now that patches have started to be released, the latest being 23.6, we can re-test the Zero-Downtime Oracle Grid Infrastructure Patching (ZDOGIP) feature. The steps here are not specific to the Exadata version and can be used for any 23ai version.

I already demonstrated how to use it for 21c (in both graphical and silent mode), and the same can be done for 19c as well.

But now I will show how to do it for 23ai. This post includes:

- Install the Grid Infrastructure 23.6.0.24.10, using the Gold Image

- Upgrade the GI from 23.5.0.24.07 to 23.6.0.24.10 using the Zero-Downtime Oracle Grid Infrastructure Patching

This will be done while the databases are running, to show that we can patch the GI without downtime.

Current Environment

The running system is:

- OEL 8.9 Kernel 5.4.17-2136.324.5.3.el8uek.x86_64.

- Oracle GI 23ai, version 23.5.0.24.07 with no one-off patches installed.

- Oracle Database 23ai (23.5.0.24.07) and 19c (19.23.0.0.0).

- Nodes are not using Transparent HugePages.

- It is a RAC installation, with two nodes.

You can see this information in detail here in this file. Since I am also running Oracle 19c, the compatibility for the ASM diskgroups was set to 19.0.0.0.0.

Gold Image

One detail for this patch is that I am using the gold image provided for 23ai. Starting with 23ai, Oracle provides (for some HW/OS combinations) a version called Gold Image. It is meant to simplify the installation and patching process because the image is already packed, which results in a small size and a fast installation.

One detail about the 23ai patches: the Engineered Systems version started with 23.4.0.24.05 and was followed by release 23.5.0.24.07. Unfortunately, it was not possible to use the ZDOGIP process to go from 23.4 to 23.5 because 23.5 was considered a new release, and when I tried to apply the patch I received an error message telling me that the current patch level was the same. And if we compare 23.4 and 23.5, CRS reports the same patch level for both:

[grid@o23c1n1s1 ~]$ /u01/app/23.4.0.0/grid/bin/crsctl query crs activeversion -f

Oracle Clusterware active version on the cluster is [23.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [0].

[grid@o23c1n1s1 ~]$

[grid@o23c1n1s1 ~]$ /u01/app/23.5.0.0/grid/bin/crsctl query crs activeversion -f

Oracle Clusterware active version on the cluster is [23.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [0].

[grid@o23c1n1s1 ~]$
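If you want to compare the patch level of two homes without reading the whole message, a small filter helps. This is only a sketch: the function names `active_patch_level` and `patch_levels_differ` are mine (hypothetical), and it assumes the message keeps the "active patch level is [...]" wording shown above.

```shell
# Hypothetical helper: reads the output of
# `crsctl query crs activeversion -f` on stdin and prints only the value
# found inside "active patch level is [...]".
active_patch_level() {
  sed -n 's/.*active patch level is \[\([^]]*\)].*/\1/p'
}

# Hypothetical helper: succeeds (exit code 0) only when the two patch
# levels passed as arguments are different.
patch_levels_differ() {
  [ "$1" != "$2" ]
}
```

For example, piping the crsctl output of each home into `active_patch_level` and passing both results to `patch_levels_differ` would fail for the 23.4 and 23.5 homes above, since both report 0.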

But using the provided gold image, we can use the ZDOGIP.

ACFS and AFD drivers

As you know, some installations use the ASM Filter and ACFS drivers compiled and attached as modules directly to the Linux Kernel. So, there is a well-known compatibility matrix for these drivers and the Linux Kernel that you can see at MOS (link ACFS Support On OS Platforms (Certification Matrix – Doc ID 1369107.1)).

This verification is important because most GI patches (99% of the time) include upgrades for the OS drivers. So, you need to validate that your current kernel is compatible with the new version of the drivers.

Besides that, it is important that BEFORE starting the patch you execute the commands to check the current version of these drivers (execute on both nodes):

######################################

#

#Checking Node 01

#

######################################

[grid@o23c1n1s2 ~]$ acfsdriverstate version

ACFS-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

ACFS-9326: Driver build number = 240702.1.

ACFS-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

ACFS-9547: Driver available build number = 240702.1.

ACFS-9232: Driver available build version = 23.0.0.0.0 (23.5.0.24.07).

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$ afddriverstate version

AFD-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

AFD-9326: Driver build number = 240702.1.

AFD-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

AFD-9547: Driver available build number = 240702.1.

AFD-9232: Driver available build version = 23.0.0.0.0 (23.5.0.24.07).

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$

######################################

#

#Checking Node 02

#

######################################

[grid@o23c1n2s2 ~]$ acfsdriverstate version

ACFS-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

ACFS-9326: Driver build number = 240702.1.

ACFS-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

ACFS-9547: Driver available build number = 240702.1.

ACFS-9232: Driver available build version = 23.0.0.0.0 (23.5.0.24.07).

[grid@o23c1n2s2 ~]$

[grid@o23c1n2s2 ~]$ afddriverstate version

AFD-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

AFD-9326: Driver build number = 240702.1.

AFD-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

AFD-9547: Driver available build number = 240702.1.

AFD-9232: Driver available build version = 23.0.0.0.0 (23.5.0.24.07).

[grid@o23c1n2s2 ~]$
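To avoid comparing these numbers by eye on every node, the check can be scripted. The sketch below is an assumption on my side, not an Oracle tool: `driver_build_status` is a hypothetical name, and it relies on my reading of the output, where "Driver build number" is what is installed and "Driver available build number" is what the GI home ships.

```shell
# Hypothetical helper: reads `acfsdriverstate version` or
# `afddriverstate version` output on stdin.
# Prints "same" when the driver build number equals the available build
# number, "different" when they do not match, and "unknown" when one of
# the two lines is missing from the input.
driver_build_status() {
  awk -F' = ' '
    /Driver build number/           { sub(/\.$/, "", $2); loaded = $2 }
    /Driver available build number/ { sub(/\.$/, "", $2); avail  = $2 }
    END {
      if (loaded == "" || avail == "")      print "unknown"
      else if ((loaded "") == (avail ""))   print "same"
      else                                  print "different"
    }'
}
```

Running `acfsdriverstate version | driver_build_status` before the patch gives a baseline; a "different" result later would indicate that the home ships drivers newer than the ones in use.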

And do the same with CRS to check which drivers are currently active:

######################################

#

#Checking Node 01

#

######################################

[grid@o23c1n1s2 ~]$ crsctl query driver activeversion -all

Node Name : o23c1n1s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n1s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

[grid@o23c1n1s2 ~]$ crsctl query driver softwareversion -all

Node Name : o23c1n1s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n1s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

[grid@o23c1n1s2 ~]$

A little detail about ZDOGIP: if you need to upgrade the OS drivers, unfortunately, you will have downtime because the process stops all databases. But there is a way to do ZDOGIP without updating the ACFS and AFD drivers, and this is what I will use later.

Preparing for Patching

The files that we need are simple:

- Grid Infrastructure Gold Image, patch number is 37037934.

- OPatch, always the latest version available. Download here in this link.

Creating the folders for the files

The patch will use the ZDOGIP process. So, it is out-of-place patching, with a switch of the current Oracle Home folder for the GI. After downloading the patches and uploading them to the first node of your RAC cluster, we need to create the new folders on all nodes:

######################################

#

#Checking Node 01

#

######################################

[root@o23c1n1s2 ~]# mkdir -p /u01/app/23.6.0.0/grid

[root@o23c1n1s2 ~]# chown grid:oinstall /u01/app/23.6.0.0/grid

[root@o23c1n1s2 ~]#

######################################

#

#Checking Node 02

#

######################################

[root@o23c1n2s2 ~]# mkdir -p /u01/app/23.6.0.0/grid

[root@o23c1n2s2 ~]# chown grid:oinstall /u01/app/23.6.0.0/grid

[root@o23c1n2s2 ~]#
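With more than two nodes, typing the same two commands everywhere gets tedious. As a sketch only, the function below prints (it does not execute) one ssh command per node. The name `print_prepare_home_cmds` is hypothetical, and direct root ssh access between the nodes is an assumption that may not hold in your environment.

```shell
# Hypothetical helper: prints, without running them, the commands that
# create the new GI home folder and set its ownership on each node.
# Usage: print_prepare_home_cmds <new_home> <node> [<node> ...]
# Assumption: root can ssh to every node listed.
print_prepare_home_cmds() (
  new_home="$1"
  shift
  for node in "$@"; do
    printf 'ssh root@%s "mkdir -p %s && chown grid:oinstall %s"\n' \
      "$node" "$new_home" "$new_home"
  done
)
```

Calling `print_prepare_home_cmds /u01/app/23.6.0.0/grid o23c1n1s2 o23c1n2s2` lists the commands for my two nodes, so they can be reviewed before being run.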

Unzipping the patches

The next step is executed only in the first node. We just need to unzip the GI gold image and the OPatch as the grid user:

[root@o23c1n1s2 ~]# su - grid

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$ cd /u01/app/23.6.0.0/grid/

[grid@o23c1n1s2 grid]$

[grid@o23c1n1s2 grid]$ unzip -q /u01/install/Grid/p37037934_230000_Linux-x86-64.zip

[grid@o23c1n1s2 grid]$

[grid@o23c1n1s2 grid]$

[grid@o23c1n1s2 grid]$ unzip -q /u01/install/p6880880_230000_Linux-x86-64.zip

replace OPatch/opatchauto? [y]es, [n]o, [A]ll, [N]one, [r]ename: A

[grid@o23c1n1s2 grid]$

Running systems

For now in my example scenario, what is running in the system is:

- 23.5 GI installed and running at /u01/app/23.5.0.0

- Oracle RAC Database 23.5 called o23ne

- Oracle RAC Database 19.23 called o19c

You can see this below (on both nodes):

######################################

#

#Checking Node 01

#

######################################

[root@o23c1n1s2 ~]# ps -ef |grep smon

root 5770 1 0 15:14 ? 00:00:53 /u01/app/23.5.0.0/grid/bin/osysmond.bin

grid 6480 1 0 15:15 ? 00:00:00 asm_smon_+ASM1

oracle 7170 1 0 15:15 ? 00:00:00 ora_smon_o23ne1

oracle 7514 1 0 15:15 ? 00:00:00 ora_smon_o19c1

root 66017 3896 0 16:46 pts/0 00:00:00 grep --color=auto smon

[root@o23c1n1s2 ~]#

[root@o23c1n1s2 ~]# ps -ef |grep lsnr

root 5872 5810 0 15:14 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/crfelsnr -n o23c1n1s2

grid 6209 1 0 15:15 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 6259 1 0 15:15 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

grid 9078 1 0 15:16 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN1 -no_crs_notify -inherit

grid 9084 1 0 15:16 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

root 66025 3896 0 16:46 pts/0 00:00:00 grep --color=auto lsnr

[root@o23c1n1s2 ~]#

######################################

#

#Checking Node 02

#

######################################

[root@o23c1n2s2 ~]#

[root@o23c1n2s2 ~]# ps -ef |grep smon

root 4795 1 0 15:19 ? 00:00:48 /u01/app/23.5.0.0/grid/bin/osysmond.bin

grid 5727 1 0 15:19 ? 00:00:00 asm_smon_+ASM2

oracle 6300 1 0 15:20 ? 00:00:00 ora_smon_o23ne2

oracle 6679 1 0 15:20 ? 00:00:00 ora_smon_o19c2

root 57432 9393 0 16:46 pts/0 00:00:00 grep --color=auto smon

[root@o23c1n2s2 ~]#

[root@o23c1n2s2 ~]# ps -ef |grep lsnr

root 4896 4838 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/crfelsnr -n o23c1n2s2

grid 5298 1 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 5329 1 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

grid 5350 1 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

root 57436 9393 0 16:46 pts/0 00:00:00 grep --color=auto lsnr

[root@o23c1n2s2 ~]#

[root@o23c1n2s2 ~]#

And for the 19c database, we have one PDB called PDB19C1:

[oracle@o23c1n1s2 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Sun Oct 20 16:51:48 2024

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB19C1 READ WRITE NO

SQL> show parameter list

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

forward_listener string

listener_networks string

local_listener string (ADDRESS=(PROTOCOL=TCP)(HOST=

o23c1n1s2-vip.oralocal)(PORT=1

521))

remote_listener string o23c1s2-scan:1521

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

[oracle@o23c1n1s2 ~]$

Testing the ZDOGIP

As you know, I like to test in detail what I post here. So, I created a simple table (T1) in PDB19C1 and made two loops that continuously insert into it. In case of downtime or a shutdown of the database, we will notice the error.

The first one uses a PL/SQL block connected from node 01. It represents a connected session (one that never disconnects) in your database:

[oracle@o23c1n1s2 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Sun Oct 20 16:52:34 2024

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> alter session set container = PDB19C1;

Session altered.

SQL> SET SERVEROUTPUT ON

SQL> DECLARE

2 lDatMax DATE := (sysdate + 240/1440);

3 BEGIN

4 WHILE (sysdate <= (lDatMax)) LOOP

5 insert into simon.t1(c1, c2, c3) values (SYS_CONTEXT ('USERENV', 'INSTANCE'), 'Loop - Sqlplus', sysdate);

6 commit;

7 dbms_session.sleep(0.5);

8 END LOOP;

9 END;

10 /

The second uses EZCONNECT from a third machine that connects to the database through the SCAN. So, sometimes the connection goes to instance 1 and other times to instance 2:

[oracle@o8rpn1-19c ~]$ date

Sun Oct 20 16:51:03 CEST 2024

[oracle@o8rpn1-19c ~]$ for i in {1..1000000}

> do

> echo "Insert Data $i "`date +%d-%m-%Y-%H%M%S`

> sqlplus -s simon/simon23ai@o23c1s2-scan.oralocal/PDB19C1<<EOF

> set heading on feedback on;

> insert into t1(c1, c2, c3) values (SYS_CONTEXT ('USERENV', 'INSTANCE'), 'Loop - EZconnect', sysdate);

> commit;

> EOF

> done

Insert Data 1 20-10-2024-165359

1 row created.

Commit complete.

Insert Data 2 20-10-2024-165359

1 row created.

…
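Besides watching for errors, another way to spot a pause is to look for gaps between consecutive inserts. The sketch below assumes that the insert timestamps (column C3) were exported as epoch seconds, one per line and in ascending order. The export step is not shown in this post, and the function name `max_insert_gap` is hypothetical.

```shell
# Hypothetical helper: reads one epoch-seconds timestamp per line on
# stdin (ascending order) and prints the largest gap, in seconds,
# between two consecutive rows. Prints 0 for empty or single-row input.
max_insert_gap() {
  awk 'NR > 1 { gap = $1 - prev; if (gap > max) max = gap }
       { prev = $1 }
       END { print max + 0 }'
}
```

With loops that insert at least once per second, a result of a few seconds would already point to a moment where sessions were stalled, even if no error was raised.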

ZDOGIP using GOLD IMAGE

Unlike ZDOGIP for 21c and 19c, where we call the installation and the patch at the same time, for 23ai using the gold image we need two steps. This is needed because the gold image already provides a complete and patched 23.6 image that can be installed directly.

Step 01 – Installing the software

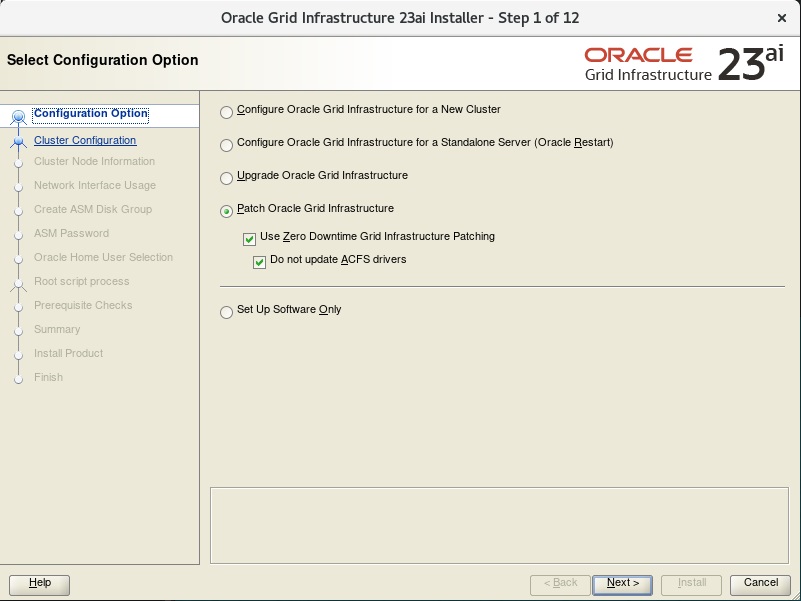

So, the first step is to call the gridSetup.sh script and select the option to install only the CRS software:

[grid@o23c1n1s2 ~]$ export ORACLE_HOME=/u01/app/23.6.0.0/grid

[grid@o23c1n1s2 ~]$ /u01/app/23.6.0.0/grid/gridSetup.sh

ERROR: Unable to verify the graphical display setup. This application requires X display. Make sure that xdpyinfo exist under PATH variable.

Launching Oracle Grid Infrastructure Setup Wizard...

The response file for this session can be found at:

/u01/app/23.6.0.0/grid/install/response/grid_2024-10-20_05-00-04PM.rsp

You can find the log of this install session at:

/u01/app/oraInventory/logs/GridSetupActions2024-10-20_05-00-04PM/gridSetupActions2024-10-20_05-00-04PM.log

[grid@o23c1n1s2 ~]$

The gallery below shows all the steps that you need to do using the GUI. It is a simple next, next, finish process.

At the end, you need to run root.sh on both nodes:

######################################

#

# Node 01

#

######################################

[root@o23c1n1s2 ~]# /u01/app/23.6.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/23.6.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

To configure Grid Infrastructure for a Cluster execute the following command as grid user:

/u01/app/23.6.0.0/grid/gridSetup.sh

This command launches the Grid Infrastructure Setup Wizard. The wizard also supports silent operation, and the parameters can be passed through the response file that is available in the installation media.

[root@o23c1n1s2 ~]#

######################################

#

# Node 02

#

######################################

[root@o23c1n2s2 ~]# /u01/app/23.6.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/23.6.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

To configure Grid Infrastructure for a Cluster execute the following command as grid user:

/u01/app/23.6.0.0/grid/gridSetup.sh

This command launches the Grid Infrastructure Setup Wizard. The wizard also supports silent operation, and the parameters can be passed through the response file that is available in the installation media.

[root@o23c1n2s2 ~]#

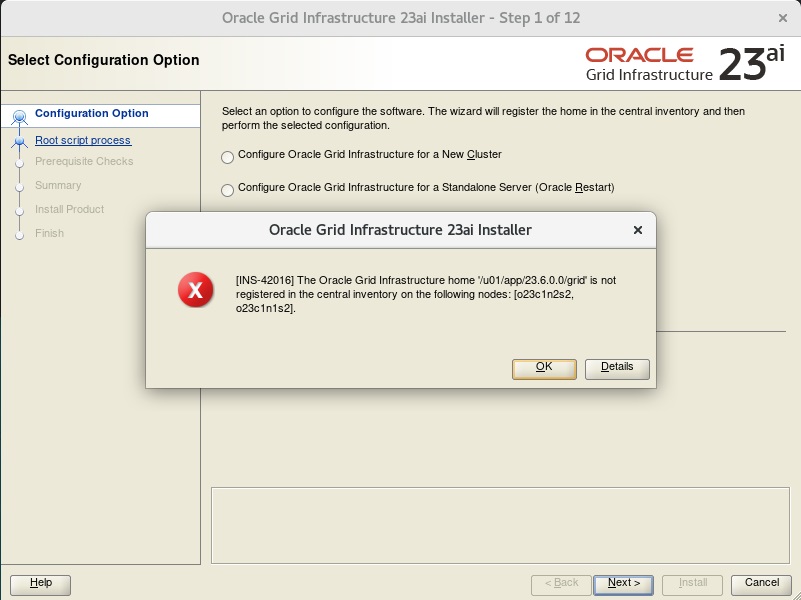

An interesting detail here is why we can’t call the installation and the switch at the same time. This occurs because the unzipped gold image does not appear (in the oraInventory) as installed. It is not present on both nodes and is not registered in the inventory. And even if you try to call everything together, you will receive an error saying that your software is not installed.

Looking at the running inserts, we can see that everything was OK while the installation was happening:

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

4846 1 Loop - EZconnect 20/10/2024 17:11:15 20/10/2024 16:53:59

677 2 Loop - EZconnect 20/10/2024 17:11:12 20/10/2024 16:53:59

2078 1 Loop - Sqlplus 20/10/2024 17:11:15 20/10/2024 16:53:53

SQL>

Step 02 – Doing the Home Switch

After the success of step 01, we can move on to step 02. Here we will switch between the homes for the GI, and we will do this online. We will use the following parameters when calling gridSetup.sh:

- switchGridHome: This will tell the installer to switch between the old and new GI home.

- zeroDowntimeGIPatching: This will tell the installer to do the switch online, without stopping any database that is running on the nodes.

- skipDriverUpdate: This will not update the kernel drivers for the ACFS and AFD.

So, we just call this (as grid user) in the first node of the cluster:

[grid@o23c1n1s2 ~]$ /u01/app/23.6.0.0/grid/gridSetup.sh -switchGridHome -zeroDowntimeGIPatching -skipDriverUpdate

ERROR: Unable to verify the graphical display setup. This application requires X display. Make sure that xdpyinfo exist under PATH variable.

Launching Oracle Grid Infrastructure Setup Wizard...

[grid@o23c1n1s2 ~]$
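If you keep this call in a runbook, it can be handy to build the command line from the new home path instead of typing it each time. This is just a sketch: `build_switch_cmd` is a hypothetical name, it only prints the command, and the three flags are exactly the ones used above.

```shell
# Hypothetical helper: prints the gridSetup.sh command line used for the
# home switch. It does not run anything.
# $1 = path of the new GI home
# $2 = "yes" (default) to add -skipDriverUpdate and keep the current
#      ACFS/AFD drivers, any other value to leave the flag out
build_switch_cmd() (
  new_home="$1"
  skip_drivers="${2:-yes}"
  cmd="$new_home/gridSetup.sh -switchGridHome -zeroDowntimeGIPatching"
  if [ "$skip_drivers" = "yes" ]; then
    cmd="$cmd -skipDriverUpdate"
  fi
  printf '%s\n' "$cmd"
)
```

Printing first and executing later makes it harder to forget -skipDriverUpdate, which is the flag that avoids the driver update and the downtime that comes with it.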

The gallery below shows the GUI steps that are needed:

Near the end of the GUI installation, you need to run the root.sh script on all nodes. This is where the switch officially occurs, and it is done online. So, running root.sh on the first node as the root user:

[root@o23c1n1s2 ~]# /u01/app/23.6.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/23.6.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

RAC option enabled on: Linux

Executing command '/u01/app/23.6.0.0/grid/perl/bin/perl -I/u01/app/23.6.0.0/grid/perl/lib -I/u01/app/23.6.0.0/grid/crs/install /u01/app/23.6.0.0/grid/crs/install/rootcrs.pl -dstcrshome /u01/app/23.6.0.0/grid -transparent -nodriverupdate -prepatch'

Using configuration parameter file: /u01/app/23.6.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/o23c1n1s2/crsconfig/crs_prepatch_apply_oop_o23c1n1s2_2024-10-20_05-36-08PM.log

Performing following verification checks ...

cluster upgrade state ...PASSED

OLR Integrity ...PASSED

Hosts File ...PASSED

Free Space: o23c1n1s2:/ ...PASSED

Software home: /u01/app/23.5.0.0/grid ...PASSED

Pre-check for Patch Application was successful.

CVU operation performed: stage -pre patch

Date: Oct 20, 2024, 5:36:38 PM

CVU version: 23.5.0.24.7 (070324x8664)

Clusterware version: 23.0.0.0.0

CVU home: /u01/app/23.5.0.0/grid

Grid home: /u01/app/23.5.0.0/grid

User: grid

Operating system: Linux5.4.17-2136.324.5.3.el8uek.x86_64

2024/10/20 17:37:03 CLSRSC-671: Pre-patch steps for patching GI home successfully completed.

Executing command '/u01/app/23.6.0.0/grid/perl/bin/perl -I/u01/app/23.6.0.0/grid/perl/lib -I/u01/app/23.6.0.0/grid/crs/install /u01/app/23.6.0.0/grid/crs/install/rootcrs.pl -dstcrshome /u01/app/23.6.0.0/grid -transparent -nodriverupdate -postpatch'

Using configuration parameter file: /u01/app/23.6.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/o23c1n1s2/crsconfig/crs_postpatch_apply_oop_o23c1n1s2_2024-10-20_05-37-03PM.log

2024/10/20 17:37:57 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd_dummy.service'

2024/10/20 17:38:51 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

2024/10/20 17:40:04 CLSRSC-4015: Performing install or upgrade action for Oracle Autonomous Health Framework (AHF).

2024/10/20 17:40:04 CLSRSC-4012: Shutting down Oracle Autonomous Health Framework (AHF).

2024/10/20 17:41:27 CLSRSC-4013: Successfully shut down Oracle Autonomous Health Framework (AHF).

2024/10/20 17:41:39 CLSRSC-672: Post-patch steps for patching GI home successfully completed.

[root@o23c1n1s2 ~]#
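The timestamps printed by root.sh are enough to measure how long the switch took on this node. A minimal sketch, assuming both times are on the same day (the function name `elapsed_seconds` is hypothetical):

```shell
# Hypothetical helper: prints the number of seconds between two
# HH:MM:SS times that belong to the same day.
# $1 = start time, $2 = end time
elapsed_seconds() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, x, ":"); split(b, y, ":")
    print (y[1] * 3600 + y[2] * 60 + y[3]) - (x[1] * 3600 + x[2] * 60 + x[3])
  }'
}
```

For the first CLSRSC-329 message (17:37:57) and the CLSRSC-672 message (17:41:39) above, `elapsed_seconds 17:37:57 17:41:39` prints 222, so a bit less than four minutes.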

We can see that the switch occurred between 2024/10/20 17:37:57 and 2024/10/20 17:41:39. Looking at the log, we can notice the stop and start of CRS (and some interesting messages):

2024-10-20 17:37:58:

2024-10-20 17:37:58: Successfully removed file: /etc/systemd/system/oracle-ohasd.service.d/00_oracle-ohasd.conf

2024-10-20 17:37:58: Executing cmd: /usr/bin/systemctl daemon-reload

2024-10-20 17:38:00: zip: stopping Oracle Clusterware stack ...

2024-10-20 17:38:00: Executing cmd: /u01/app/23.5.0.0/grid/bin/crsctl stop crs -tgip -f

2024-10-20 17:38:51: Command output:

> CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'o23c1n1s2'

> CRS-2673: Attempting to stop 'ora.crsd' on 'o23c1n1s2'

> CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'o23c1n1s2'

…

…

> CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'o23c1n1s2' has completed

> CRS-4133: Oracle High Availability Services has been stopped.

>End Command output

2024-10-20 17:38:51: The return value of stop of CRS: 0

2024-10-20 17:38:51: Executing cmd: /u01/app/23.5.0.0/grid/bin/crsctl check crs

…

…

2024-10-20 17:38:51: no change to oracle ohasd service, skip installing it in systemd

2024-10-20 17:38:51: zip: the oracle ohasd service, ohasd service, and slice files have not changed, skip daemon-reload but restart the service

2024-10-20 17:38:51: zip: restart ohasd service by killing init ohasd pid=2595

2024-10-20 17:38:51: Transparent GI patching, skip patching USM drivers

2024-10-20 17:38:51: Updating OLR with the new Patch Level

2024-10-20 17:38:51: Executing cmd: /u01/app/23.6.0.0/grid/bin/clscfg -localpatch

2024-10-20 17:38:55: Command output:

> clscfg: EXISTING configuration version 0 detected.

> Creating OCR keys for user 'root', privgrp 'root'..

> Operation successful.

>End Command output

2024-10-20 17:38:55: Starting GI stack to set patch level in OCR and stop rolling patch

2024-10-20 17:38:55: Executing cmd: /u01/app/23.6.0.0/grid/bin/crsctl start crs -wait -tgip

2024-10-20 17:39:51: Command output:

> CRS-4123: Starting Oracle High Availability Services-managed resources

> CRS-2672: Attempting to start 'ora.cssd' on 'o23c1n1s2'

…

…

If you look at what was running during the patch, you can notice that the databases did not stop, but ASM and the listeners were restarted:

[root@o23c1n1s2 ~]# ps -ef |grep smon

root 5770 1 1 15:14 ? 00:01:27 /u01/app/23.5.0.0/grid/bin/osysmond.bin

grid 6480 1 0 15:15 ? 00:00:00 asm_smon_+ASM1

oracle 7170 1 0 15:15 ? 00:00:00 ora_smon_o23ne1

oracle 7514 1 0 15:15 ? 00:00:00 ora_smon_o19c1

root 136141 134781 0 17:38 pts/6 00:00:00 grep --color=auto smon

[root@o23c1n1s2 ~]# ps -ef |grep lsnr

root 5872 5810 0 15:14 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/crfelsnr -n o23c1n1s2

grid 6209 1 0 15:15 ? 00:00:12 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 6259 1 0 15:15 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

grid 9078 1 0 15:16 ? 00:00:03 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN1 -no_crs_notify -inherit

grid 9084 1 0 15:16 ? 00:00:03 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

root 136164 134781 0 17:38 pts/6 00:00:00 grep --color=auto lsnr

[root@o23c1n1s2 ~]#

[root@o23c1n1s2 ~]# ps -ef |grep smon

oracle 7170 1 0 15:15 ? 00:00:00 ora_smon_o23ne1

oracle 7514 1 0 15:15 ? 00:00:00 ora_smon_o19c1

root 140246 134781 0 17:38 pts/6 00:00:00 grep --color=auto smon

[root@o23c1n1s2 ~]# ps -ef |grep lsnr

grid 6209 1 0 15:15 ? 00:00:13 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

root 140438 134781 0 17:38 pts/6 00:00:00 grep --color=auto lsnr

[root@o23c1n1s2 ~]#

[root@o23c1n1s2 ~]# ps -ef |grep smon

oracle 7170 1 0 15:15 ? 00:00:00 ora_smon_o23ne1

oracle 7514 1 0 15:15 ? 00:00:00 ora_smon_o19c1

root 142699 1 1 17:39 ? 00:00:03 /u01/app/23.6.0.0/grid/bin/osysmond.bin

grid 146818 1 0 17:39 ? 00:00:00 asm_smon_+ASM1

root 167971 134781 0 17:43 pts/6 00:00:00 grep --color=auto smon

[root@o23c1n1s2 ~]# ps -ef |grep lsnr

root 143127 142839 0 17:39 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/crfelsnr -n o23c1n1s2

grid 144540 1 0 17:39 ? 00:00:01 /u01/app/23.6.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 144582 1 0 17:39 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

grid 144700 1 0 17:39 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

root 167992 134781 0 17:43 pts/6 00:00:00 grep --color=auto lsnr

[root@o23c1n1s2 ~]#
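The comparison I did by eye (same PID and same start time for the database SMON processes before and after) can also be scripted. This is a sketch with hypothetical function names, and it assumes the `ps -ef` column layout shown above (PID in column 2, start time in column 5, process name in the last column).

```shell
# Hypothetical helper: reads `ps -ef` output on stdin and prints
# "PID STIME NAME" for database SMON processes only (ora_smon_*),
# sorted, so that two snapshots can be compared as plain text.
db_smon_pids() {
  awk '$NF ~ /^ora_smon_/ { print $2, $5, $NF }' | sort
}

# Hypothetical helper: succeeds (exit code 0) when the database SMON
# processes are identical in two saved `ps -ef` snapshots.
# $1 = snapshot taken before the switch, $2 = snapshot taken after
db_unchanged() {
  [ "$(printf '%s\n' "$1" | db_smon_pids)" = "$(printf '%s\n' "$2" | db_smon_pids)" ]
}
```

The ASM and osysmond lines are ignored on purpose, since those are expected to change when the CRS stack moves to the new home.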

And our inserts kept running the whole time (compare the date/time columns with the log of the patch, at the stop and start of CRS):

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

11131 1 Loop - EZconnect 20/10/2024 17:38:06 20/10/2024 16:53:59

2248 2 Loop - EZconnect 20/10/2024 17:36:46 20/10/2024 16:53:59

5283 1 Loop - Sqlplus 20/10/2024 17:38:06 20/10/2024 16:53:53

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

11222 1 Loop - EZconnect 20/10/2024 17:38:36 20/10/2024 16:53:59

2270 2 Loop - EZconnect 20/10/2024 17:38:32 20/10/2024 16:53:59

5343 1 Loop - Sqlplus 20/10/2024 17:38:36 20/10/2024 16:53:53

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

11287 1 Loop - EZconnect 20/10/2024 17:39:14 20/10/2024 16:53:59

2370 2 Loop - EZconnect 20/10/2024 17:39:15 20/10/2024 16:53:59

5420 1 Loop - Sqlplus 20/10/2024 17:39:14 20/10/2024 16:53:53

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

11299 1 Loop - EZconnect 20/10/2024 17:39:21 20/10/2024 16:53:59

2375 2 Loop - EZconnect 20/10/2024 17:39:21 20/10/2024 16:53:59

5433 1 Loop - Sqlplus 20/10/2024 17:39:21 20/10/2024 16:53:53

SQL>

And by the time root.sh ended on the first node, we can see that the databases were not restarted, but the listeners and ASM were.

When root.sh finishes on node 01, it can be executed on the remaining nodes (since there are only two nodes, this is the last one, and you can see that the post-patch checks were executed). Below you can see that I run ps to check the running services and note the start time of the database processes, and after the patch finishes I run the same command to demonstrate that only the CRS services were restarted:

[root@o23c1n2s2 ~]# ps -ef |grep smon

root 4795 1 0 15:19 ? 00:01:20 /u01/app/23.5.0.0/grid/bin/osysmond.bin

grid 5727 1 0 15:19 ? 00:00:00 asm_smon_+ASM2

oracle 6300 1 0 15:20 ? 00:00:00 ora_smon_o23ne2

oracle 6679 1 0 15:20 ? 00:00:00 ora_smon_o19c2

root 121768 120925 0 17:45 pts/3 00:00:00 grep --color=auto smon

[root@o23c1n2s2 ~]# ps -ef |grep lsnr

root 4896 4838 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/crfelsnr -n o23c1n2s2

grid 5298 1 0 15:19 ? 00:00:03 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 5329 1 0 15:19 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

grid 106916 1 0 17:38 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN1 -no_crs_notify -inherit

grid 106934 1 0 17:38 ? 00:00:00 /u01/app/23.5.0.0/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

root 121881 120925 0 17:45 pts/3 00:00:00 grep --color=auto lsnr

[root@o23c1n2s2 ~]#

[root@o23c1n2s2 ~]# /u01/app/23.6.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/23.6.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

RAC option enabled on: Linux

Executing command '/u01/app/23.6.0.0/grid/perl/bin/perl -I/u01/app/23.6.0.0/grid/perl/lib -I/u01/app/23.6.0.0/grid/crs/install /u01/app/23.6.0.0/grid/crs/install/rootcrs.pl -dstcrshome /u01/app/23.6.0.0/grid -transparent -nodriverupdate -prepatch'

Using configuration parameter file: /u01/app/23.6.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/o23c1n2s2/crsconfig/crs_prepatch_apply_oop_o23c1n2s2_2024-10-20_05-44-11PM.log

Initializing ...

Performing following verification checks ...

cluster upgrade state ...PASSED

OLR Integrity ...PASSED

Hosts File ...PASSED

Free Space: o23c1n2s2:/ ...PASSED

Software home: /u01/app/23.5.0.0/grid ...PASSED

Pre-check for Patch Application was successful.

CVU operation performed: stage -pre patch

Date: Oct 20, 2024, 5:44:42 PM

CVU version: 23.5.0.24.7 (070324x8664)

Clusterware version: 23.0.0.0.0

CVU home: /u01/app/23.5.0.0/grid

Grid home: /u01/app/23.5.0.0/grid

User: grid

Operating system: Linux5.4.17-2136.324.5.3.el8uek.x86_64

2024/10/20 17:45:32 CLSRSC-671: Pre-patch steps for patching GI home successfully completed.

Executing command '/u01/app/23.6.0.0/grid/perl/bin/perl -I/u01/app/23.6.0.0/grid/perl/lib -I/u01/app/23.6.0.0/grid/crs/install /u01/app/23.6.0.0/grid/crs/install/rootcrs.pl -dstcrshome /u01/app/23.6.0.0/grid -transparent -nodriverupdate -postpatch'

Using configuration parameter file: /u01/app/23.6.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/o23c1n2s2/crsconfig/crs_postpatch_apply_oop_o23c1n2s2_2024-10-20_05-45-34PM.log

2024/10/20 17:46:24 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd_dummy.service'

2024/10/20 17:46:57 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

2024/10/20 17:48:03 CLSRSC-4015: Performing install or upgrade action for Oracle Autonomous Health Framework (AHF).

2024/10/20 17:48:03 CLSRSC-4012: Shutting down Oracle Autonomous Health Framework (AHF).

2024/10/20 17:49:24 CLSRSC-4013: Successfully shut down Oracle Autonomous Health Framework (AHF).

Initializing ...

Performing following verification checks ...

cluster upgrade state ...PASSED

Post-check for Patch Application was successful.

CVU operation performed: stage -post patch

Date: Oct 20, 2024, 5:49:55 PM

CVU version: 23.6.0.24.10 (100824x8664)

Clusterware version: 23.0.0.0.0

CVU home: /u01/app/23.6.0.0/grid

Grid home: /u01/app/23.6.0.0/grid

User: grid

Operating system: Linux5.4.17-2136.324.5.3.el8uek.x86_64

2024/10/20 17:51:53 CLSRSC-672: Post-patch steps for patching GI home successfully completed.

[root@o23c1n2s2 ~]# 2024/10/20 17:53:47 CLSRSC-4003: Successfully patched Oracle Autonomous Health Framework (AHF).

[root@o23c1n2s2 ~]# ps -ef |grep smon

oracle 6300 1 0 15:20 ? 00:00:00 ora_smon_o23ne2

oracle 6679 1 0 15:20 ? 00:00:00 ora_smon_o19c2

root 129794 1 1 17:47 ? 00:00:04 /u01/app/23.6.0.0/grid/bin/osysmond.bin

grid 133450 1 0 17:47 ? 00:00:00 asm_smon_+ASM2

root 155777 120925 0 17:53 pts/3 00:00:00 grep --color=auto smon

[root@o23c1n2s2 ~]# ps -ef |grep lsnr

root 130215 129930 0 17:47 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/crfelsnr -n o23c1n2s2

grid 131655 1 0 17:47 ? 00:00:01 /u01/app/23.6.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 131683 1 0 17:47 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

grid 131854 1 0 17:47 ? 00:00:00 /u01/app/23.6.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

root 155852 120925 0 17:53 pts/3 00:00:00 grep --color=auto lsnr

[root@o23c1n2s2 ~]#
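A quick way to confirm the same thing without reading the `ps` output by eye is to list the distinct homes the listeners were started from. This is only a minimal sketch: the function name `listener_homes` is hypothetical, and it assumes the usual `ps -ef` layout shown above, where the command is the eighth column.

```shell
#!/bin/sh
# Print the distinct Grid homes that tnslsnr processes were started from.
# Reads `ps -ef` output on stdin; assumes the command starts at column 8.
listener_homes() {
  awk '{
    for (i = 8; i <= NF; i++)
      if ($i ~ /\/bin\/tnslsnr$/) {
        sub(/\/bin\/tnslsnr$/, "", $i)   # keep only the home directory
        print $i
      }
  }' | sort -u
}

# Usage (on a cluster node):
#   ps -ef | listener_homes
```

After the switch, the expectation is a single line with the new home. If the old home still shows up, some listener was not restarted from the new one.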

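So, it is possible to see that the databases were up and running the whole time: the ora_smon processes were started at 15:20, while the GI processes were restarted from the new home at 17:47. The connections continued to insert data on both nodes: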

SQL> /

COUNT(*) C1 C2 LAST_INS FIRST_INS

---------- ---------- -------------------- ------------------- -------------------

13117 1 Loop - EZconnect 20/10/2024 17:55:12 20/10/2024 16:53:59

4722 2 Loop - EZconnect 20/10/2024 17:54:35 20/10/2024 16:53:59

7328 1 Loop - Sqlplus 20/10/2024 17:55:12 20/10/2024 16:53:53

SQL>

This file is the full output from the SQL*Plus and PL/SQL execution. Notice that no downtime message was shown, and even the session that was already connected never stopped inserting.
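If you prefer a number instead of scanning the output, the largest pause between two consecutive inserts can be computed from a list of insert timestamps. The sketch below is only an illustration under some assumptions: the timestamps were exported one per line in the same `DD/MM/YYYY HH:MI:SS` format shown above, they are in ascending order, and they all belong to the same day. The function name `max_insert_gap` is hypothetical.

```shell
#!/bin/sh
# Print the largest gap, in seconds, between consecutive timestamps.
# Input on stdin: one "DD/MM/YYYY HH:MI:SS" per line, ascending, same day.
max_insert_gap() {
  awk '{
    split($2, t, ":")                       # t[1]=HH, t[2]=MI, t[3]=SS
    s = t[1] * 3600 + t[2] * 60 + t[3]      # seconds since midnight
    if (NR > 1 && s - prev > max) max = s - prev
    prev = s
  } END { print max + 0 }'
}

# Usage, with a hypothetical export file:
#   max_insert_gap < insert_times.txt
```

A gap much bigger than the sleep interval of the insert loop would point to a pause during the patch.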

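If you would like to understand why, this is basically because the database instance was relocated away from its local ASM instance and registered as a Flex client with the ASM instance on the remaining node. This is Flex ASM, and the alert log shows the relocation from +ASM1 to +ASM2: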

2024-10-20T17:38:04.899942+02:00

ALTER SYSTEM SET _asm_asmb_rcvto=60 SCOPE=MEMORY;

2024-10-20T17:38:04.924714+02:00

ALTER SYSTEM SET _asm_asmb_max_wait_timeout=60 SCOPE=MEMORY;

2024-10-20T17:38:05.056174+02:00

ALTER SYSTEM RELOCATE CLIENT TO '+ASM2'

2024-10-20T17:38:15.061808+02:00

NOTE: ASMB (7543) relocating from ASM instance +ASM1 to +ASM2 (User initiated)

2024-10-20T17:38:16.999014+02:00

NOTE: ASMB (index:0) registering with ASM instance as Flex client 0xcde9b1707cdd5761 (reg:5540864) (startid:1182870941) (reconnect)

NOTE: ASMB (index:0) (7543) connected to ASM instance +ASM2, osid: 106767 (Flex mode; client id 0xcde9b1707cdd5761)

NOTE: ASMB (7543) rebuilding ASM server state for all pending groups

NOTE: ASMB (7543) rebuilding ASM server state for group 1 (DATA)

2024-10-20T17:38:18.189032+02:00

NOTE: ASMB (7543) rebuilt 1 (of 1) groups

NOTE: ASMB (7543) rebuilt 21 (of 26) allocated files

NOTE: (7543) fetching new locked extents from server

NOTE: ASMB (7543) 0 locks established; 0 pending writes sent to server

SUCCESS: ASMB (7543) reconnected & completed ASM server state for disk group 1

NOTE: ASMB (7543) rebuilding ASM server state for group 2 (RECO)

NOTE: ASMB (7543) rebuilt 1 (of 1) groups

NOTE: ASMB (7543) rebuilt 5 (of 26) allocated files

NOTE: (7543) fetching new locked extents from server

NOTE: ASMB (7543) 0 locks established; 0 pending writes sent to server

SUCCESS: ASMB (7543) reconnected & completed ASM server state for disk group 2

2024-10-20T17:39:21.216560+02:00

ALTER SYSTEM SET local_listener=' (ADDRESS=(PROTOCOL=TCP)(HOST=10.160.23.14)(PORT=1521))' SCOPE=MEMORY SID='o19c1';

2024-10-20T17:39:21.434298+02:00

ALTER SYSTEM SET remote_listener=' o23c1s2-scan:1521' SCOPE=MEMORY SID='o19c1';

2024-10-20T17:39:21.438054+02:00

ALTER SYSTEM SET listener_networks='' SCOPE=MEMORY SID='o19c1';

2024-10-20T17:39:22.740276+02:00

ALTER SYSTEM SET _asm_asmb_rcvto=10 SCOPE=MEMORY;

2024-10-20T17:39:22.744926+02:00

ALTER SYSTEM SET _asm_asmb_max_wait_timeout=6 SCOPE=MEMORY;

2024-10-20T17:39:28.603041+02:00

ALTER SYSTEM SET remote_listener=' o23c1s2-scan:1521' SCOPE=MEMORY SID='o19c1';

2024-10-20T17:46:34.397059+02:00
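To find these relocations in a long alert log, a simple filter on the ASMB message is enough. This is a minimal sketch: the function name `asmb_relocations` is hypothetical, and the pattern is based only on the message text shown in the log above, so it may need adjustment for other versions.

```shell
#!/bin/sh
# Print "source -> target" for every ASMB relocation message.
# Reads the files given as arguments, or stdin when none is given.
asmb_relocations() {
  grep -E 'ASMB \([0-9]+\) relocating from ASM instance' "$@" |
    sed -E 's/.*relocating from ASM instance (\+ASM[0-9]+) to (\+ASM[0-9]+).*/\1 -> \2/'
}

# Usage (the alert log path depends on your diagnostic destination):
#   asmb_relocations /path/to/alert_<SID>.log
```

For the log above, the output would be a single line showing the move from `+ASM1` to `+ASM2`.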

State of ACFS and AFD drivers

After the switch, we can see that the drivers were not updated. This is expected, since skipping the driver update was the option requested for the patch. The new driver versions are available in the new home (software version), but the active versions are still the ones from 23.5:

[root@o23c1n1s2 ~]# su - grid

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$ echo $ORACLE_HOME

/u01/app/23.6.0.0/grid

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$ $ORACLE_HOME/bin/crsctl query driver activeversion -all

Node Name : o23c1n1s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n1s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : ACFS

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

Node Name : o23c1n2s2

Driver Name : AFD

BuildNumber : 240702.1

BuildVersion : 23.0.0.0.0 (23.5.0.24.07)

[grid@o23c1n1s2 ~]$ $ORACLE_HOME/bin/crsctl query driver softwareversion -all

Node Name : o23c1n1s2

Driver Name : ACFS

BuildNumber : 241006

BuildVersion : 23.0.0.0.0 (23.6.0.24.10)

Node Name : o23c1n1s2

Driver Name : AFD

BuildNumber : 241006

BuildVersion : 23.0.0.0.0 (23.6.0.24.10)

Node Name : o23c1n2s2

Driver Name : ACFS

BuildNumber : 241006

BuildVersion : 23.0.0.0.0 (23.6.0.24.10)

Node Name : o23c1n2s2

Driver Name : AFD

BuildNumber : 241006

BuildVersion : 23.0.0.0.0 (23.6.0.24.10)

[grid@o23c1n1s2 ~]$

[grid@o23c1n1s2 ~]$ $ORACLE_HOME/bin/acfsdriverstate version

ACFS-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

ACFS-9326: Driver build number = 240702.1.

ACFS-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

ACFS-9547: Driver available build number = 241006.

ACFS-9232: Driver available build version = 23.0.0.0.0 (23.6.0.24.10).

[grid@o23c1n1s2 ~]$ $ORACLE_HOME/bin/afddriverstate version

AFD-9325: Driver OS kernel version = 5.4.17-2011.0.7.el8uek.x86_64.

AFD-9326: Driver build number = 240702.1.

AFD-9231: Driver build version = 23.0.0.0.0 (23.5.0.24.07).

AFD-9547: Driver available build number = 241006.

AFD-9232: Driver available build version = 23.0.0.0.0 (23.6.0.24.10).

[grid@o23c1n1s2 ~]$
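With more nodes, comparing the two `crsctl query driver` outputs by eye gets tedious. The sketch below compares them and lists the node/driver pairs where the active build differs from the available one. It is only an illustration: the function name `pending_driver_updates` is hypothetical, and it assumes both outputs were saved to files and follow the `Name : value` layout shown above.

```shell
#!/bin/sh
# List node/driver pairs whose active build differs from the software build.
# $1: saved output of `crsctl query driver activeversion -all`
# $2: saved output of `crsctl query driver softwareversion -all`
pending_driver_updates() {
  awk -F' *: *' '
    { sub(/^[ \t]+/, ""); sub(/[ \t]+$/, "") }   # trim; fields are re-split
    $1 == "Node Name"   { node = $2 }
    $1 == "Driver Name" { drv = $2 }
    $1 == "BuildNumber" {
      key = node " " drv
      if (FILENAME == ARGV[1]) active[key] = $2    # first file: active builds
      else if (active[key] != $2)                  # second file: compare
        print key, "active=" active[key], "available=" $2
    }
  ' "$1" "$2"
}

# Usage (file names are hypothetical):
#   $ORACLE_HOME/bin/crsctl query driver activeversion -all   > /tmp/active.txt
#   $ORACLE_HOME/bin/crsctl query driver softwareversion -all > /tmp/software.txt
#   pending_driver_updates /tmp/active.txt /tmp/software.txt
```

An empty result means the active drivers already match the new home. For the output above, all four node/driver pairs would be listed.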

I will show how to update the drivers in a dedicated post. It is only one step, executed on each node, but it requires downtime since it stops all resources running on the node. I even recommend restarting the machine to have a clean kernel start with the new drivers.

Conclusion

Patching with the Zero-Downtime Oracle Grid Infrastructure Patching (ZDOGIP) and the Gold Image still allows the upgrade of the GI without interrupting the databases. This is important for environments where the remaining nodes can’t absorb the whole load of the database instances running on the node being patched.

The important detail concerns the AFD and ACFS kernel drivers. If the skipDriverUpdate option is not specified, root.sh will shut down all the databases while it runs, causing downtime. You also need to be aware of the compatibility matrix between the new GI version and the OS kernel.
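One way to double-check, after the fact, that the switch really skipped the driver update is to look at the `rootcrs.pl` calls printed by root.sh. In the output earlier in this post they carry the `-transparent` and `-nodriverupdate` options. A minimal sketch, assuming the root.sh output was saved to a file (the function name `ran_without_driver_update` is hypothetical):

```shell
#!/bin/sh
# Succeed (exit 0) when the captured root.sh output shows rootcrs.pl being
# called with both -transparent and -nodriverupdate.
# Reads the files given as arguments, or stdin when none is given.
ran_without_driver_update() {
  grep -Eq 'rootcrs\.pl .*-transparent .*-nodriverupdate' "$@"
}

# Usage (the capture file name is hypothetical):
#   if ran_without_driver_update /tmp/root_sh_output.log; then
#     echo "driver update was skipped"
#   else
#     echo "options not found, review the root.sh output"
#   fi
```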

Lastly, it is important to notice that using the Gold Image requires two steps: first the installation, and then the switch. This is a good thing, because it allows us to prepare the installation without any impact on the running databases and do the switch later, in a maintenance window. This reduces even further the possibility of impact on the environment.

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies, or opinions. The information here was edited to be useful for general purposes, and specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”


If you are testing on a non-Engineered System, please don’t forget to check this post: https://www.fernandosimon.com/blog/asmca_args/