The process to patch the Exadata stack and software has changed over the last few years and has become easier. Now that patchmgr is used for everything (database servers, storage cells, and switches), the steps are much easier to control. Here I will show the steps involved in this process.

Whether it is ZDLRA or Exadata, the process for an Engineered System is the same, so this post can be used as a guide for applying the Exadata patch as well. In 2018 I wrote a similar post about how to patch/upgrade Exadata to 18c (you can access here) and even made a partial/incomplete post for 12c in 2015.

The process is very similar and can be done in rolling or non-rolling mode. In rolling mode, the services continue to run and you don’t need to shut down the databases, but it takes more time because patchmgr applies the patch server by server. In non-rolling mode, you need to shut down the entire GI; the patch is then applied in parallel and is faster.

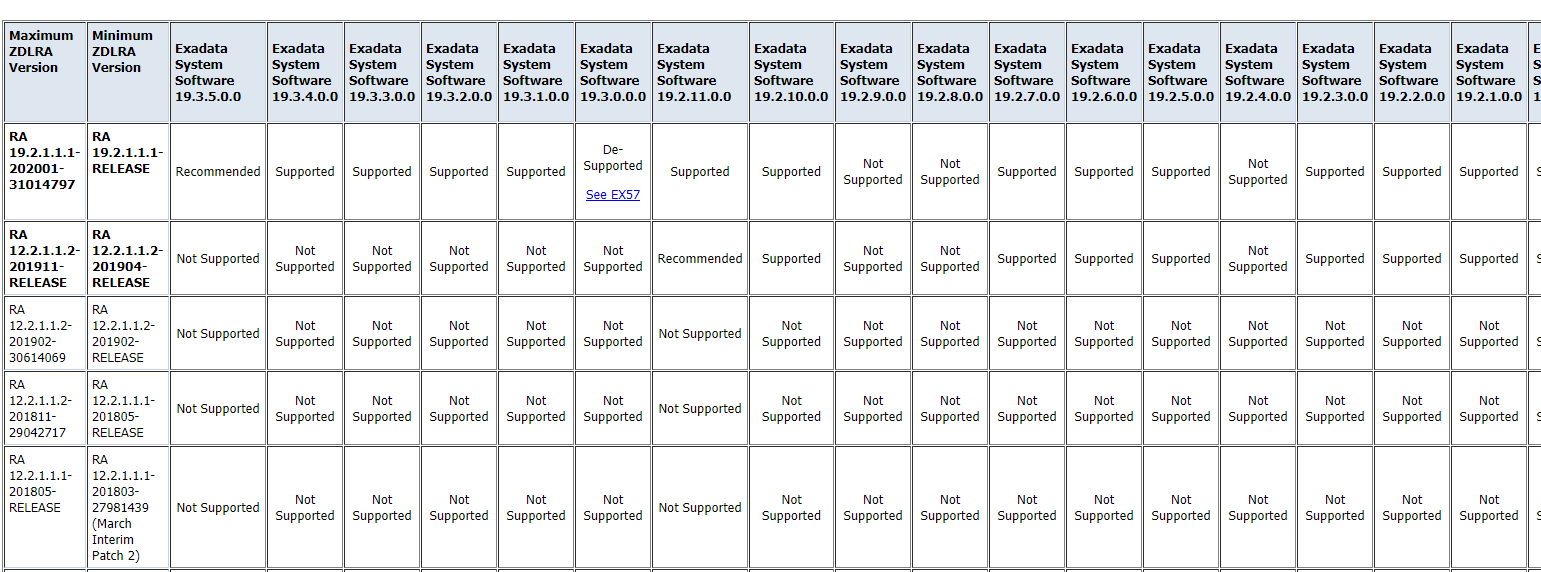

Exadata Version for ZDLRA

The Exadata version that can be used for ZDLRA needs to be marked as supported by the ZDLRA team and needs to be compatible with the version that you are running. This compatibility matrix is found in the note Zero Data Loss Recovery Appliance Supported Versions (Doc ID 1927416.1).

I posted how to upgrade ZDLRA here. The first step toward downloading the correct version is discovering which version of ZDLRA you are running. To do that, use racli version:

[root@zerosita01 ~]# racli version

Recovery Appliance Version:

exadata image: 19.2.3.0.0.190621

rarpm version: ra_automation-19.2.1.1.1.202001-RELEASE.x86_64

rdbms version: RDBMS_19.3.0.0.190416DBRU_LINUX.X64_RELEASE

transaction :

zdlra version: ZDLRA_19.2.1.1.1.202001_LINUX.X64_RELEASE

[root@zerosita01 ~]#

With that, check which versions are compatible in the matrix:

So, we can see that version 19.2.1.1.1.202001 is compatible with several Exadata versions. In this example, I will use version 19.3.2.0.0.

Prechecks

Now we start patching, whatever Engineered System you are using: Exadata, ZDLRA, or SuperCluster. The process is almost the same for all of them.

I recommend doing some prechecks before starting the patch itself. The first is to check the current Exadata version running on the database servers, storage servers, and switches. With this you can see whether you need to apply intermediate patches because you are on an old version (this usually happens with switches).

[root@zerosita01 radump]# dcli -l root -g /root/cell_group "imageinfo" |grep "Active image version"

zerocell01: Active image version: 19.2.3.0.0.190621

zerocell02: Active image version: 19.2.3.0.0.190621

zerocell03: Active image version: 19.2.3.0.0.190621

zerocell04: Active image version: 19.2.3.0.0.190621

zerocell05: Active image version: 19.2.3.0.0.190621

zerocell06: Active image version: 19.2.3.0.0.190621

[root@zerosita01 radump]# dcli -l root -g /root/db_group "imageinfo" |grep "Image version"

zerosita01: Image version: 19.2.3.0.0.190621

zerosita02: Image version: 19.2.3.0.0.190621

[root@zerosita01 radump]# dcli -l root -g /root/ib_group "version" |grep version

zdls-iba01: SUN DCS 36p version: 2.2.12-2

zdls-iba01: BIOS version: NUP1R918

zdls-ibb01: SUN DCS 36p version: 2.2.12-2

zdls-ibb01: BIOS version: NUP1R918

[root@zerosita01 radump]# dcli -l root -g /root/ib_group "version" |grep "36p"

zdls-iba01: SUN DCS 36p version: 2.2.12-2

zdls-ibb01: SUN DCS 36p version: 2.2.12-2

[root@zerosita01 radump]#
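With more nodes, comparing the version list by eye gets error-prone. As a minimal sketch, the grep output can be piped through a small check that confirms every node reports the same version. The function names distinct_versions and all_same_version are my own hypothetical helpers, not part of dcli or imageinfo, and they assume the version is the last field on each line:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of dcli/imageinfo). They read lines such as
#   zerocell01: Active image version: 19.2.3.0.0.190621
# from stdin, where the version is the last whitespace-separated field.

# Print each distinct version once.
distinct_versions() {
  awk 'NF { print $NF }' | sort -u
}

# Return 0 only when exactly one distinct version is present.
all_same_version() {
  awk 'NF { seen[$NF] = 1 }
       END { n = 0; for (v in seen) n++; exit (n == 1 ? 0 : 1) }'
}

# Example with two of the cell lines captured in this post:
printf '%s\n' \
  'zerocell01: Active image version: 19.2.3.0.0.190621' \
  'zerocell02: Active image version: 19.2.3.0.0.190621' |
  all_same_version && echo "all cells on the same image"
```

On the real machine the input would come from the same pipeline shown in the listing, for example dcli -l root -g /root/cell_group "imageinfo" | grep "Active image version" | all_same_version.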

After that, I recommend restarting the ILOM of the database and storage servers (if you do it for a switch, the entire switch will restart). This avoids an fsck of the ILOM filesystem caused by a long uptime (on old machines like V2 and X2, the fsck can take a long time and trigger the timeout and rollback of patchmgr), and it reveals any hardware error that has not been reported yet:

[root@zerosita01 radump]# dcli -g /root/cell_group -l root 'ipmitool mc reset cold'

zerocell01: Sent cold reset command to MC

zerocell02: Sent cold reset command to MC

zerocell03: Sent cold reset command to MC

zerocell04: Sent cold reset command to MC

zerocell05: Sent cold reset command to MC

zerocell06: Sent cold reset command to MC

[root@zerosita01 radump]# dcli -g /root/db_group -l root 'ipmitool mc reset cold'

zerosita01: Sent cold reset command to MC

zerosita02: Sent cold reset command to MC

[root@zerosita01 radump]#

######################################################

After some time

######################################################

[root@zerosita01 radump]# dcli -l root -g /root/cell_group "ipmitool sunoem led get" |grep ": SERVICE"

zerocell01: SERVICE | OFF

zerocell02: SERVICE | OFF

zerocell03: SERVICE | OFF

zerocell04: SERVICE | OFF

zerocell05: SERVICE | OFF

zerocell06: SERVICE | OFF

[root@zerosita01 radump]# dcli -l root -g /root/db_group "ipmitool sunoem led get" |grep ": SERVICE"

zerosita01: SERVICE | OFF

zerosita02: SERVICE | OFF

[root@zerosita01 radump]#

As you can see, after the ILOM restart there is no hardware error (the service LEDs are off).
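The LED check can also be turned into a filter that prints only the hosts needing attention. This is a rough sketch under the assumption that every line has the "host: SERVICE | state" shape seen in this post; service_led_faults is a hypothetical helper name, and the "ON" line in the example is made up to show a fault being reported:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list hosts whose SERVICE LED is not OFF.
# Expects lines such as "zerocell01: SERVICE | OFF" on stdin.
service_led_faults() {
  awk -F'[:|]' 'NF >= 3 {
    state = $NF
    gsub(/[ \t]/, "", state)      # strip padding around the LED state
    if (state != "OFF") print $1  # $1 is the hostname before the colon
  }'
}

# Example: the second line is invented to show a fault being reported.
printf '%s\n' 'zerocell01: SERVICE | OFF' 'zerocell02: SERVICE | ON' |
  service_led_faults
```

An empty result means no host reported a lit service LED.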

Downloading the patch

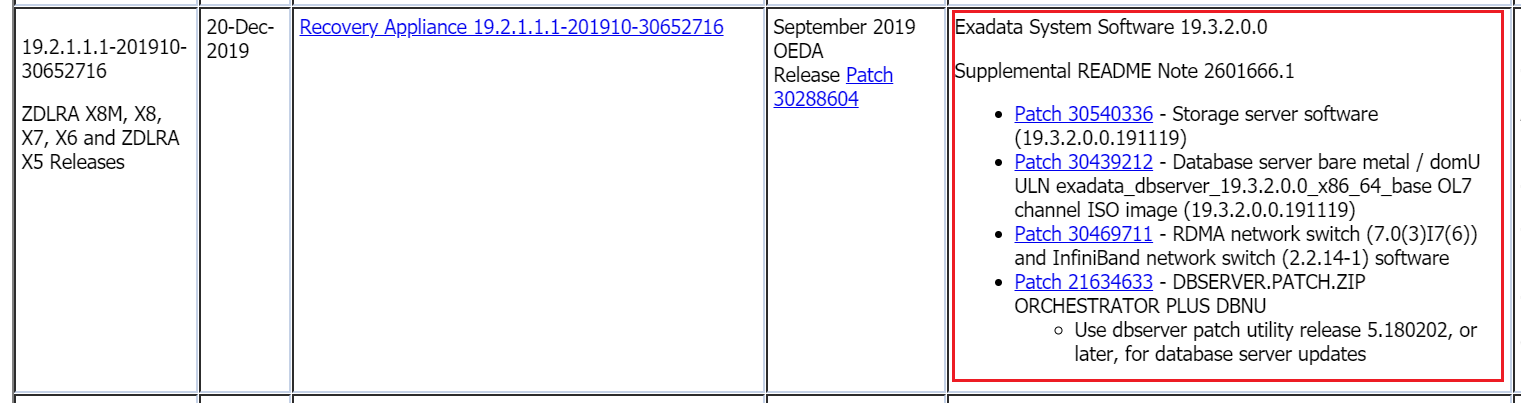

ZDLRA

To download the Exadata patch, the correct place is the note Zero Data Loss Recovery Appliance Supported Versions (Doc ID 1927416.1). There we can match the ZDLRA version that we are using with the Exadata version:

If the link does not work (which is common), the patch can be downloaded from the Exadata note 888828.1. Be careful to download the same patch numbers.

I recommend downloading the patches to the /radump folder. If you want, you can create a specific folder for them:

[root@zerosita01 radump]# mkdir exa_stack

[root@zerosita01 radump]# cd exa_stack/

[root@zerosita01 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/patchmgr/p21634633_193100_Linux-x86-64.zip ./

[root@zerosita01 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/dbnode/p30439212_193000_Linux-x86-64.zip ./

[root@zerosita01 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/switch/p30469711_193000_Linux-x86-64.zip ./

[root@zerosita01 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/storage/p30540336_193000_Linux-x86-64.zip ./

[root@zerosita01 exa_stack]#

After the download, we can unzip the patches for the InfiniBand/RoCE switches, the storage cells, and the database patch manager. The patch for the database servers does not need to be unzipped:

[root@zerosita01 exa_stack]# unzip p21634633_193100_Linux-x86-64.zip

Archive: p21634633_193100_Linux-x86-64.zip

creating: dbserver_patch_19.191113/

...

...

inflating: dbserver_patch_19.191113/patchmgr_functions

inflating: dbserver_patch_19.191113/imageLogger

inflating: dbserver_patch_19.191113/ExaXMLNode.pm

[root@zerosita01 exa_stack]# unzip p30439212_193000_Linux-x86-64.zip

Archive: p30439212_193000_Linux-x86-64.zip

inflating: exadata_ol7_base_repo_19.3.2.0.0.191119.iso

inflating: README.html

[root@zerosita01 exa_stack]# unzip p30469711_193000_Linux-x86-64.zip

Archive: p30469711_193000_Linux-x86-64.zip

creating: patch_switch_19.3.2.0.0.191119/

inflating: patch_switch_19.3.2.0.0.191119/nxos.7.0.3.I7.6.bin

...

...

inflating: patch_switch_19.3.2.0.0.191119/patchmgr_functions

[root@zerosita01 exa_stack]# unzip p30540336_193000_Linux-x86-64.zip

Archive: p30540336_193000_Linux-x86-64.zip

creating: patch_19.3.2.0.0.191119/

inflating: patch_19.3.2.0.0.191119/dostep.sh

...

...

inflating: patch_19.3.2.0.0.191119/19.3.2.0.0.191119.iso

creating: patch_19.3.2.0.0.191119/linux.db.rpms/

inflating: patch_19.3.2.0.0.191119/README.html

[root@zerosita01 exa_stack]#

Exadata

For Exadata specifically, the right place to download from is note 888828.1. There, you can choose the version that you want.

After that, you can put the files in one specific folder and unzip them (as shown for ZDLRA above).

Shutdown ZDLRA

This step is needed only for ZDLRA, to shut it down (the “software” and the database). Just log in with sqlplus as the rasys user and execute dbms_ra.shutdown, then stop the database with srvctl:

[root@zerosita01 exa_stack]# su - oracle

Last login: Wed Mar 4 14:07:44 CET 2020 from 99.234.123.138 on ssh

[oracle@zerosita01 ~]$ sqlplus rasys/xxxxxx

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Mar 4 14:10:07 2020

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Last Successful login time: Wed Mar 04 2020 14:00:02 +01:00

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

SQL> SELECT state FROM ra_server;

STATE

-------------

ON

SQL> exec dbms_ra.shutdown;

PL/SQL procedure successfully completed.

SQL> SELECT state FROM ra_server;

STATE

-------------

OFF

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

[oracle@zerosita01 ~]$ srvctl stop database -d zdlras

[oracle@zerosita01 ~]$

Patching

Pre-Patch

I recommend using screen so that your session is not killed by a firewall or network issue during the patch. It creates a session that simulates a console connection, and you can reconnect to it if needed. You can download it from https://linux.oracle.com/.

[root@zerosita01 exa_stack]# rpm -Uvh /zfs/ZDLRA_PATCHING/19.2.1.1.1/screen-4.1.0-0.25.20120314git3c2946.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:screen-4.1.0-0.25.20120314git3c29################################# [100%]

[root@zerosita01 exa_stack]# screen -L -RR Patch-Exadata-Stack

[root@zerosita01 exa_stack]# pwd

/radump/exa_stack

[root@zerosita01 exa_stack]#

Another detail is unmounting every NFS share that you are using (and removing or commenting out its entry in fstab too, to avoid mounting during boot). If not, you will receive a warning about that. This is important for Exadata because a long mount time during startup can force a rollback when the patch exceeds its time limit. If you don’t want to unmount (because you are patching in rolling mode), there is a flag that can be passed to patchmgr to avoid the mount during the reboot:

[root@zerosita01 exa_stack]# umount /zfs

[root@zerosita01 exa_stack]#

SUMMARY OF WARNINGS AND ERRORS FOR zerosita01:

zerosita01: WARNING: Active network mounts found on this DB node.

zerosita01: The following known issues will be checked for but require manual follow-up:

zerosita01: (*) - Yum rolling update requires fix for 11768055 when Grid Infrastructure is below 11.2.0.2 BP12
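For the fstab part, a filter like the following can prepare the commented version for review. It is only a sketch: comment_nfs_mounts is a hypothetical helper, it assumes the filesystem type is the third field (the usual fstab layout), and the entries in the example are made up. It reads stdin and writes stdout, so the real /etc/fstab is not touched:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out NFS entries of an fstab-style file.
# Reads stdin and writes stdout, so the real /etc/fstab is never modified.
comment_nfs_mounts() {
  awk '$1 !~ /^#/ && ($3 == "nfs" || $3 == "nfs4") { print "#" $0; next }
       { print }'
}

# Example with invented entries (device and export names are assumptions):
printf '%s\n' \
  '/dev/sda1 / ext4 defaults 1 1' \
  'zfs-server:/export/patches /zfs nfs rw,bg,hard 0 0' |
  comment_nfs_mounts
```

To apply it for real, keep a backup of /etc/fstab first and write the filtered output to a separate file, so you can compare both before replacing the original.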

You also need to create files containing the hostnames of the switches, database nodes, and storage servers (in this post: /root/ib_group, /root/db_group, and /root/cell_group).
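A minimal sketch of creating these files, one hostname per line, using the hostnames from this environment. GROUP_DIR and write_group_file are my own assumptions for a safe dry run: by default the files go to a temporary directory, while this post keeps them in /root:

```shell
#!/usr/bin/env bash
# Sketch: build the node list files read by dcli and patchmgr
# (one hostname per line).
# GROUP_DIR is an assumption for a safe dry run; this post uses /root.
GROUP_DIR=${GROUP_DIR:-$(mktemp -d)}

# write_group_file FILE HOST... : write one hostname per line into FILE.
write_group_file() {
  local target=$1
  shift
  printf '%s\n' "$@" > "$target"
}

write_group_file "$GROUP_DIR/db_group"   zerosita01 zerosita02
write_group_file "$GROUP_DIR/cell_group" zerocell01 zerocell02 zerocell03 \
                                         zerocell04 zerocell05 zerocell06
write_group_file "$GROUP_DIR/ib_group"   zdls-iba01 zdls-ibb01

echo "group files written to $GROUP_DIR"
```

Running it with GROUP_DIR=/root would write the files to the location used by the commands in this post.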

1 – Shutdown

This step depends on the mode you chose for patching. In rolling mode, you can skip it. Otherwise, continue with the shutdown.

Since the patch here is applied in non-rolling mode, all services running on the machine need to be shut down. To make sure nothing is left running, I stop the cluster services and then CRS on all nodes:

[root@zerosita01 exa_stack]# /u01/app/19.0.0.0/grid/bin/crsctl stop cluster -all

CRS-2673: Attempting to stop 'ora.crsd' on 'zerosita01'

CRS-2673: Attempting to stop 'ora.crsd' on 'zerosita02'

...

...

CRS-2673: Attempting to stop 'ora.diskmon' on 'zerosita02'

CRS-2677: Stop of 'ora.diskmon' on 'zerosita02' succeeded

[root@zerosita01 exa_stack]# /u01/app/19.0.0.0/grid/bin/crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'zerosita01'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'zerosita01'

...

...

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'zerosita01' has completed

CRS-4133: Oracle High Availability Services has been stopped.

[root@zerosita01 exa_stack]# ssh -q zerosita02

Last login: Wed Mar 4 14:15:09 CET 2020 from 99.234.123.138 on ssh

Last login: Wed Mar 4 14:15:57 2020 from zerosita01.zero.flisk.net

[root@zerosita02 ~]# /u01/app/19.0.0.0/grid/bin/crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'zerosita02'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'zerosita02'

...

...

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'zerosita02' has completed

CRS-4133: Oracle High Availability Services has been stopped.

[root@zerosita02 ~]# logout

[root@zerosita01 exa_stack]#
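With only two nodes it is easy to log in to each one, but on bigger racks I would rather generate the commands from the node list. The sketch below only prints the commands (a dry run) so they can be reviewed first. print_stop_crs_commands is a hypothetical helper, and GRID_HOME is assumed to be the same path on every node, as it is in this post:

```shell
#!/usr/bin/env bash
# Sketch: print (not run) the "crsctl stop crs" command for every database node.
# GRID_HOME matches the path used in this post; adjust it for your system.
GRID_HOME=${GRID_HOME:-/u01/app/19.0.0.0/grid}

# Reads hostnames from stdin, one per line, and skips empty lines.
print_stop_crs_commands() {
  local node
  while IFS= read -r node; do
    if [ -n "$node" ]; then
      printf 'ssh -q %s %s/bin/crsctl stop crs\n' "$node" "$GRID_HOME"
    fi
  done
}

# Example with the two database nodes of this environment:
printf '%s\n' zerosita01 zerosita02 | print_stop_crs_commands
```

On the real machine the input would be the node file, for example print_stop_crs_commands < /root/db_group.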

2 – Patch Switches

Stopping cells services

This part of the process is optional and applies only to non-rolling executions. The idea is to stop all services running on the storage cells to avoid IB port errors. This way, the cell “alerthistory” doesn’t report errors:

[root@zerosita01 patch_switch_19.3.2.0.0.191119]# dcli -l root -g /root/cell_group "cellcli -e list cell"

zerocell01: zerocell01 online

zerocell02: zerocell02 online

zerocell03: zerocell03 online

zerocell04: zerocell04 online

zerocell05: zerocell05 online

zerocell06: zerocell06 online

[root@zerosita01 patch_switch_19.3.2.0.0.191119]# dcli -l root -g /root/cell_group "cellcli -e alter cell shutdown services all"

zerocell01:

zerocell01: Stopping the RS, CELLSRV, and MS services...

zerocell01: The SHUTDOWN of services was successful.

zerocell02:

zerocell02: Stopping the RS, CELLSRV, and MS services...

zerocell02: The SHUTDOWN of services was successful.

zerocell03:

zerocell03: Stopping the RS, CELLSRV, and MS services...

zerocell03: The SHUTDOWN of services was successful.

zerocell04:

zerocell04: Stopping the RS, CELLSRV, and MS services...

zerocell04: The SHUTDOWN of services was successful.

zerocell05:

zerocell05: Stopping the RS, CELLSRV, and MS services...

zerocell05: The SHUTDOWN of services was successful.

zerocell06:

zerocell06: Stopping the RS, CELLSRV, and MS services...

zerocell06: The SHUTDOWN of services was successful.

[root@zerosita01 patch_switch_19.3.2.0.0.191119]#

After that, we can start patching the InfiniBand switches.

ibswitch_precheck

This step checks whether the patch can be applied to the IB switches. Some versions have requirements that must be met; if they are not, we need to fix them first (examples are a wrong hosts file or insufficient filesystem space).

To run it, we enter the folder of the unzipped switch patch (patch 30469711 in this scenario):

[root@zerosita01 exa_stack]# cd patch_switch_19.3.2.0.0.191119

[root@zerosita01 patch_switch_19.3.2.0.0.191119]#

[root@zerosita01 patch_switch_19.3.2.0.0.191119]#

[root@zerosita01 patch_switch_19.3.2.0.0.191119]# ./patchmgr -ibswitches /root/ib_group -upgrade -ibswitch_precheck

2020-03-04 14:19:21 +0100 :Working: Verify SSH equivalence for the root user to node(s)

2020-03-04 14:19:26 +0100 :SUCCESS: Verify SSH equivalence for the root user to node(s)

2020-03-04 14:19:29 +0100 1 of 1 :Working: Initiate pre-upgrade validation check on InfiniBand switch(es).

----- InfiniBand switch update process started 2020-03-04 14:19:29 +0100 -----

[NOTE ] Log file at /radump/exa_stack/patch_switch_19.3.2.0.0.191119/upgradeIBSwitch.log

[INFO ] List of InfiniBand switches for upgrade: ( zdls-iba01 zdls-ibb01 )

[SUCCESS ] Verifying Network connectivity to zdls-iba01

[SUCCESS ] Verifying Network connectivity to zdls-ibb01

[SUCCESS ] Validating verify-topology output

[INFO ] Master Subnet Manager is set to "zdls-iba01" in all Switches

[INFO ] ---------- Starting with InfiniBand Switch zdls-iba01

[WARNING ] Infiniband switch meets minimal version requirements, but downgrade is only available to 2.2.13-2 with the current package.

To downgrade to other versions:

- Manually download the InfiniBand switch firmware package to the patch directory

- Set export variable "EXADATA_IMAGE_IBSWITCH_DOWNGRADE_VERSION" to the appropriate version

- Run patchmgr command to initiate downgrade.

[SUCCESS ] Verify SSH access to the patchmgr host zerosita01.zero.flisk.net from the InfiniBand Switch zdls-iba01.

[INFO ] Starting pre-update validation on zdls-iba01

[SUCCESS ] Verifying that /tmp has 150M in zdls-iba01, found 492M

[SUCCESS ] Verifying that / has 20M in zdls-iba01, found 26M

[SUCCESS ] NTP daemon is running on zdls-iba01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 14:26:40

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-iba01

[SUCCESS ] Verifying that the patchmgr host zerosita01.zero.flisk.net is recognized on the InfiniBand Switch zdls-iba01 through getHostByName

[SUCCESS ] Execute plugin check for Patch Check Prereq on zdls-iba01

[INFO ] Finished pre-update validation on zdls-iba01

[SUCCESS ] Pre-update validation on zdls-iba01

[SUCCESS ] Prereq check on zdls-iba01

[INFO ] ---------- Starting with InfiniBand Switch zdls-ibb01

[WARNING ] Infiniband switch meets minimal version requirements, but downgrade is only available to 2.2.13-2 with the current package.

To downgrade to other versions:

- Manually download the InfiniBand switch firmware package to the patch directory

- Set export variable "EXADATA_IMAGE_IBSWITCH_DOWNGRADE_VERSION" to the appropriate version

- Run patchmgr command to initiate downgrade.

[SUCCESS ] Verify SSH access to the patchmgr host zerosita01.zero.flisk.net from the InfiniBand Switch zdls-ibb01.

[INFO ] Starting pre-update validation on zdls-ibb01

[SUCCESS ] Verifying that /tmp has 150M in zdls-ibb01, found 492M

[SUCCESS ] Verifying that / has 20M in zdls-ibb01, found 26M

[SUCCESS ] NTP daemon is running on zdls-ibb01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 14:27:13

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-ibb01

[SUCCESS ] Verifying that the patchmgr host zerosita01.zero.flisk.net is recognized on the InfiniBand Switch zdls-ibb01 through getHostByName

[SUCCESS ] Execute plugin check for Patch Check Prereq on zdls-ibb01

[INFO ] Finished pre-update validation on zdls-ibb01

[SUCCESS ] Pre-update validation on zdls-ibb01

[SUCCESS ] Prereq check on zdls-ibb01

[SUCCESS ] Overall status

----- InfiniBand switch update process ended 2020-03-04 14:20:54 +0100 -----

2020-03-04 14:20:54 +0100 1 of 1 :SUCCESS: Initiate pre-upgrade validation check on InfiniBand switch(es).

2020-03-04 14:20:54 +0100 :SUCCESS: Completed run of command: ./patchmgr -ibswitches /root/ib_group -upgrade -ibswitch_precheck

2020-03-04 14:20:54 +0100 :INFO : upgrade attempted on nodes in file /root/ib_group: [zdls-iba01 zdls-ibb01]

2020-03-04 14:20:54 +0100 :INFO : For details, check the following files in /radump/exa_stack/patch_switch_19.3.2.0.0.191119:

2020-03-04 14:20:54 +0100 :INFO : - upgradeIBSwitch.log

2020-03-04 14:20:54 +0100 :INFO : - upgradeIBSwitch.trc

2020-03-04 14:20:54 +0100 :INFO : - patchmgr.stdout

2020-03-04 14:20:54 +0100 :INFO : - patchmgr.stderr

2020-03-04 14:20:54 +0100 :INFO : - patchmgr.log

2020-03-04 14:20:54 +0100 :INFO : - patchmgr.trc

2020-03-04 14:20:54 +0100 :INFO : Exit status:0

2020-03-04 14:20:55 +0100 :INFO : Exiting.

[root@zerosita01 patch_switch_19.3.2.0.0.191119]#

If the status is a success, we can continue to the next step.
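If you capture the patchmgr output (for example in the screen log), the final status can be checked by a script instead of by eye. A minimal sketch, assuming the "Exit status:N" line format shown in the run above; patchmgr_exit_status is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the last "Exit status:N" value from captured
# patchmgr output (stdin). Prints nothing if no such line exists.
patchmgr_exit_status() {
  sed -n 's/.*Exit status:\([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Example with the status line from the precheck run in this post:
status=$(printf '%s\n' '2020-03-04 14:20:54 +0100 :INFO : Exit status:0' |
  patchmgr_exit_status)
if [ "$status" = "0" ]; then
  echo "precheck OK, safe to continue"
else
  echo "precheck failed or status not found" >&2
fi
```

Treating a missing status line as a failure is a deliberate choice here: an interrupted run should not be mistaken for a successful one.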

upgrade

After the precheck, we can call the upgrade. The call is the same, just without the -ibswitch_precheck flag:

[root@zerosita01 patch_switch_19.3.2.0.0.191119]# ./patchmgr -ibswitches /root/ib_group -upgrade

2020-03-04 14:30:46 +0100 :Working: Verify SSH equivalence for the root user to node(s)

2020-03-04 14:30:51 +0100 :SUCCESS: Verify SSH equivalence for the root user to node(s)

2020-03-04 14:30:54 +0100 1 of 1 :Working: Initiate upgrade of InfiniBand switches to 2.2.14-1. Expect up to 40 minutes for each switch

----- InfiniBand switch update process started 2020-03-04 14:30:54 +0100 -----

[NOTE ] Log file at /radump/exa_stack/patch_switch_19.3.2.0.0.191119/upgradeIBSwitch.log

[INFO ] List of InfiniBand switches for upgrade: ( zdls-iba01 zdls-ibb01 )

[SUCCESS ] Verifying Network connectivity to zdls-iba01

[SUCCESS ] Verifying Network connectivity to zdls-ibb01

[SUCCESS ] Validating verify-topology output

[INFO ] Proceeding with upgrade of InfiniBand switches to version 2.2.14_1

[INFO ] Master Subnet Manager is set to "zdls-iba01" in all Switches

[INFO ] ---------- Starting with InfiniBand Switch zdls-iba01

[WARNING ] Infiniband switch meets minimal version requirements, but downgrade is only available to 2.2.13-2 with the current package.

To downgrade to other versions:

- Manually download the InfiniBand switch firmware package to the patch directory

- Set export variable "EXADATA_IMAGE_IBSWITCH_DOWNGRADE_VERSION" to the appropriate version

- Run patchmgr command to initiate downgrade.

[SUCCESS ] Verify SSH access to the patchmgr host zerosita01.zero.flisk.net from the InfiniBand Switch zdls-iba01.

[INFO ] Starting pre-update validation on zdls-iba01

[SUCCESS ] Verifying that /tmp has 150M in zdls-iba01, found 492M

[SUCCESS ] Verifying that / has 20M in zdls-iba01, found 26M

[SUCCESS ] Service opensmd is running on InfiniBand Switch zdls-iba01

[SUCCESS ] NTP daemon is running on zdls-iba01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 14:38:06

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-iba01

[SUCCESS ] Verifying that the patchmgr host zerosita01.zero.flisk.net is recognized on the InfiniBand Switch zdls-iba01 through getHostByName

[SUCCESS ] Execute plugin check for Patch Check Prereq on zdls-iba01

[INFO ] Finished pre-update validation on zdls-iba01

[SUCCESS ] Pre-update validation on zdls-iba01

[INFO ] Package will be downloaded at firmware update time via scp

[SUCCESS ] Execute plugin check for Patching on zdls-iba01

[INFO ] Starting upgrade on zdls-iba01 to 2.2.14_1. Please give upto 15 mins for the process to complete. DO NOT INTERRUPT or HIT CTRL+C during the upgrade

[INFO ] Rebooting zdls-iba01 to complete the firmware update. Wait for 15 minutes before continuing. DO NOT MANUALLY REBOOT THE INFINIBAND SWITCH

[SUCCESS ] Load firmware 2.2.14_1 onto zdls-iba01

[SUCCESS ] Verify that /conf/configvalid is set to 1 on zdls-iba01

[INFO ] Set SMPriority to 5 on zdls-iba01

[INFO ] Starting post-update validation on zdls-iba01

[SUCCESS ] Service opensmd is running on InfiniBand Switch zdls-iba01

[SUCCESS ] NTP daemon is running on zdls-iba01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 14:54:47

[INFO ] /conf/configvalid is 1

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-iba01

[SUCCESS ] Execute plugin check for Post Patch on zdls-iba01

[INFO ] Finished post-update validation on zdls-iba01

[SUCCESS ] Post-update validation on zdls-iba01

[SUCCESS ] Update InfiniBand switch zdls-iba01 to 2.2.14_1

[INFO ] ---------- Starting with InfiniBand Switch zdls-ibb01

[WARNING ] Infiniband switch meets minimal version requirements, but downgrade is only available to 2.2.13-2 with the current package.

To downgrade to other versions:

- Manually download the InfiniBand switch firmware package to the patch directory

- Set export variable "EXADATA_IMAGE_IBSWITCH_DOWNGRADE_VERSION" to the appropriate version

- Run patchmgr command to initiate downgrade.

[SUCCESS ] Verify SSH access to the patchmgr host zerosita01.zero.flisk.net from the InfiniBand Switch zdls-ibb01.

[INFO ] Starting pre-update validation on zdls-ibb01

[SUCCESS ] Verifying that /tmp has 150M in zdls-ibb01, found 492M

[SUCCESS ] Verifying that / has 20M in zdls-ibb01, found 26M

[SUCCESS ] Service opensmd is running on InfiniBand Switch zdls-ibb01

[SUCCESS ] NTP daemon is running on zdls-ibb01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 14:55:24

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-ibb01

[SUCCESS ] Verifying that the patchmgr host zerosita01.zero.flisk.net is recognized on the InfiniBand Switch zdls-ibb01 through getHostByName

[SUCCESS ] Execute plugin check for Patch Check Prereq on zdls-ibb01

[INFO ] Finished pre-update validation on zdls-ibb01

[SUCCESS ] Pre-update validation on zdls-ibb01

[INFO ] Package will be downloaded at firmware update time via scp

[SUCCESS ] Execute plugin check for Patching on zdls-ibb01

[INFO ] Starting upgrade on zdls-ibb01 to 2.2.14_1. Please give upto 15 mins for the process to complete. DO NOT INTERRUPT or HIT CTRL+C during the upgrade

[INFO ] Rebooting zdls-ibb01 to complete the firmware update. Wait for 15 minutes before continuing. DO NOT MANUALLY REBOOT THE INFINIBAND SWITCH

[SUCCESS ] Load firmware 2.2.14_1 onto zdls-ibb01

[SUCCESS ] Verify that /conf/configvalid is set to 1 on zdls-ibb01

[INFO ] Set SMPriority to 5 on zdls-ibb01

[INFO ] Starting post-update validation on zdls-ibb01

[SUCCESS ] Service opensmd is running on InfiniBand Switch zdls-ibb01

[SUCCESS ] NTP daemon is running on zdls-ibb01.

[INFO ] Manually validate the following entries Date:(YYYY-MM-DD) 2020-03-04 Time:(HH:MM:SS) 15:12:05

[INFO ] /conf/configvalid is 1

[INFO ] Validating the current firmware on the InfiniBand Switch

[SUCCESS ] Firmware verification on InfiniBand switch zdls-ibb01

[SUCCESS ] Execute plugin check for Post Patch on zdls-ibb01

[INFO ] Finished post-update validation on zdls-ibb01

[SUCCESS ] Post-update validation on zdls-ibb01

[SUCCESS ] Update InfiniBand switch zdls-ibb01 to 2.2.14_1

[INFO ] InfiniBand Switches ( zdls-iba01 zdls-ibb01 ) updated to 2.2.14_1

[SUCCESS ] Overall status

----- InfiniBand switch update process ended 2020-03-04 15:05:48 +0100 -----

2020-03-04 15:05:48 +0100 1 of 1 :SUCCESS: Upgrade InfiniBand switch(es) to 2.2.14-1.

2020-03-04 15:05:48 +0100 :SUCCESS: Completed run of command: ./patchmgr -ibswitches /root/ib_group -upgrade

2020-03-04 15:05:48 +0100 :INFO : upgrade attempted on nodes in file /root/ib_group: [zdls-iba01 zdls-ibb01]

2020-03-04 15:05:48 +0100 :INFO : For details, check the following files in /radump/exa_stack/patch_switch_19.3.2.0.0.191119:

2020-03-04 15:05:48 +0100 :INFO : - upgradeIBSwitch.log

2020-03-04 15:05:48 +0100 :INFO : - upgradeIBSwitch.trc

2020-03-04 15:05:48 +0100 :INFO : - patchmgr.stdout

2020-03-04 15:05:48 +0100 :INFO : - patchmgr.stderr

2020-03-04 15:05:48 +0100 :INFO : - patchmgr.log

2020-03-04 15:05:48 +0100 :INFO : - patchmgr.trc

2020-03-04 15:05:48 +0100 :INFO : Exit status:0

2020-03-04 15:05:48 +0100 :INFO : Exiting.

You have new mail in /var/spool/mail/root

[root@zerosita01 patch_switch_19.3.2.0.0.191119]# cd ..

[root@zerosita01 exa_stack]# dcli -l root -g /root/ib_group "version" |grep version

zdls-iba01: SUN DCS 36p version: 2.2.14-1

zdls-iba01: BIOS version: NUP1R918

zdls-ibb01: SUN DCS 36p version: 2.2.14-1

zdls-ibb01: BIOS version: NUP1R918

[root@zerosita01 exa_stack]#

As you can see, the patch uses the file “/root/ib_group”, which contains the switch hostnames, and patchmgr patches the switches one at a time. This one-by-one mode is needed because of the architecture of the IB network: one switch is the “master” of the network (it runs the master Subnet Manager shown in the log).

The patch process is simple: patchmgr copies the new version (through scp) to the switch, calls the upgrade script, and then reboots the switch to load the new version.

Startup cells services

After the success of the patch, we can start the cell services:

[root@zerosita01 exa_stack]# dcli -l root -g /root/cell_group "cellcli -e alter cell startup services all"

zerocell01:

zerocell01: Starting the RS, CELLSRV, and MS services...

zerocell01: Getting the state of RS services... running

zerocell01: Starting CELLSRV services...

zerocell01: The STARTUP of CELLSRV services was successful.

zerocell01: Starting MS services...

zerocell01: The STARTUP of MS services was successful.

zerocell02:

zerocell02: Starting the RS, CELLSRV, and MS services...

zerocell02: Getting the state of RS services... running

zerocell02: Starting CELLSRV services...

zerocell02: The STARTUP of CELLSRV services was successful.

zerocell02: Starting MS services...

zerocell02: The STARTUP of MS services was successful.

zerocell03:

zerocell03: Starting the RS, CELLSRV, and MS services...

zerocell03: Getting the state of RS services... running

zerocell03: Starting CELLSRV services...

zerocell03: The STARTUP of CELLSRV services was successful.

zerocell03: Starting MS services...

zerocell03: The STARTUP of MS services was successful.

zerocell04:

zerocell04: Starting the RS, CELLSRV, and MS services...

zerocell04: Getting the state of RS services... running

zerocell04: Starting CELLSRV services...

zerocell04: The STARTUP of CELLSRV services was successful.

zerocell04: Starting MS services...

zerocell04: The STARTUP of MS services was successful.

zerocell05:

zerocell05: Starting the RS, CELLSRV, and MS services...

zerocell05: Getting the state of RS services... running

zerocell05: Starting CELLSRV services...

zerocell05: The STARTUP of CELLSRV services was successful.

zerocell05: Starting MS services...

zerocell05: The STARTUP of MS services was successful.

zerocell06:

zerocell06: Starting the RS, CELLSRV, and MS services...

zerocell06: Getting the state of RS services... running

zerocell06: Starting CELLSRV services...

zerocell06: The STARTUP of CELLSRV services was successful.

zerocell06: Starting MS services...

zerocell06: The STARTUP of MS services was successful.

[root@zerosita01 exa_stack]# dcli -l root -g /root/cell_group "cellcli -e list cell"

zerocell01: zerocell01 online

zerocell02: zerocell02 online

zerocell03: zerocell03 online

zerocell04: zerocell04 online

zerocell05: zerocell05 online

zerocell06: zerocell06 online

[root@zerosita01 exa_stack]# dcli -l root -g /root/cell_group "cellcli -e list alerthistory"

[root@zerosita01 exa_stack]#

At the end, you can see that the cells report no errors (the alerthistory is empty).

3 – Patch Storage Cells

Patching the storage cells is similar: we use the patchmgr that is inside the downloaded patch, in the patch_19.3.2.0.0.191119 folder that we unzipped before.

[root@zerosita01 exa_stack]# ls -l

total 7577888

drwxrwxr-x 3 root root 4096 Nov 13 16:11 dbserver_patch_19.191113

-rw-rw-r-- 1 root root 1505257472 Nov 19 19:23 exadata_ol7_base_repo_19.3.2.0.0.191119.iso

-rw-r--r-- 1 root root 438739135 Mar 4 13:40 p21634633_193100_Linux-x86-64.zip

-rw-r--r-- 1 root root 1460815642 Mar 4 13:46 p30439212_193000_Linux-x86-64.zip

-rw-r--r-- 1 root root 2872128400 Mar 4 13:47 p30469711_193000_Linux-x86-64.zip

-rw-r--r-- 1 root root 1474890484 Mar 4 13:48 p30540336_193000_Linux-x86-64.zip

drwxrwxr-x 5 root root 4096 Nov 19 19:29 patch_19.3.2.0.0.191119

drwxrwxr-x 6 root root 4096 Mar 4 15:05 patch_switch_19.3.2.0.0.191119

-rw-rw-r-- 1 root root 264228 Nov 19 19:29 README.html

-rw-r--r-- 1 root root 31912 Mar 4 15:05 screenlog.0

[root@zerosita01 exa_stack]#

cleanup

I always like to start the patch with the cleanup option of patchmgr. It connects to every cell and removes any old files (left by a previous patch). It is a good way to start and avoid errors:

[root@zerosita01 exa_stack]# cd patch_19.3.2.0.0.191119
[root@zerosita01 patch_19.3.2.0.0.191119]#
[root@zerosita01 patch_19.3.2.0.0.191119]# ./patchmgr -cells /root/cell_group -cleanup
2020-03-04 16:08:06 +0100 :Working: Cleanup
2020-03-04 16:08:08 +0100 :SUCCESS: Cleanup
2020-03-04 16:08:09 +0100 :SUCCESS: Completed run of command: ./patchmgr -cells /root/cell_group -cleanup
2020-03-04 16:08:09 +0100 :INFO : Cleanup attempted on nodes in file /root/cell_group: [zerocell01 zerocell02 zerocell03 zerocell04 zerocell05 zerocell06]
2020-03-04 16:08:09 +0100 :INFO : Current image version on cell(s) is:
2020-03-04 16:08:09 +0100 :INFO : zerocell01: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : zerocell02: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : zerocell03: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : zerocell04: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : zerocell05: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : zerocell06: 19.2.3.0.0.190621
2020-03-04 16:08:09 +0100 :INFO : For details, check the following files in /radump/exa_stack/patch_19.3.2.0.0.191119:
2020-03-04 16:08:09 +0100 :INFO : - <cell_name>.log
2020-03-04 16:08:09 +0100 :INFO : - patchmgr.stdout
2020-03-04 16:08:09 +0100 :INFO : - patchmgr.stderr
2020-03-04 16:08:09 +0100 :INFO : - patchmgr.log
2020-03-04 16:08:09 +0100 :INFO : - patchmgr.trc
2020-03-04 16:08:09 +0100 :INFO : Exit status:0
2020-03-04 16:08:09 +0100 :INFO : Exiting.
[root@zerosita01 patch_19.3.2.0.0.191119]#

patch_check_prereq

The next step is to check the prerequisites for the patch (this tests free space and whether the current version allows a direct upgrade):

[root@zerosita01 patch_19.3.2.0.0.191119]# ./patchmgr -cells /root/cell_group -patch_check_prereq
2020-03-04 16:09:22 +0100 :Working: Check cells have ssh equivalence for root user. Up to 10 seconds per cell ...
2020-03-04 16:09:24 +0100 :SUCCESS: Check cells have ssh equivalence for root user.
2020-03-04 16:09:30 +0100 :Working: Initialize files. Up to 1 minute ...
2020-03-04 16:09:32 +0100 :Working: Setup work directory
2020-03-04 16:09:34 +0100 :SUCCESS: Setup work directory
2020-03-04 16:09:37 +0100 :SUCCESS: Initialize files.
2020-03-04 16:09:37 +0100 :Working: Copy, extract prerequisite check archive to cells. If required start md11 mismatched partner size correction. Up to 40 minutes ...
2020-03-04 16:09:52 +0100 :INFO : Wait correction of degraded md11 due to md partner size mismatch. Up to 30 minutes.
2020-03-04 16:09:53 +0100 :SUCCESS: Copy, extract prerequisite check archive to cells. If required start md11 mismatched partner size correction.
2020-03-04 16:09:53 +0100 :Working: Check space and state of cell services. Up to 20 minutes ...
2020-03-04 16:11:10 +0100 :SUCCESS: Check space and state of cell services.
2020-03-04 16:11:10 +0100 :Working: Check prerequisites on all cells. Up to 2 minutes ...
2020-03-04 16:11:13 +0100 :SUCCESS: Check prerequisites on all cells.
2020-03-04 16:11:13 +0100 :Working: Execute plugin check for Patch Check Prereq ...
2020-03-04 16:11:14 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22909764 v1.0.
2020-03-04 16:11:14 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:11:14 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 17854520 v1.3.
2020-03-04 16:11:14 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:11:14 +0100 :SUCCESS: No exposure to bug 17854520 with non-rolling patching
2020-03-04 16:11:14 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22468216 v1.0.
2020-03-04 16:11:14 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:11:14 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 22468216
2020-03-04 16:11:14 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 24625612 v1.0.
2020-03-04 16:11:14 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:11:14 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 24625612
2020-03-04 16:11:14 +0100 :SUCCESS: No exposure to bug 22896791 with non-rolling patching
2020-03-04 16:11:14 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22651315 v1.0.
2020-03-04 16:11:14 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:11:16 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 22651315
2020-03-04 16:11:17 +0100 :SUCCESS: Execute plugin check for Patch Check Prereq.
2020-03-04 16:11:17 +0100 :Working: Check ASM deactivation outcome. Up to 1 minute ...
2020-03-04 16:11:28 +0100 :SUCCESS: Check ASM deactivation outcome.
2020-03-04 16:11:28 +0100 :Working: check if MS SOFTWAREUPDATE is scheduled. up to 5 minutes...
2020-03-04 16:11:29 +0100 :NO ACTION NEEDED: No cells found with SOFTWAREUPDATE scheduled by MS
2020-03-04 16:11:30 +0100 :SUCCESS: check if MS SOFTWAREUPDATE is scheduled
2020-03-04 16:11:32 +0100 :SUCCESS: Completed run of command: ./patchmgr -cells /root/cell_group -patch_check_prereq
2020-03-04 16:11:32 +0100 :INFO : patch_prereq attempted on nodes in file /root/cell_group: [zerocell01 zerocell02 zerocell03 zerocell04 zerocell05 zerocell06]
2020-03-04 16:11:32 +0100 :INFO : Current image version on cell(s) is:
2020-03-04 16:11:32 +0100 :INFO : zerocell01: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : zerocell02: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : zerocell03: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : zerocell04: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : zerocell05: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : zerocell06: 19.2.3.0.0.190621
2020-03-04 16:11:32 +0100 :INFO : For details, check the following files in /radump/exa_stack/patch_19.3.2.0.0.191119:
2020-03-04 16:11:32 +0100 :INFO : - <cell_name>.log
2020-03-04 16:11:32 +0100 :INFO : - patchmgr.stdout
2020-03-04 16:11:32 +0100 :INFO : - patchmgr.stderr
2020-03-04 16:11:32 +0100 :INFO : - patchmgr.log
2020-03-04 16:11:32 +0100 :INFO : - patchmgr.trc
2020-03-04 16:11:32 +0100 :INFO : Exit status:0
2020-03-04 16:11:32 +0100 :INFO : Exiting.
[root@zerosita01 patch_19.3.2.0.0.191119]#
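Every patchmgr run above ends with an `Exit status:N` line, which is the quickest thing to look at before moving on to the patch itself. As a rough sketch (the helper names `patchmgr_exit_status` and `patchmgr_succeeded` are hypothetical, and it assumes you saved a copy of the terminal output to a file yourself), that line can be checked like this:

```shell
# Print the number from the last "Exit status:N" line of a captured
# patchmgr session read on stdin. Prints nothing if no such line exists.
# Both function names are hypothetical helpers for illustration.
patchmgr_exit_status() {
    sed -n 's/.*Exit status:\([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Succeed only if the captured session reports "Exit status:0".
patchmgr_succeeded() {
    [ "$(patchmgr_exit_status)" = "0" ]
}

# Possible usage, assuming the session was saved to prereq.out (made-up name):
#   patchmgr_succeeded < prereq.out && echo "prereq check passed"
```

This only reads the summary line; the individual `SUCCESS` and `INFO` lines still deserve a look when something behaves unexpectedly.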

patch

After a successful prerequisite check, we can apply the patch. For rolling mode, you need to add the “rolling” parameter when you call patchmgr. In that mode it patches the cells one by one and only moves on to the next cell once the ASM disk resync has finished. You also need to be aware of the EX54 error. If you hit this error, the best option is to apply the patch in rolling mode.
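To make the difference between the two modes concrete, here is a small sketch that prints the command line for each. The helper `cell_patch_cmd` is hypothetical, and the `-rolling` spelling of the flag is my assumption based on patchmgr's usage text; confirm it against the README shipped with your patch before relying on it.

```shell
# Print the patchmgr command line for patching storage cells.
# $1: cell group file (for example /root/cell_group)
# $2: mode, either "rolling" or "non-rolling"
# cell_patch_cmd is a hypothetical helper; the -rolling flag name is an
# assumption to verify in the patch README.
cell_patch_cmd() {
    group_file=$1
    mode=$2
    case "$mode" in
        rolling)     printf './patchmgr -cells %s -patch -rolling\n' "$group_file" ;;
        non-rolling) printf './patchmgr -cells %s -patch\n' "$group_file" ;;
        *)           echo "unknown mode: $mode" >&2; return 1 ;;
    esac
}

# Possible usage: print the command and review it before running it by hand.
#   cell_patch_cmd /root/cell_group non-rolling
```

The function only prints the command, so nothing is patched until you run the printed line yourself.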

The patch process is interesting because the patch copies the new image to a different MD device (the one that is not currently running) and changes the boot configuration to mount that MD device. So you do not upgrade the current system in place; you just switch the running image.

[root@zerosita01 patch_19.3.2.0.0.191119]# ./patchmgr -cells /root/cell_group -patch
********************************************************************************
NOTE Cells will reboot during the patch or rollback process.
NOTE For non-rolling patch or rollback, ensure all ASM instances using
NOTE the cells are shut down for the duration of the patch or rollback.
NOTE For rolling patch or rollback, ensure all ASM instances using
NOTE the cells are up for the duration of the patch or rollback.
WARNING Do not interrupt the patchmgr session.
WARNING Do not alter state of ASM instances during patch or rollback.
WARNING Do not resize the screen. It may disturb the screen layout.
WARNING Do not reboot cells or alter cell services during patch or rollback.
WARNING Do not open log files in editor in write mode or try to alter them.
NOTE All time estimates are approximate.
********************************************************************************
2020-03-04 16:11:57 +0100 :Working: Check cells have ssh equivalence for root user. Up to 10 seconds per cell ...
2020-03-04 16:11:59 +0100 :SUCCESS: Check cells have ssh equivalence for root user.
2020-03-04 16:12:05 +0100 :Working: Initialize files. Up to 1 minute ...
2020-03-04 16:12:07 +0100 :Working: Setup work directory
2020-03-04 16:12:41 +0100 :SUCCESS: Setup work directory
2020-03-04 16:12:44 +0100 :SUCCESS: Initialize files.
2020-03-04 16:12:44 +0100 :Working: Copy, extract prerequisite check archive to cells. If required start md11 mismatched partner size correction. Up to 40 minutes ...
2020-03-04 16:12:58 +0100 :INFO : Wait correction of degraded md11 due to md partner size mismatch. Up to 30 minutes.
2020-03-04 16:13:00 +0100 :SUCCESS: Copy, extract prerequisite check archive to cells. If required start md11 mismatched partner size correction.
2020-03-04 16:13:00 +0100 :Working: Check space and state of cell services. Up to 20 minutes ...
2020-03-04 16:14:15 +0100 :SUCCESS: Check space and state of cell services.
2020-03-04 16:14:15 +0100 :Working: Check prerequisites on all cells. Up to 2 minutes ...
2020-03-04 16:14:18 +0100 :SUCCESS: Check prerequisites on all cells.
2020-03-04 16:14:18 +0100 :Working: Copy the patch to all cells. Up to 3 minutes ...
2020-03-04 16:15:36 +0100 :SUCCESS: Copy the patch to all cells.
2020-03-04 16:15:38 +0100 :Working: Execute plugin check for Patch Check Prereq ...
2020-03-04 16:15:38 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22909764 v1.0.
2020-03-04 16:15:38 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:15:38 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 17854520 v1.3.
2020-03-04 16:15:38 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:15:38 +0100 :SUCCESS: No exposure to bug 17854520 with non-rolling patching
2020-03-04 16:15:38 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22468216 v1.0.
2020-03-04 16:15:38 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:15:39 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 22468216
2020-03-04 16:15:39 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 24625612 v1.0.
2020-03-04 16:15:39 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:15:39 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 24625612
2020-03-04 16:15:39 +0100 :SUCCESS: No exposure to bug 22896791 with non-rolling patching
2020-03-04 16:15:39 +0100 :INFO : Patchmgr plugin start: Prereq check for exposure to bug 22651315 v1.0.
2020-03-04 16:15:39 +0100 :INFO : Details in logfile /radump/exa_stack/patch_19.3.2.0.0.191119/patchmgr.stdout.
2020-03-04 16:15:41 +0100 :SUCCESS: Patchmgr plugin complete: Prereq check passed for the bug 22651315
2020-03-04 16:15:42 +0100 :SUCCESS: Execute plugin check for Patch Check Prereq.
2020-03-04 16:15:42 +0100 :Working: check if MS SOFTWAREUPDATE is scheduled. up to 5 minutes...
2020-03-04 16:15:43 +0100 :NO ACTION NEEDED: No cells found with SOFTWAREUPDATE scheduled by MS
2020-03-04 16:15:44 +0100 :SUCCESS: check if MS SOFTWAREUPDATE is scheduled
2020-03-04 16:15:52 +0100 1 of 5 :Working: Initiate patch on cells. Cells will remain up. Up to 5 minutes ...
2020-03-04 16:15:58 +0100 1 of 5 :SUCCESS: Initiate patch on cells.
2020-03-04 16:15:58 +0100 2 of 5 :Working: Waiting to finish pre-reboot patch actions. Cells will remain up. Up to 45 minutes ...
2020-03-04 16:16:58 +0100 :INFO : Wait for patch pre-reboot procedures
2020-03-04 16:18:46 +0100 2 of 5 :SUCCESS: Waiting to finish pre-reboot patch actions.
2020-03-04 16:18:47 +0100 :Working: Execute plugin check for Patching ...
2020-03-04 16:18:47 +0100 :SUCCESS: Execute plugin check for Patching.
2020-03-04 16:18:47 +0100 3 of 5 :Working: Finalize patch on cells. Cells will reboot. Up to 5 minutes ...
2020-03-04 16:18:59 +0100 3 of 5 :SUCCESS: Finalize patch on cells.
2020-03-04 16:19:16 +0100 4 of 5 :Working: Wait for cells to reboot and come online. Up to 120 minutes ...
2020-03-04 16:20:16 +0100 :INFO : Wait for patch finalization and reboot
2020-03-04 16:51:25 +0100 4 of 5 :SUCCESS: Wait for cells to reboot and come online.
2020-03-04 16:51:26 +0100 5 of 5 :Working: Check the state of patch on cells. Up to 5 minutes ...
2020-03-04 16:52:40 +0100 5 of 5 :SUCCESS: Check the state of patch on cells.
2020-03-04 16:52:40 +0100 :Working: Execute plugin check for Pre Disk Activation ...
2020-03-04 16:52:41 +0100 :SUCCESS: Execute plugin check for Pre Disk Activation.
2020-03-04 16:52:41 +0100 :Working: Activate grid disks...
2020-03-04 16:52:42 +0100 :INFO : Wait for checking and activating grid disks
2020-03-04 16:54:27 +0100 :SUCCESS: Activate grid disks.
2020-03-04 16:54:31 +0100 :Working: Execute plugin check for Post Patch ...
2020-03-04 16:54:32 +0100 :SUCCESS: Execute plugin check for Post Patch.
2020-03-04 16:54:33 +0100 :Working: Cleanup
2020-03-04 16:55:08 +0100 :SUCCESS: Cleanup
2020-03-04 16:55:10 +0100 :SUCCESS: Completed run of command: ./patchmgr -cells /root/cell_group -patch
2020-03-04 16:55:10 +0100 :INFO : patch attempted on nodes in file /root/cell_group: [zerocell01 zerocell02 zerocell03 zerocell04 zerocell05 zerocell06]
2020-03-04 16:55:10 +0100 :INFO : Current image version on cell(s) is:
2020-03-04 16:55:10 +0100 :INFO : zerocell01: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : zerocell02: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : zerocell03: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : zerocell04: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : zerocell05: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : zerocell06: 19.3.2.0.0.191119
2020-03-04 16:55:10 +0100 :INFO : For details, check the following files in /radump/exa_stack/patch_19.3.2.0.0.191119:
2020-03-04 16:55:10 +0100 :INFO : - <cell_name>.log
2020-03-04 16:55:10 +0100 :INFO : - patchmgr.stdout
2020-03-04 16:55:10 +0100 :INFO : - patchmgr.stderr
2020-03-04 16:55:10 +0100 :INFO : - patchmgr.log
2020-03-04 16:55:10 +0100 :INFO : - patchmgr.trc
2020-03-04 16:55:10 +0100 :INFO : Exit status:0
2020-03-04 16:55:10 +0100 :INFO : Exiting.
[root@zerosita01 patch_19.3.2.0.0.191119]#

Post-patch

After the patch completes successfully, we can check the running version and whether every cell is up.

[root@zerosita01 patch_19.3.2.0.0.191119]# dcli -l root -g /root/cell_group "imageinfo" |grep "Active image version"
zerocell01: Active image version: 19.3.2.0.0.191119
zerocell02: Active image version: 19.3.2.0.0.191119
zerocell03: Active image version: 19.3.2.0.0.191119
zerocell04: Active image version: 19.3.2.0.0.191119
zerocell05: Active image version: 19.3.2.0.0.191119
zerocell06: Active image version: 19.3.2.0.0.191119
[root@zerosita01 patch_19.3.2.0.0.191119]#
[root@zerosita01 patch_19.3.2.0.0.191119]# dcli -l root -g /root/cell_group "cellcli -e list cell"
zerocell01: zerocell01 online
zerocell02: zerocell02 online
zerocell03: zerocell03 online
zerocell04: zerocell04 online
zerocell05: zerocell05 online
zerocell06: zerocell06 online
[root@zerosita01 patch_19.3.2.0.0.191119]#
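Comparing version strings by eye works for six cells, but a filter is safer on a bigger rack. The sketch below is not part of the original procedure; `hosts_with_wrong_image` is a hypothetical helper that reads the grep output shown above and prints any host whose version differs from the one you expect.

```shell
# Read `dcli ... "imageinfo" | grep "... image version"` output on stdin and
# print every host whose version differs from the expected one.
# $1: expected image version, for example 19.3.2.0.0.191119
# hosts_with_wrong_image is a hypothetical helper name.
hosts_with_wrong_image() {
    awk -v want="$1" '
        /[Ii]mage version:/ && $NF != want {
            host = $1
            sub(/:$/, "", host)   # drop the trailing colon that dcli adds
            print host
        }'
}

# Possible usage (prints nothing when every cell is on the target version):
#   dcli -l root -g /root/cell_group "imageinfo" | grep "Active image version" \
#       | hosts_with_wrong_image 19.3.2.0.0.191119
```

The pattern accepts both "Active image version:" and "Image version:" labels, so the same helper can be pointed at either kind of output.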

Exit the screen session and remove the screen package (to avoid an error when applying the patch to the database servers):

[root@zerosita01 patch_19.3.2.0.0.191119]# cd ..
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# exit
[screen is terminating]
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# rpm -qa |grep screen
screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# rpm -e screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita01 exa_stack]#

4 – Patch Database Servers

For the database servers, I always prefer to patch node 1 first and the other nodes afterwards. To do that, we copy the files to another node that has SSH key access to node 1 (if it does not, you can use another machine or configure the access now). You can also run this from an external machine if you want.

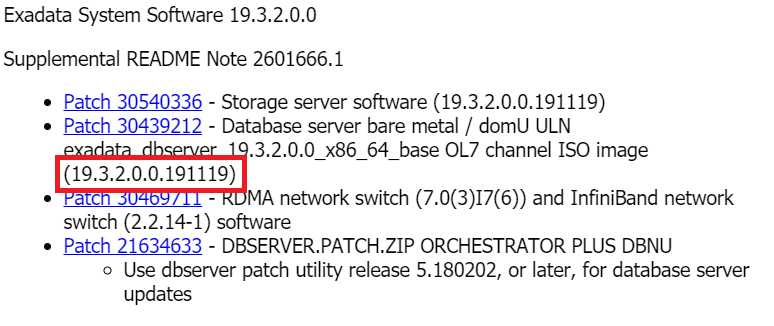

Before starting the patch, it is important to point out some details. First, the patch is applied by the database server patch orchestrator (basically a standalone patchmgr). Second, for database servers we need to specify the target version (the version that we want to patch to). This is the Exadata Channel version, and it can be found in the link description of the patch (marked in red):

From Node 2 to Node 1

Preparing the other node

We need to prepare the other node so that it can patch node 1. We copy the patches, unzip them, install screen (and start a session), and unmount all NFS shares (if mounted).

[root@zerosita02 ~]# cd /radump/
[root@zerosita02 radump]#
[root@zerosita02 radump]# mkdir exa_stack
[root@zerosita02 radump]#
[root@zerosita02 radump]# cd exa_stack/
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/patchmgr/p21634633_193100_Linux-x86-64.zip ./
[root@zerosita02 exa_stack]# cp /zfs/ZDLRA_PATCHING/19.2.1.1.1/19.2.1.1.1-201910-30614042/EXA-STACK/dbnode/p30439212_193000_Linux-x86-64.zip ./
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# unzip p21634633_193100_Linux-x86-64.zip
Archive: p21634633_193100_Linux-x86-64.zip
creating: dbserver_patch_19.191113/
inflating: dbserver_patch_19.191113/README.txt
inflating: dbserver_patch_19.191113/md5sum_files.lst
inflating: dbserver_patch_19.191113/patchReport.py
inflating: dbserver_patch_19.191113/cellboot_usb_pci_path
inflating: dbserver_patch_19.191113/dcli
inflating: dbserver_patch_19.191113/exadata.img.env
extracting: dbserver_patch_19.191113/dbnodeupdate.zip
creating: dbserver_patch_19.191113/linux.db.rpms/
inflating: dbserver_patch_19.191113/exadata.img.hw
inflating: dbserver_patch_19.191113/patchmgr
inflating: dbserver_patch_19.191113/ExadataSendNotification.pm
inflating: dbserver_patch_19.191113/ExadataImageNotification.pl
inflating: dbserver_patch_19.191113/patchmgr_functions
inflating: dbserver_patch_19.191113/imageLogger
inflating: dbserver_patch_19.191113/ExaXMLNode.pm
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# ls -l
total 1856868
drwxrwxr-x 3 root root 4096 Mar 5 12:30 dbserver_patch_19.191113
-rw-r--r-- 1 root root 438739135 Mar 5 12:20 p21634633_193100_Linux-x86-64.zip
-rw-r--r-- 1 root root 1460815642 Mar 5 12:20 p30439212_193000_Linux-x86-64.zip
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# rpm -Uvh /zfs/ZDLRA_PATCHING/19.2.1.1.1/screen-4.1.0-0.25.20120314git3c2946.el7.x86_64.rpm
Preparing... ################################# [100%]
Updating / installing...
1:screen-4.1.0-0.25.20120314git3c29################################# [100%]
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# screen -L -RR NODE1-From-Node2-Upgrade
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# umount /zfs
[root@zerosita02 exa_stack]#
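The patchmgr calls that follow take a node list file through `-dbnodes`, and the session above does not show that file being created. As a hedged sketch, assuming the usual dcli-style format of one hostname per line, a hypothetical helper `write_node_group` could create it like this:

```shell
# Write one hostname per line to a group file for patchmgr -dbnodes.
# $1: path of the group file; remaining arguments: hostnames.
# write_node_group is a hypothetical helper; the one-host-per-line format
# is an assumption based on how dcli group files are normally written.
write_node_group() {
    group_file=$1
    shift
    [ "$#" -gt 0 ] || { echo "no hosts given" >&2; return 1; }
    printf '%s\n' "$@" > "$group_file"
}

# Possible usage for the single-node list used when patching node 1:
#   write_node_group /root/db_node01 zeroadm01
```

Keeping node 1 in its own file makes it harder to patch the node you are running patchmgr from by accident.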

Precheck

So, from node 2 we run the prechecks against node 1 (the zip is the patch that was downloaded, and the target version is the one for the chosen patch):

[root@zerosita02 dbserver_patch_19.191113]# ./patchmgr -dbnodes /root/db_node01 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -precheck
************************************************************************************************************
NOTE patchmgr release: 19.191113 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
NOTE
WARNING Do not interrupt the patchmgr session.
WARNING Do not resize the screen. It may disturb the screen layout.
WARNING Do not reboot database nodes during update or rollback.
WARNING Do not open logfiles in write mode and do not try to alter them.
************************************************************************************************************
2020-03-05 12:41:59 +0100 :Working: Verify SSH equivalence for the root user to zeroadm01
2020-03-05 12:41:59 +0100 :SUCCESS: Verify SSH equivalence for the root user to zeroadm01
2020-03-05 12:42:00 +0100 :Working: Initiate precheck on 1 node(s)
2020-03-05 12:45:34 +0100 :Working: Check free space on zeroadm01
2020-03-05 12:45:37 +0100 :SUCCESS: Check free space on zeroadm01
2020-03-05 12:46:07 +0100 :Working: dbnodeupdate.sh running a precheck on node(s).
2020-03-05 12:47:49 +0100 :SUCCESS: Initiate precheck on node(s).
2020-03-05 12:47:49 +0100 :SUCCESS: Completed run of command: ./patchmgr -dbnodes /root/db_node01 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -precheck
2020-03-05 12:47:50 +0100 :INFO : Precheck attempted on nodes in file /root/db_node01: [zeroadm01]
2020-03-05 12:47:50 +0100 :INFO : Current image version on dbnode(s) is:
2020-03-05 12:47:50 +0100 :INFO : zeroadm01: 19.2.3.0.0.190621
2020-03-05 12:47:50 +0100 :INFO : For details, check the following files in /radump/exa_stack/dbserver_patch_19.191113:
2020-03-05 12:47:50 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
2020-03-05 12:47:50 +0100 :INFO : - patchmgr.log
2020-03-05 12:47:50 +0100 :INFO : - patchmgr.trc
2020-03-05 12:47:50 +0100 :INFO : Exit status:0
2020-03-05 12:47:50 +0100 :INFO : Exiting.
[root@zerosita02 dbserver_patch_19.191113]#
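The precheck and the upgrade use exactly the same arguments and differ only in the last flag, so it can help to build both from one place and avoid a typo in the zip path or target version. This is only a sketch; `dbnode_patch_cmd` is a hypothetical helper, and the flags it prints are the ones used in this post.

```shell
# Print the patchmgr command line for a database-node action.
# $1: node list file        $2: ISO repository zip
# $3: target version        $4: action, "precheck" or "upgrade"
# dbnode_patch_cmd is a hypothetical helper name.
dbnode_patch_cmd() {
    case "$4" in
        precheck|upgrade) ;;
        *) echo "unknown action: $4" >&2; return 1 ;;
    esac
    printf './patchmgr -dbnodes %s -iso_repo %s -target_version %s -%s\n' \
        "$1" "$2" "$3" "$4"
}

# Possible usage: print both commands up front, then run them one at a time.
#   dbnode_patch_cmd /root/db_node01 /radump/exa_stack/p30439212_193000_Linux-x86-64.zip 19.3.2.0.0.191119 precheck
#   dbnode_patch_cmd /root/db_node01 /radump/exa_stack/p30439212_193000_Linux-x86-64.zip 19.3.2.0.0.191119 upgrade
```

As with any generated command, read the printed line before executing it.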

upgrade

If the prechecks pass, we patch node 1 (from node 2):

[root@zerosita02 dbserver_patch_19.191113]# ./patchmgr -dbnodes /root/db_node01 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -upgrade
************************************************************************************************************
NOTE patchmgr release: 19.191113 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
NOTE
NOTE Database nodes will reboot during the update process.
NOTE
WARNING Do not interrupt the patchmgr session.
WARNING Do not resize the screen. It may disturb the screen layout.
WARNING Do not reboot database nodes during update or rollback.
WARNING Do not open logfiles in write mode and do not try to alter them.
************************************************************************************************************
2020-03-05 12:52:12 +0100 :Working: Verify SSH equivalence for the root user to zeroadm01
2020-03-05 12:52:13 +0100 :SUCCESS: Verify SSH equivalence for the root user to zeroadm01
2020-03-05 12:52:14 +0100 :Working: Initiate prepare steps on node(s).
2020-03-05 12:52:14 +0100 :Working: Check free space on zeroadm01
2020-03-05 12:52:18 +0100 :SUCCESS: Check free space on zeroadm01
2020-03-05 12:52:50 +0100 :SUCCESS: Initiate prepare steps on node(s).
2020-03-05 12:52:50 +0100 :Working: Initiate update on 1 node(s).
2020-03-05 12:52:50 +0100 :Working: dbnodeupdate.sh running a backup on 1 node(s).
2020-03-05 12:57:34 +0100 :SUCCESS: dbnodeupdate.sh running a backup on 1 node(s).
2020-03-05 12:57:34 +0100 :Working: Initiate update on node(s)
2020-03-05 12:57:35 +0100 :Working: Get information about any required OS upgrades from node(s).
2020-03-05 12:57:45 +0100 :SUCCESS: Get information about any required OS upgrades from node(s).
2020-03-05 12:57:45 +0100 :Working: dbnodeupdate.sh running an update step on all nodes.
2020-03-05 13:12:17 +0100 :INFO : zeroadm01 is ready to reboot.
2020-03-05 13:12:17 +0100 :SUCCESS: dbnodeupdate.sh running an update step on all nodes.
2020-03-05 13:12:26 +0100 :Working: Initiate reboot on node(s)
2020-03-05 13:12:28 +0100 :SUCCESS: Initiate reboot on node(s)
2020-03-05 13:12:28 +0100 :Working: Waiting to ensure zeroadm01 is down before reboot.
2020-03-05 13:12:52 +0100 :SUCCESS: Waiting to ensure zeroadm01 is down before reboot.
2020-03-05 13:12:52 +0100 :Working: Waiting to ensure zeroadm01 is up after reboot.
2020-03-05 13:15:46 +0100 :SUCCESS: Waiting to ensure zeroadm01 is up after reboot.
2020-03-05 13:15:46 +0100 :Working: Waiting to connect to zeroadm01 with SSH. During Linux upgrades this can take some time.
2020-03-05 13:35:10 +0100 :SUCCESS: Waiting to connect to zeroadm01 with SSH. During Linux upgrades this can take some time.
2020-03-05 13:35:10 +0100 :Working: Wait for zeroadm01 is ready for the completion step of update.
2020-03-05 13:35:10 +0100 :SUCCESS: Wait for zeroadm01 is ready for the completion step of update.
2020-03-05 13:35:10 +0100 :Working: Initiate completion step from dbnodeupdate.sh on node(s)
2020-03-05 13:42:37 +0100 :SUCCESS: Initiate completion step from dbnodeupdate.sh on zeroadm01
2020-03-05 13:42:50 +0100 :SUCCESS: Initiate update on node(s).
2020-03-05 13:42:51 +0100 :SUCCESS: Initiate update on 0 node(s).
[INFO ] Collected dbnodeupdate diag in file: Diag_patchmgr_dbnode_upgrade_050320125211.tbz
-rw-r--r-- 1 root root 2397370 Mar 5 13:42 Diag_patchmgr_dbnode_upgrade_050320125211.tbz
2020-03-05 13:42:52 +0100 :SUCCESS: Completed run of command: ./patchmgr -dbnodes /root/db_node01 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -upgrade
2020-03-05 13:42:52 +0100 :INFO : Upgrade attempted on nodes in file /root/db_node01: [zeroadm01]
2020-03-05 13:42:52 +0100 :INFO : Current image version on dbnode(s) is:
2020-03-05 13:42:52 +0100 :INFO : zeroadm01: 19.3.2.0.0.191119
2020-03-05 13:42:52 +0100 :INFO : For details, check the following files in /radump/exa_stack/dbserver_patch_19.191113:
2020-03-05 13:42:52 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
2020-03-05 13:42:52 +0100 :INFO : - patchmgr.log
2020-03-05 13:42:52 +0100 :INFO : - patchmgr.trc
2020-03-05 13:42:52 +0100 :INFO : Exit status:0
2020-03-05 13:42:52 +0100 :INFO : Exiting.
You have new mail in /var/spool/mail/root
[root@zerosita02 dbserver_patch_19.191113]#

Post-patch of Node 1

If the patch was OK, we can finish up on node 2. This means exiting the screen session and removing the screen package:

[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# rpm -qa |grep screen
screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]#
[root@zerosita02 exa_stack]# rpm -e screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita02 exa_stack]#

From Node 1 to Node 2 (or others)

Preparing node 1

Since we upgraded node 1 above, we need to reinstall screen and create the session. Note that you may need a different rpm version if you upgraded, for example, from OEL 6 to OEL 7:

[root@zerosita01 ~]# cd /radump/exa_stack/
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# rpm -Uvh /zfs/ZDLRA_PATCHING/19.2.1.1.1/screen-4.1.0-0.25.20120314git3c2946.el7.x86_64.rpm
Preparing... ################################# [100%]
Updating / installing...
1:screen-4.1.0-0.25.20120314git3c29################################# [100%]
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# umount /zfs/
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# screen -L -RR NODE2-From-Node1-Upgrade
[root@zerosita01 exa_stack]#

Precheck

We need to precheck the other nodes to look for inconsistencies. In this scenario, the file contains the list of the other nodes (just one):

[root@zerosita01 dbserver_patch_19.191113]# ./patchmgr -dbnodes /root/db_group_zeroadm02 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -precheck
************************************************************************************************************
NOTE patchmgr release: 19.191113 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
NOTE
WARNING Do not interrupt the patchmgr session.
WARNING Do not resize the screen. It may disturb the screen layout.
WARNING Do not reboot database nodes during update or rollback.
WARNING Do not open logfiles in write mode and do not try to alter them.
************************************************************************************************************
2020-03-05 13:57:04 +0100 :Working: Verify SSH equivalence for the root user to zeroadm02
2020-03-05 13:57:05 +0100 :SUCCESS: Verify SSH equivalence for the root user to zeroadm02
2020-03-05 13:57:06 +0100 :Working: Initiate precheck on 1 node(s)
2020-03-05 14:00:39 +0100 :Working: Check free space on zeroadm02
2020-03-05 14:00:42 +0100 :SUCCESS: Check free space on zeroadm02
2020-03-05 14:01:12 +0100 :Working: dbnodeupdate.sh running a precheck on node(s).
2020-03-05 14:02:54 +0100 :SUCCESS: Initiate precheck on node(s).
2020-03-05 14:02:54 +0100 :SUCCESS: Completed run of command: ./patchmgr -dbnodes /root/db_group_zeroadm02 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -precheck
2020-03-05 14:02:55 +0100 :INFO : Precheck attempted on nodes in file /root/db_group_zeroadm02: [zeroadm02]
2020-03-05 14:02:55 +0100 :INFO : Current image version on dbnode(s) is:
2020-03-05 14:02:55 +0100 :INFO : zeroadm02: 19.2.3.0.0.190621
2020-03-05 14:02:55 +0100 :INFO : For details, check the following files in /radump/exa_stack/dbserver_patch_19.191113:
2020-03-05 14:02:55 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
2020-03-05 14:02:55 +0100 :INFO : - patchmgr.log
2020-03-05 14:02:55 +0100 :INFO : - patchmgr.trc
2020-03-05 14:02:55 +0100 :INFO : Exit status:0
2020-03-05 14:02:55 +0100 :INFO : Exiting.
You have new mail in /var/spool/mail/root
[root@zerosita01 dbserver_patch_19.191113]#

upgrade

If it is OK, we can do the upgrade:

[root@zerosita01 dbserver_patch_19.191113]# ./patchmgr -dbnodes /root/db_group_zeroadm02 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -upgrade
************************************************************************************************************
NOTE patchmgr release: 19.191113 (always check MOS 1553103.1 for the latest release of dbserver.patch.zip)
NOTE
NOTE Database nodes will reboot during the update process.
NOTE
WARNING Do not interrupt the patchmgr session.
WARNING Do not resize the screen. It may disturb the screen layout.
WARNING Do not reboot database nodes during update or rollback.
WARNING Do not open logfiles in write mode and do not try to alter them.
************************************************************************************************************
2020-03-05 14:14:24 +0100 :Working: Verify SSH equivalence for the root user to zeroadm02
2020-03-05 14:14:25 +0100 :SUCCESS: Verify SSH equivalence for the root user to zeroadm02
2020-03-05 14:14:26 +0100 :Working: Initiate prepare steps on node(s).
2020-03-05 14:14:26 +0100 :Working: Check free space on zeroadm02
2020-03-05 14:14:30 +0100 :SUCCESS: Check free space on zeroadm02
2020-03-05 14:15:02 +0100 :SUCCESS: Initiate prepare steps on node(s).
2020-03-05 14:15:02 +0100 :Working: Initiate update on 1 node(s).
2020-03-05 14:15:02 +0100 :Working: dbnodeupdate.sh running a backup on 1 node(s).
2020-03-05 14:19:56 +0100 :SUCCESS: dbnodeupdate.sh running a backup on 1 node(s).
2020-03-05 14:19:56 +0100 :Working: Initiate update on node(s)
2020-03-05 14:19:56 +0100 :Working: Get information about any required OS upgrades from node(s).
2020-03-05 14:20:06 +0100 :SUCCESS: Get information about any required OS upgrades from node(s).
2020-03-05 14:20:06 +0100 :Working: dbnodeupdate.sh running an update step on all nodes.
2020-03-05 14:34:37 +0100 :INFO : zeroadm02 is ready to reboot.
2020-03-05 14:34:37 +0100 :SUCCESS: dbnodeupdate.sh running an update step on all nodes.
2020-03-05 14:34:44 +0100 :Working: Initiate reboot on node(s)
2020-03-05 14:34:47 +0100 :SUCCESS: Initiate reboot on node(s)
2020-03-05 14:34:47 +0100 :Working: Waiting to ensure zeroadm02 is down before reboot.
2020-03-05 14:35:11 +0100 :SUCCESS: Waiting to ensure zeroadm02 is down before reboot.
2020-03-05 14:35:11 +0100 :Working: Waiting to ensure zeroadm02 is up after reboot.
2020-03-05 14:38:04 +0100 :SUCCESS: Waiting to ensure zeroadm02 is up after reboot.
2020-03-05 14:38:04 +0100 :Working: Waiting to connect to zeroadm02 with SSH. During Linux upgrades this can take some time.
2020-03-05 15:05:13 +0100 :SUCCESS: Waiting to connect to zeroadm02 with SSH. During Linux upgrades this can take some time.
2020-03-05 15:05:13 +0100 :Working: Wait for zeroadm02 is ready for the completion step of update.
2020-03-05 15:05:13 +0100 :SUCCESS: Wait for zeroadm02 is ready for the completion step of update.
2020-03-05 15:05:14 +0100 :Working: Initiate completion step from dbnodeupdate.sh on node(s)
2020-03-05 15:12:39 +0100 :SUCCESS: Initiate completion step from dbnodeupdate.sh on zeroadm02
2020-03-05 15:12:53 +0100 :SUCCESS: Initiate update on node(s).
2020-03-05 15:12:53 +0100 :SUCCESS: Initiate update on 0 node(s).
[INFO ] Collected dbnodeupdate diag in file: Diag_patchmgr_dbnode_upgrade_050320141423.tbz
-rw-r--r-- 1 root root 2351811 Mar 5 15:12 Diag_patchmgr_dbnode_upgrade_050320141423.tbz
2020-03-05 15:12:54 +0100 :SUCCESS: Completed run of command: ./patchmgr -dbnodes /root/db_group_zeroadm02 -iso_repo /radump/exa_stack/p30439212_193000_Linux-x86-64.zip -target_version 19.3.2.0.0.191119 -upgrade
2020-03-05 15:12:54 +0100 :INFO : Upgrade attempted on nodes in file /root/db_group_zeroadm02: [zeroadm02]
2020-03-05 15:12:54 +0100 :INFO : Current image version on dbnode(s) is:
2020-03-05 15:12:54 +0100 :INFO : zeroadm02: 19.3.2.0.0.191119
2020-03-05 15:12:54 +0100 :INFO : For details, check the following files in /radump/exa_stack/dbserver_patch_19.191113:
2020-03-05 15:12:54 +0100 :INFO : - <dbnode_name>_dbnodeupdate.log
2020-03-05 15:12:54 +0100 :INFO : - patchmgr.log
2020-03-05 15:12:54 +0100 :INFO : - patchmgr.trc
2020-03-05 15:12:54 +0100 :INFO : Exit status:0
2020-03-05 15:12:54 +0100 :INFO : Exiting.
You have new mail in /var/spool/mail/root
[root@zerosita01 dbserver_patch_19.191113]#
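The patchmgr run above ends with an "Exit status:0" line. If the console output was saved to a file (for example from the screen session), that final status can be pulled out without scrolling through the whole log. This is only a minimal sketch: the helper name patchmgr_exit_status is hypothetical, and it assumes the saved output contains the same "Exit status:<n>" line shown above.

```shell
#!/bin/bash
# Hypothetical helper (not part of patchmgr): reads saved patchmgr output on
# stdin and prints the number from the last "Exit status:<n>" line.
# Prints nothing if no such line is present.
patchmgr_exit_status() {
    sed -n 's/.*Exit status:\([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Example (the file name is an assumption):
#   patchmgr_exit_status < /tmp/patchmgr_console.out
```

An empty result means the run did not reach its final summary, which is worth investigating before moving on to the next node.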

Post-patch of other nodes

After the patch finishes successfully, we can exit the screen session and remove the screen package:

[root@zerosita01 dbserver_patch_19.191113]# exit
[screen is terminating]
[root@zerosita01 exa_stack]# rpm -qa |grep screen
screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita01 exa_stack]# rpm -e screen-4.1.0-0.25.20120314git3c2946.el7.x86_64
[root@zerosita01 exa_stack]#

5 – Post-Patch

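The post-patch step is simple: we just check the versions that are running and confirm that the upgrade succeeded on all servers and switches (that is, each one reports the desired version). The same session also checks that the service LED is off on every storage cell and database server: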

[root@zerosita01 exa_stack]# dcli -l root -g /root/cell_group "imageinfo" |grep "Active image version"
zerocell01: Active image version: 19.3.2.0.0.191119
zerocell02: Active image version: 19.3.2.0.0.191119
zerocell03: Active image version: 19.3.2.0.0.191119
zerocell04: Active image version: 19.3.2.0.0.191119
zerocell05: Active image version: 19.3.2.0.0.191119
zerocell06: Active image version: 19.3.2.0.0.191119
[root@zerosita01 exa_stack]# dcli -l root -g /root/db_group "imageinfo" |grep "Active image version"
[root@zerosita01 exa_stack]# dcli -l root -g /root/db_group "imageinfo" |grep "Image version"
zerosita01: Image version: 19.3.2.0.0.191119
zerosita02: Image version: 19.3.2.0.0.191119
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# dcli -l root -g /root/ib_group "version" |grep "36p"
zdls-iba01: SUN DCS 36p version: 2.2.14-1
zdls-ibb01: SUN DCS 36p version: 2.2.14-1
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# dcli -l root -g /root/cell_group "ipmitool sunoem led get" |grep ": SERVICE"
zerocell01: SERVICE | OFF
zerocell02: SERVICE | OFF
zerocell03: SERVICE | OFF
zerocell04: SERVICE | OFF
zerocell05: SERVICE | OFF
zerocell06: SERVICE | OFF
[root@zerosita01 exa_stack]# dcli -l root -g /root/db_group "ipmitool sunoem led get" |grep ": SERVICE"
zerosita01: SERVICE | OFF
zerosita02: SERVICE | OFF
[root@zerosita01 exa_stack]#
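With many cells, comparing versions by eye gets error-prone. The check can be scripted so that any host not on the target version is flagged. The following is a minimal sketch, not part of the Exadata tooling: the function name check_image_versions is hypothetical, and it assumes the "host: label: version" line layout that dcli printed above, with the version as the last field.

```shell
#!/bin/bash
# Hypothetical helper: reads dcli-style lines ("host: <label>: <version>") on
# stdin and compares the last field of each line with the expected version.
# Prints one MISMATCH line per differing host.
# Exit code: 0 = all match, 1 = at least one mismatch, 2 = no input lines.
check_image_versions() {
    local expected="$1"
    awk -v want="$expected" '
        NF {
            n++
            host = $1
            sub(/:$/, "", host)      # first field is "host:", drop the colon
            got = $NF                # version is the last field on the line
            if (got != want) {
                printf "MISMATCH %s %s\n", host, got
                bad = 1
            }
        }
        END {
            if (n == 0) exit 2
            exit (bad ? 1 : 0)
        }
    '
}

# Example, using the group file and target version from this post:
#   dcli -l root -g /root/cell_group "imageinfo" | grep "Active image version" \
#       | check_image_versions 19.3.2.0.0.191119
```

The exit code 2 for empty input matters here: as the session above shows, a grep pattern that matches nothing (such as "Active image version" on the database servers) would otherwise look like a clean result.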

6 – Restart CRS and Cluster

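Since we stopped the cluster at step 1, we can now start CRS on each node and then the cluster. If crsctl start cluster -all returns CRS-4690 followed by CRS-4000, as in the output below, it only means that Clusterware was already running on those nodes: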

[root@zerosita01 exa_stack]# /u01/app/19.0.0.0/grid/bin/crsctl start crs
CRS-4123: Oracle High Availability Services has been started.
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# ssh -q zerosita02
Last login: Thu Mar 5 15:17:03 CET 2020 from 99.234.123.138 on ssh
Last login: Thu Mar 5 15:17:10 2020 from zerosita01.zero.flisk.net
[root@zerosita02 ~]#
[root@zerosita02 ~]# /u01/app/19.0.0.0/grid/bin/crsctl start crs
CRS-4123: Oracle High Availability Services has been started.
[root@zerosita02 ~]#
[root@zerosita02 ~]# logout
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# /u01/app/19.0.0.0/grid/bin/crsctl start cluster -all
[root@zerosita01 exa_stack]#
[root@zerosita01 exa_stack]# /u01/app/19.0.0.0/grid/bin/crsctl start cluster -all
CRS-4690: Oracle Clusterware is already running on 'zerosita01'
CRS-4690: Oracle Clusterware is already running on 'zerosita02'
CRS-4000: Command Start failed, or completed with errors.
[root@zerosita01 exa_stack]#
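Before moving on, it can help to confirm that the stack is really up on every node, for instance with crsctl check cluster -all. The sketch below filters that kind of output and fails if any CRS- message does not end with "is online". The function name crs_all_online is hypothetical, and the message layout (one "CRS-nnnn: ... is online" line per service and node) is an assumption about the output, so adapt the pattern to what your version prints.

```shell
#!/bin/bash
# Hypothetical helper: reads cluster check output on stdin and inspects every
# line that starts with a "CRS-<number>:" code.
# Exit code: 0 = every CRS line ends with "is online",
#            1 = at least one CRS line does not, 2 = no CRS lines seen.
crs_all_online() {
    awk '
        /^CRS-[0-9]+:/ {
            n++
            if ($0 !~ /is online[[:space:]]*$/) {
                print "NOT ONLINE: " $0
                bad = 1
            }
        }
        END {
            if (n == 0) exit 2
            exit (bad ? 1 : 0)
        }
    '
}

# Example, using the Grid home from this post:
#   /u01/app/19.0.0.0/grid/bin/crsctl check cluster -all | crs_all_online
```

CRS can take a few minutes to bring everything online after the start command, so a failure right after startup may only mean the check ran too early.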

Finishing Patch Apply

Once we have checked that everything is fine, that all the patches were applied (on the storage cells, database servers, and InfiniBand switches), and that there are no hardware errors (or alerts on the storage side), we can finish the patch.

For ZDLRA

If you are patching a ZDLRA, the operating system needs one more fix. ZDLRA requires some special OS parameters, and the upgrade leaves the OS at the vanilla Exadata configuration, so those settings have to be reapplied.

This is done with the script /opt/oracle.RecoveryAppliance/os/setup_os.sh, executed on both nodes: