Recently I tweeted about a new project with Oracle Engineered Systems (X9M), and it reminded me of what I have done with these systems until now. So, this opened the opportunity to tell my background and history working with these systems. This is not a show-off or ego-boost post.

Early Days

I started as a DBA between 2002 and 2003, working with Oracle 9i/10g during my university degree, and my first contact with Oracle was programming directly with OCI (Oracle Call Interface) using oci.h. There I worked with Oracle Spatial for an open-source project called MapServer, and you can still find my code kicking around. MapServer + Oracle Spatial was used by USACE during Hurricane Katrina in 2005. Around 2004 I started with more Oracle DBA stuff like modeling, SQL tuning, and administration tasks like Oracle RAC. I worked with PostgreSQL and PostGIS there too.

Later, in 2007, I started my Master's, and in 2008 I joined Softplan as a DBA, where I worked with several databases, including Oracle, Microsoft SQL Server, and DB2. I participated in several projects for several customers, with a focus on High Availability environments (RAC and Data Guard). I worked there full-time until 2010 and as an external specialist consultant until the end of 2015.

Oracle Engineered Systems

Exadata V2

On 10 September 2010, I joined the Court of Justice of Santa Catarina (TJSC) as a public employee, and there my work with Engineered Systems started. The nice part is that TJSC was the third Exadata customer in Brazil, and the first purely OLTP/DSS one at that time. We got the Exadata V2 (HP, half-rack) 15 days before I arrived (and it was not even powered on when I arrived).

The amazing part of that story is that Oracle Brazil at that time (and Oracle as well) didn’t know exactly what to do. I mean, the ACS team was not prepared, and the internal support team at Oracle was not prepared either. So, basically, we received the machine and a big “do it yourself”. Since the DBA team was me, my manager, and one other DBA (dealing with Caché Database), I was in charge of the Exadata project.

The project for TJSC was a huge consolidation move from around 100 databases into 8 databases (4 for Lower Courts and 4 for Upper Courts). All the paperwork done in each city court was moved to a paperless system, and this allowed society and lawyers to have direct access to the judicial processes and even to file appeals over the internet (lawyers, usually).

Because of the nature of the project, the consolidation, and the number of applications accessing the databases, IORM was fundamental. But remember what I said before: it was basically DIY in 2010 (for everything). So, I remember that I spent the first two months reading all the official Exadata docs, all the Metalink notes related to Exadata, and the small number of people publishing about Exadata at that time (Tanel Poder, as you can imagine).

Let me put this in perspective: for me, Exadata has three pillars: IORM, Smart Scan, and HCC. Apart from these features, you can do everything with other vendors/software. For Smart Scan, as an example, it is clear that only the Exadata software can understand Oracle queries, and there is nothing that other vendors can do about that. For HCC the principle is the same: compression is done by the Exadata software “reading” the content of Oracle database blocks, so only Oracle can do that. And IORM, for me, is THE underestimated feature (I will write a post just about it in the future), because when you use CATPLAN there is nothing on the market that lets you prioritize I/O at that level.

For the V2 project, as an example, an IORM plan using catplan (together with dbplan) made it possible to define that everything coming from the Desktop application (everything coming from Court workers) had higher I/O priority than what came from the Web. The point is that it was possible to prioritize desktop access whatever database it was going to. Think about the 4 databases that I mentioned before: Desktop and Web users can access all databases at the same time, and using IORM + RAC Services it was possible to designate one category for each type of access (mapping one service_name to one consumer group, each consumer group to one category, and each category to its respective entry in the IORM plan).
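
The mapping chain described above can be modeled in a few lines. This is only a sketch to make the idea concrete: it is not IORM or Resource Manager syntax, and every service name, consumer group, category, and share value here is hypothetical.

```python
# Hypothetical names and values throughout: this models the mapping chain
# (service -> consumer group -> category -> I/O share), not real IORM syntax.
SERVICE_TO_GROUP = {
    "svc_desktop": "CG_DESKTOP",
    "svc_web": "CG_WEB",
}
GROUP_TO_CATEGORY = {
    "CG_DESKTOP": "CAT_DESKTOP",
    "CG_WEB": "CAT_WEB",
}
# Assumed share of I/O per category (percent); desktop is favoured over web.
CATEGORY_SHARE = {"CAT_DESKTOP": 70, "CAT_WEB": 30}


def category_for(service_name: str) -> str:
    """Resolve the category through the consumer group of the service."""
    return GROUP_TO_CATEGORY[SERVICE_TO_GROUP[service_name]]


def io_share(database: str, service_name: str) -> int:
    """Return the I/O share for a session.

    The database argument is deliberately ignored: a category applies to the
    type of access, whatever database the session is connected to.
    """
    return CATEGORY_SHARE[category_for(service_name)]


for db in ("db1", "db2", "db3", "db4"):
    print(db, io_share(db, "svc_desktop"), io_share(db, "svc_web"))
```

The design point is in `io_share`: the priority follows the access type, so a desktop session gets the same treatment in every database.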

Using IORM with catplan, it was possible to meet the requirement “the internal users can’t stop, and they need the lowest possible latency”. If you compare with the traditional environment (db+storage), the best you can do there is prioritize whole databases against each other (like putting one database on flash drives, another on SAS, and others on SATA), but never based on the type of access (or within the database, at the storage level). And to be honest, I still can’t believe that Oracle is removing the catplan option. Oracle is killing the feature.

So, the Exadata V2 project delivered more than expected: we got a lot of savings due to smart scan, and we could meet the project requirements for access prioritization. But it was not just that; we also got a machine with no single point of failure (SPOF).

Exadata X2

After the success of the Exadata V2, we started to think about other databases that were not part of the core business (like HR, Legal Notice Archiving, and several others). For these, an Exadata X2 (HP, half-rack) was used.

The gains related to smart scan and the reliability of the environment (no SPOF, compared with the previous one) showed that continuing with Engineered Systems was the best choice.

Exadata X4

Around 2014 the core databases were demanding more (performance and space) because of the increased application usage (all migrations finished and all previous data imported). These databases were serving around 8,000 unique users per day (internal/desktop users), plus all web access (more than 100,000 requests per day).

Besides that, new regulations and the natural evolution of the system over the next years were coming. For this reason, a full-rack Exadata X4 was put in place. Once you are inside an Engineered Systems environment, it is difficult to move (or return) to a traditional environment without a huge drawback or impact. Think about IORM, Smart Scan, and all the other small features that you would leave behind. Remember, Exadata is not just hardware, it is software. It is not a lock-in solution; it simply works very well.

Exadata X5

One detail that you may have missed in this post is the paperless/digital idea of the project. Think about the previous “way of working”: all the judges and internal users created documents in Word (as an example) and later imported them into the application and into the database. The application was just a repository, because all the data existed on paper. If something was missing inside the application, they just needed to physically check the page. But now that everything was digital inside the application, how can you audit or even recover the information? If you need to crosscheck some data, how do you do that when there is no paper? And thinking about an outage, in case of a data center problem, how do you protect your data?

For Oracle, the only way to protect the environment and decrease the RPO to zero (smoothly) is using Data Guard. And if you want to sustain the same level of performance (in case of a switchover), the only way is to run the standby on the same kind of environment. So, using this principle, an Exadata X5 EF (Extreme Flash, full rack) was put in place.

The environment at that time was being accessed by more than 8,000 internal users and received more than 100,000 web accesses, daily. The numbers at that time were around 120,000 IOPS (collected on the database side), but with a BIG hidden detail: more than 95% of savings by smart scan. So, if we needed to return to a traditional environment, we would need to acquire a storage that delivers more than 1,000,000 IOPS just to hit the same required performance for ONE database (there were still 7 others). And I will tell you that prices in Brazil for this kind of storage are not cheap. And now, if you pick up the IOPS numbers for a full Exadata X4, you can see how much room for growth was still available.
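
One way to read these numbers is sketched below. It rests on two assumptions that are mine and not stated in the post: that the 120,000 IOPS are what remained after the smart scan savings, and that the 95% saving translates proportionally into IOPS that a traditional storage would have to serve. Under that reading the result lands well above the 1,000,000 IOPS mentioned.

```python
def required_backend_iops(observed_iops: int, saving_pct: int) -> int:
    """Estimate the IOPS a storage without offload would have to deliver.

    Assumption: observed_iops is what is left after saving_pct percent of
    the I/O was avoided, so the original demand is observed / (1 - saving).
    Integer arithmetic is used to keep the result exact.
    """
    if not 0 <= saving_pct < 100:
        raise ValueError("saving_pct must be between 0 and 99")
    return observed_iops * 100 // (100 - saving_pct)


# Numbers from the text: around 120,000 IOPS with 95% saved by smart scan.
print(required_backend_iops(120_000, 95))  # 2400000
```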

So, at the beginning of 2015, the Exadata X5 (EF, full rack) was put in place (the first EF in Brazil) to serve the primary databases. The Exadata X4 was moved to the secondary DC to host the standby databases. This met the requirement that (by law) “all data related to the judicial process needs to be in sync and have an external second copy”.

Remember the numbers above, with thousands of IOPS saved by smart scan: what do you think the impact on the primary will be if the standby can’t handle the same performance? This is one of the reasons that architecture in this kind of environment is (most of the time) not trivial. You need to check and understand the small details of the solutions and read the fine print.

Just to add, later in 2017 an Exadata X6 (HC, quarter rack) was added, cabled together with the Exadata X5 as a multi-rack configuration, and all databases from the Exadata X2 were migrated to this X5+X6. The idea was to replace the old X2 (which was passed to the Dev/TST/UAT environment) with the new Exadata X6 and provide additional space for the core databases, due to the archiving process for some historic tables (HCC plus other SecureFiles deduplication details).

ZDLRA

I remember that I was at Oracle Open World 2014 and saw the presentation/debut of the Zero Data Loss Recovery Appliance (ZDLRA) and understood how game-changing it was. Think about the main “problems” that you have with your backups today. What is the size of your database? What is the impact of doing a full backup regularly? For how long do you need to keep your backups? How do you manage long-term retention? How do you validate these backups? Are they OK against corruption (logical and physical)?

So, at that time (2014/2015) the core databases were using around 60TB of space and the backup solution was not providing the best performance. And it was not a bad technology: I was using EMC Data Domain with VTL and deduplication, but it was not reaching the expected performance anymore (size and deduplication). The effort to sustain the backup was HUGE: the endless testing, the monthly usage (more than 14 LTO6 tapes per week for backups and archive logs, because users kept updating the same data/process over the life of a judicial process and generated a lot of binary data like PDFs), and the low deduplication (due to the application behavior).

So, the release of ZDLRA came at a good time because we were studying a new backup solution. After talking with the ZDLRA PM and engineers we proposed a POC, and after that the solution was chosen. If you don’t know, ZDLRA provides a virtual full backup that works differently from deduplication because it can see/read/understand what is inside the RMAN backup sets. Merging the full level 0 with a level 1 generates one full backup for you that is validated against corruption (logical and physical, at database block level). And the next day, when you take a new level 1, it will generate a new level 0 automatically. So, think about the gains for your TB-size database: using small level 1 backups you can have a daily level 0 of the database. You can read more here.
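
The virtual full idea can be illustrated with a toy model. This is not how ZDLRA is implemented internally; it only assumes that a level 0 can be seen as a map of block number to block content and that a level 1 carries just the changed blocks. All names and block values are made up for the illustration.

```python
def apply_incremental(full: dict[int, str], incremental: dict[int, str]) -> dict[int, str]:
    """Build a new full image from a previous full plus the changed blocks.

    The previous full is left untouched, so every day keeps its own
    restorable full image.
    """
    merged = dict(full)         # copy the previous full image
    merged.update(incremental)  # changed or new blocks replace the old ones
    return merged


# Toy data: block number -> block content (hypothetical values).
level0 = {1: "A0", 2: "B0", 3: "C0"}
level1_day1 = {2: "B1"}            # only block 2 changed on day 1
level1_day2 = {3: "C2", 4: "D2"}   # block 3 changed and block 4 was added

full_day1 = apply_incremental(level0, level1_day1)
full_day2 = apply_incremental(full_day1, level1_day2)

print(full_day1)
print(full_day2)
```

Only the small level 1 travels from the database each day, yet a complete image exists for every day.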

But you know, backup is backup. If you took it yesterday, your RPO and data loss will be 1 day. But ZDLRA has another feature (for me, the most important one) called Real-Time Redo Transport, which means that ZDLRA can be a log_archive_dest for your database, allowing you to have zero RPO. Even for databases without Data Guard (and you don’t need to pay for a DG license to use this feature).

So, remember my environment: Exadata X5 as primary and Exadata X4 as standby for the core databases, Exadata X2 with the “satellite” databases (that are not core but consume data from there), plus all the legal requirements and the monthly growth. With two ZDLRAs, one in each DC and replicating to each other, it was possible to have zero RPO for all databases, even in case of a complete site outage and including databases without DG. For DG databases, each side was protected by the ZDLRA at the same site, and for non-DG databases, real-time redo provided zero RPO (and the ZDLRA replication provided multi-site protection).

This project was really nice because it allowed us to work together with Oracle and provide data that was used to improve the MAA architectures. This project was designed in 2014/2015, and at that time the MAA architecture did not even consider ZDLRA (check the old MAA reference here). The link for the OOW 2015 presentation about this project can be found here.

Luxembourg

In October 2017, I moved from Brazil to Luxembourg. Here I started as a consultant and helped some customers, some of them really strict about security and HA. Of course, all my previous background with Exadata, ZDLRA, MAA, RAC, and Data Guard helped in this process (it was the reason for the move).

Here I continued with the same kind of environment: multiple sites running Exadata and ZDLRA. And I added ODA to this list too. The requirements are different, but the idea behind them is the same: zero RPO and zero RTO.

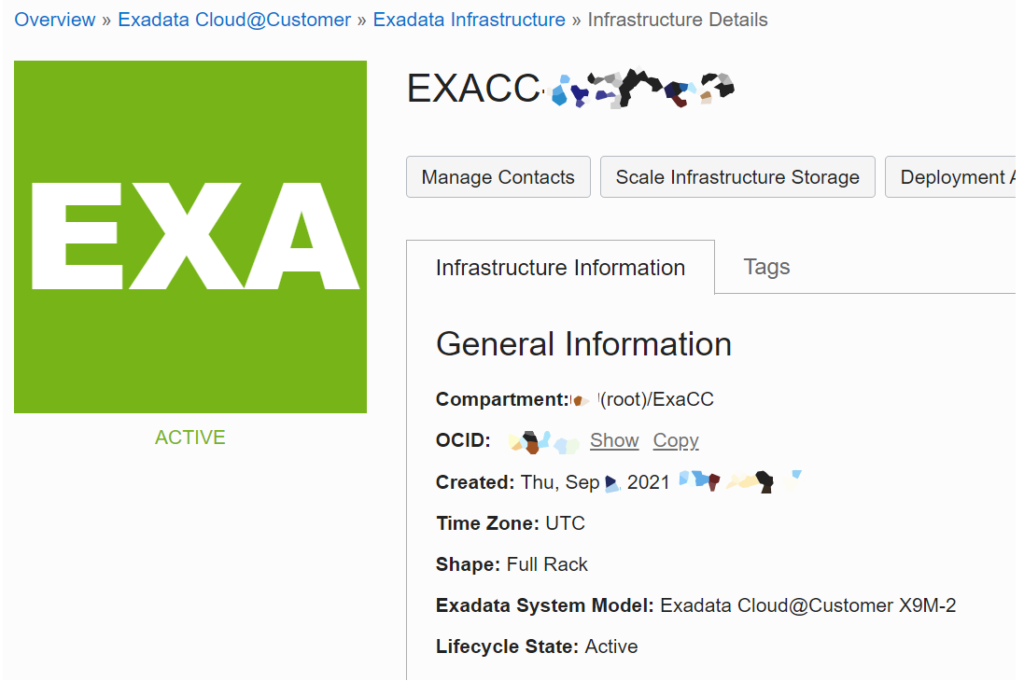

But (as you saw in my tweet) things are moving, the world is changing, and now the Cloud is there for everyone, so Exadata Cloud@Customer X9M arrived. And a new ZDLRA X9M too. As you can imagine, it will be multi-site for all of them as well. I can’t share more information due to the regulations in this area and, as you can imagine, the environment is quite secure, using MAA at its finest, but I will try to post something (stay tuned for that).

Architecture and what you need to understand

Working with Exadata is more than just creating databases and using smart scan. It is not like a traditional environment, where you don’t have this kind of technology and intelligence involved. I agree that I was lucky to be able to work with Exadata from V2 to X9M, ODA, and ZDLRA. This is not showing off; the idea is to share some points that maybe you have not noticed.

For Exadata you need to understand what is possible to do, you need to understand the features that you can use, and most importantly, how to integrate them with your databases. IORM with catplan is one example; there is nothing outside Exadata that can be compared to it. But it is a feature that is not easy (I would say that IORM with only a dbplan or cluster plan is easy), and when you link it with Database Resource Manager and RAC services you see the potential. Technically it is easy to implement (with a few commands you have everything in place), but understanding how it works and how to define it correctly takes time. And this is far more about architectural design and understanding than normal Senior DBA life.

But it is not just that: you can use the Exadata storage metrics to have a full view of what is happening. And again, reading metrics is easy; understanding what the numbers mean is the key. I already explained them in some previous posts (here, and here).

With ZDLRA, for me, the most important thing is not even the backup. As I wrote before, if you rely just on backup, and you took it just yesterday, you will have one day of data loss. Understanding how to use real-time redo together with databases + replication + Data Guard is the key to good architectural design. I already wrote about that here previously.

The point that I would like to make here is that you need to understand how to integrate the features. This will differentiate you from others. Just connecting to Exadata and calling cellcli will not make you a DMA (Database Machine Administrator); you can even be an OCM and not understand how to use Exadata or Engineered Systems. So, read the documentation, read MOS notes, read books about how to use Engineered Systems (and now read about OCI), but remember that they are Hardware + Software. Understanding how to use the Exadata software is more important than knowing the CPU speed of the X9M version.

Lessons Learned

Just because they are Engineered Systems does not mean that they are perfect all the time; a lot of things happened to me during these 11 years. If you have read everything until now, you can imagine that the beginning was not an easy road.

As I wrote before, the beginning was DIY: we even needed to open an SR to get a new version of the official docs. IORM was not perfect either. I remember opening an SR because I defined more than 9 categories for catplan and all cells generated errors. This occurred because no one at Oracle imagined that someone would use more than 9 categories. (By the way, the bug was solved a long time ago.)

HugePages usage for 11.2 (11.2.0.2 at that time): the report (“Large Pages Information” in the alert log when you start the database) was completely wrong. Bug opened and ER filed.

When I patched the Exadata X4 to the (then new) 12.2 release, patchmgr had changed its default pattern (undocumented at that time) and started to remove libraries (rpms) during the pre-check phase. I patched one day after the new release. The story was simple: the precheck changed and removed the rpms automatically to meet the default “exact”. For sure it was a mess.

The installation/upgrade of GI 12.2 froze because of the “NOREPAIR” disk check. Basically, the grid (without patch 25556203) checked each disk byte by byte during the rootupgrade script. And for a full Exadata X4 with 168 disks, this took a long time. If you go to note 2111010.1 and search for “March 14, 2017”, that entry is due to my SR/bug.

I had others as well, but don’t worry, all of them are solved now. The point is that Exadata/ZDLRA are always evolving. The patch process at the beginning was really different from how it is done now; everything evolved for the better. A lot of features were added as well; PMEM is the most recent example. And if you look at what is done in the traditional environment (db+storage), it is the same as what was done 20 years ago (some small new features and faster hardware, but the logic is the same).

ExaCC is evolving too. New features like node subset/subpartition and multi-VLAN will arrive. I see ExaCC now as Exadata was at the beginning, with a lot of room for improvement. But again, it is more important to understand how to correctly integrate (as an example) CPU scaling into your monitoring procedure than to know what cpu_count is.

And not just Exadata: if you think about MAA and ZDLRA, that was a significant improvement. Think about your DC right now. Where are your databases running? Maybe some on Exadata, some on HP storage, others on EMC. But if you need to quickly (because of a change in the law) provide zero RPO for everyone, what will you need to do? Buy Exadata+HP+EMC to use Data Guard and storage replication? Or a new DC? But if you put ZDLRA there, all of them can have zero RPO. One single solution that protects you, and you change nothing on your side (RMAN is the same, archivelogs as well), and everything is MAA compliant. Understanding this is the key. You can check more about that here.

To Finish

With this post I have not tried to show off or be selfish; I wanted to share with you my experience with Engineered Systems, since 2010 with Exadata and since 2014 with ZDLRA. If you look at the entire post above, I did not show you how to use ASM, smart scan, IORM, HCC, or how to do backups with ZDLRA. My idea was to show you that the key is to understand how to integrate all the features.

Another thing: never be afraid to use anything. You learn by doing. I have several stories about bugs, patches, and errors, but only because I tried to use things and to understand how they work. Never be afraid to open the logs from commands, and always review what you have done (and will do).

Disclaimer: “The postings on this site are my own and don’t necessarily represent my current employer’s positions, strategies, or opinions. The information here was edited to be useful for general purposes; specific data and identifications were removed to reach a generic audience and to be useful for the community. Post protected by copyright.”

Hello Simon,

I watched your presentation on ZDLRA and came away with some questions.

Does the ZDLRA machine have any oracle software installed on it?

Is the inbuilt recovery Catalog configured in a database?

I noticed you used a scan to create the wallet and add the credentials. Can you explain the syntax of that wallet creation command?

How do you create the VPC user? Is it done through the command line?

Hi,

Sorry for the late reply.

Yes, there is Oracle software running on ZDLRA. And it is not just the Oracle database, but a real library running on the ZDLRA side that can “understand and deconstruct” RMAN backup sets to read the database blocks inside them.

The database running there is also the rman catalog that can be used for all protected databases. It is ready for use, you just add it and later “register database” with the rman command.

Yes, you can use a scan for your wallet credential creation. I have no post directly for wallet, but you can read this one: https://www.fernandosimon.com/blog/how-to-use-zdlra-and-enroll-a-database/

The VPC user creation changed in the 19.x version of ZDLRA; now you use “/opt/oracle.RecoveryAppliance/bin/racli add vpc_user -username=USERNAME” to create the VPC user.

Feel free to check my posts about ZDLRA: https://www.fernandosimon.com/blog/category/engineeredsystems/zdlra/

Best regards.

Fernando Simon

Hi Fernando, I sent you a tweet yesterday too.

I have a prospect looking at a Veritas NetBackup 8, 9, or 10 configuration with the client installed on ZDLRA. Veritas seems to say that ZDLRA is not on their matrix… I’m working on that in order to promote an on-site customer certification…

Actually, the ZDLRAs are only on an allocated budget… the prospect is checking whether their solution will really be implemented… in the next months.

Can you help with a detailed config of the NetBackup client on ZDLRA? (What I mean is that you have probably experienced a similar config… you probably have some notes or advice on how to do that.) Thank you.

Enrico
