This is the second part of the ODA patch series, which goes from 18.3 to 19.8. I split the series into multiple parts, and you can use this second one as a direct guide to patch ODA from 18.8 to 19.6. Each part can be used alone since each covers all the needed steps. Parts of this post are similar to the upgrade from 18.3 to 18.8 that I described in my previous post.

The process of patching ODA is not complicated but requires attention at some steps. Version 19.6 was the first that could be reached by patching from 18.8, and it is the version that allows upgrades to newer releases. If you want to go directly to 19.5, you need to reimage the appliance. In this post, I cover the process that I recently followed to patch from 18.3 to 19.8.

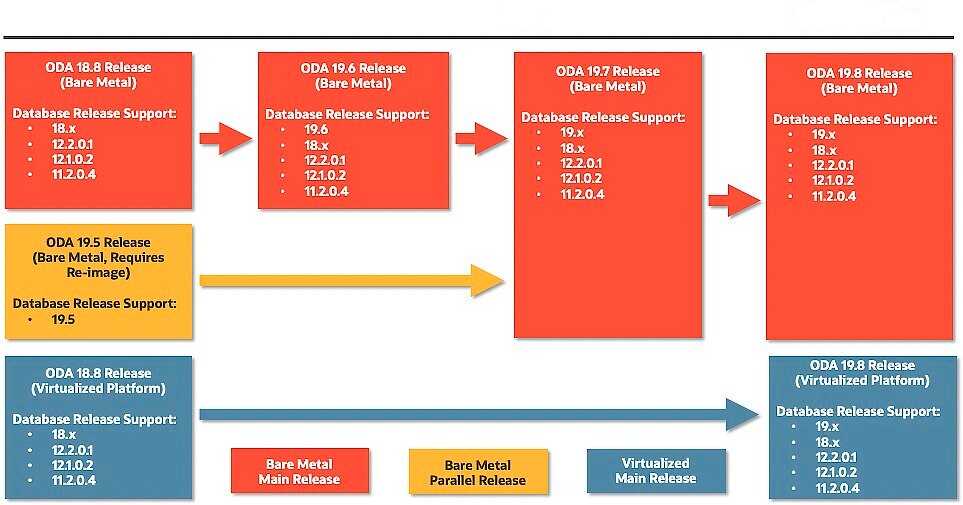

Patch Matrix

The matrix of what can be done can be found in this post from the ODA blog, and you can check it below:

Another important detail is to check the MOS note ODA: Quick Reference Matrix for Linux Release and Kernel by ODA Hardware Type and Version (Doc ID 2680219.1) and verify if your hardware is still compatible.

Remember that the ODA will reboot several times during this process, so you need to inform your teams that the databases will be unavailable.

Current environment

The environment is an ODA X5-2 running version 18.3. I reimaged this same environment last year, and you can see the steps that I followed in a previous post. It runs bare metal (BM).

The first thing to do is to check the current hardware for errors. If you find any, please fix them before starting. Another important detail is to verify whether your disks report smartctl warnings. To do that, execute smartctl -q errorsonly -H -l xerror /dev/DISK. If it reports “SMART Health Status: WARNING: ascq=0x97 [asc=b, ascq=97]” (or a similar warning), please open an SR to replace the disks. Even a warning can lead to an error during the patch.
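The disk check can be wrapped in a small loop. This is only a sketch under my own assumptions: the helper names has_smart_warning and check_disks are hypothetical, the device list is whatever you pass in, and the filter only matches the exact "SMART Health Status: WARNING" text quoted above, so other warning formats would need their own pattern.

```shell
#!/usr/bin/env bash
# Sketch: flag disks whose smartctl health output contains a WARNING line.

# Reads smartctl output on stdin; returns 0 if a health WARNING is present.
has_smart_warning() {
  grep -q 'SMART Health Status: WARNING'
}

# Hypothetical helper: run the check from the post on every disk given as
# an argument. The caller chooses the device list; it is not from the post.
check_disks() {
  local disk
  for disk in "$@"; do
    if smartctl -q errorsonly -H -l xerror "$disk" 2>&1 | has_smart_warning; then
      echo "WARNING on $disk: open an SR before patching"
    fi
  done
}

# Usage (as root, on each node): check_disks /dev/sda /dev/sdb
```

Nothing runs until check_disks is called, so the functions can be pasted into a shell session and tried on one disk first.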

I recommend saving some config files from BOTH nodes outside of the ODA (to have quick access in case you need them):

[root@odat1 ~]# mkdir /nfs/ODA_PATCH_18/bkp_files_odat1

[root@odat1 ~]# cp /etc/hosts /nfs/ODA_PATCH_18/bkp_files_odat1

[root@odat1 ~]# cp /etc/fstab /nfs/ODA_PATCH_18/bkp_files_odat1

[root@odat1 ~]# cp /etc/resolv.conf /nfs/ODA_PATCH_18/bkp_files_odat1

[root@odat1 ~]# cp /etc/sysconfig/network-scripts/* /nfs/ODA_PATCH_18/bkp_files_odat1/

cp: omitting directory `/etc/sysconfig/network-scripts/backupifcfgFiles'

cp: omitting directory `/etc/sysconfig/network-scripts/bkupIfcfgOrig'

[root@odat1 ~]#

[root@odat1 ~]# cp /u01/app/18.0.0.0/grid/network/admin/listener* /nfs/ODA_PATCH_18/bkp_files_odat1/

[root@odat1 ~]#
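Since the same copies have to be repeated on the second node, the commands above can be folded into one function. This is a minimal sketch, not a tool from Oracle: backup_files is a hypothetical name, and deriving the directory suffix from hostname -s is my assumption about how you would want to name the per-node folder.

```shell
#!/usr/bin/env bash
# Sketch: copy a list of config files into a per-node backup directory.

# backup_files DEST FILE...
# Copies each existing regular file into DEST. Directories and missing
# paths are skipped, which mirrors the "omitting directory" cp messages.
backup_files() {
  local dest=$1; shift
  mkdir -p "$dest" || return 1
  local f
  for f in "$@"; do
    if [ -f "$f" ]; then
      cp -p "$f" "$dest"/ || return 1
    fi
  done
}

# Usage on each node (paths are the ones used in the post):
#   node=$(hostname -s)
#   backup_files "/nfs/ODA_PATCH_18/bkp_files_${node}" \
#     /etc/hosts /etc/fstab /etc/resolv.conf \
#     /etc/sysconfig/network-scripts/* \
#     /u01/app/18.0.0.0/grid/network/admin/listener*
```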

Another detail before starting is to check the current components and running versions. To do that, we execute odacli describe-component. If needed, we update the components with odacli update-storage -v 19.6.0.0.0. This will reboot the ODA if some firmware is applied.

The Patch Process

The upgrade from 18.8 to 19.6 has two main parts. The first is to upgrade Linux from OEL 6 to OEL 7. The second is to update the ODA binaries (DCS and GI) themselves.

The first part is critical, and you may need to roll back in case of failure (for example, if you changed some parts of Linux, which is not recommended but can occur). To be able to restore, go to the MOS note ODA (Oracle Database Appliance): ODABR a System Backup/Restore Utility (Doc ID 2466177.1) and download ODABR. This RPM allows the patch process to generate LVM snapshots (or clones) and roll back if needed. You can do manual backups if needed (due to a lack of free PV space; I will talk about this later).

Unfortunately, NFS does not support xattr attribute changes, so keeping this kind of backup on NFS can be very tricky.

The idea is just to have it installed on both nodes. To do that, we just run rpm -Uvh on the downloaded package.

19.6

Update Repository

The first step is to upload the unzipped patch files (patch number 31010832) to the internal ODA repository. This is done using odacli update-repository, passing the full path to the unzipped patch files (here my files are in an NFS folder). This generates a jobId that you can follow until it ends. Since it is a big patch, you may receive a failure due to lack of space at /opt (DCS-10802:Insufficient disk space on file system: /tmp. Expected free space (MB): 22,467, available space (MB): 15,365). If you have free PV space, you can increase it:

[root@odat1 ~]# lvextend -L +10G /dev/VolGroupSys/LogVolOpt

Size of logical volume VolGroupSys/LogVolOpt changed from 80.00 GiB (2560 extents) to 90.00 GiB (2880 extents).

Logical volume VolGroupSys/LogVolOpt successfully resized.

[root@odat1 ~]#

[root@odat1 ~]# resize2fs /dev/VolGroupSys/LogVolOpt

resize2fs 1.42.9 (28-Dec-2013)

Filesystem at /dev/VolGroupSys/LogVolOpt is mounted on /opt; on-line resizing required

old_desc_blocks = 5, new_desc_blocks = 6

The filesystem on /dev/VolGroupSys/LogVolOpt is now 23592960 blocks long.

[root@odat1 ~]#
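To decide how much to extend, the two numbers from the DCS-10802 message are enough. The sketch below is only arithmetic on those values; extend_gb is a hypothetical helper name, and rounding up to whole GB is my own choice.

```shell
#!/usr/bin/env bash
# Sketch: turn the numbers from a DCS-10802 message into an lvextend size.

# extend_gb EXPECTED_MB AVAILABLE_MB
# Prints the whole number of GB needed to cover the shortfall (rounded up),
# or 0 when there is already enough space. Commas in the inputs are ignored.
extend_gb() {
  local expected=${1//,/} available=${2//,/}
  local shortfall=$(( expected - available ))
  if [ "$shortfall" -le 0 ]; then
    echo 0
  else
    echo $(( (shortfall + 1023) / 1024 ))
  fi
}

# With the values from the error message in this post:
#   extend_gb 22,467 15,365
```

For the message above the shortfall is 7,102 MB, so the function prints 7. The +10G that I used leaves some headroom on top of that.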

Calling the upload of files:

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli update-repository -f /nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server1of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server2of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server3of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server4of4.zip

{

"jobId" : "e942a1a8-1305-4b79-aef0-eea8dad8cef0",

"status" : "Created",

"message" : "/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server1of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server2of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server3of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server4of4.zip",

"reports" : [ ],

"createTimestamp" : "July 25, 2020 16:33:03 PM CEST",

"resourceList" : [ ],

"description" : "Repository Update",

"updatedTime" : "July 25, 2020 16:33:03 PM CEST"

}

[root@odat1 19.6]#

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli describe-job -i "e942a1a8-1305-4b79-aef0-eea8dad8cef0"

Job details

----------------------------------------------------------------

ID: e942a1a8-1305-4b79-aef0-eea8dad8cef0

Description: Repository Update

Status: Success

Created: July 25, 2020 4:33:03 PM CEST

Message: /nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server1of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server2of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server3of4.zip,/nfs/ODA_PATCH_18/19.6/oda-sm-19.6.0.0.0-200420-server4of4.zip

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

Check AvailableSpace July 25, 2020 4:33:05 PM CEST July 25, 2020 4:33:05 PM CEST Success

Setting up ssh equivalance July 25, 2020 4:33:07 PM CEST July 25, 2020 4:33:08 PM CEST Success

Copy BundleFile July 25, 2020 4:33:09 PM CEST July 25, 2020 4:39:02 PM CEST Success

Validating CopiedFile July 25, 2020 4:39:02 PM CEST July 25, 2020 4:39:49 PM CEST Success

Unzip bundle July 25, 2020 4:39:49 PM CEST July 25, 2020 5:05:10 PM CEST Success

Unzip bundle July 25, 2020 5:05:10 PM CEST July 25, 2020 5:08:18 PM CEST Success

Delete PatchBundles July 25, 2020 5:08:26 PM CEST July 25, 2020 5:08:29 PM CEST Success

Removing ssh keys July 25, 2020 5:08:29 PM CEST July 25, 2020 5:22:23 PM CEST Success

[root@odat1 19.6]#

DCSAgent

The next step is to update the internal DCS agent of the ODA, which is still at 18.8. This is a simple step done using odacli update-dcsagent. Note that version 19.6.0.0.0 is passed as the parameter:

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli update-dcsagent -v 19.6.0.0.0

{

"jobId" : "45d46df3-fdd8-44bf-a0ad-872237db2f20",

"status" : "Created",

"message" : "Dcs agent will be restarted after the update. Please wait for 2-3 mins before executing the other commands",

"reports" : [ ],

"createTimestamp" : "July 25, 2020 17:23:54 PM CEST",

"resourceList" : [ ],

"description" : "DcsAgent patching",

"updatedTime" : "July 25, 2020 17:23:54 PM CEST"

}

[root@odat1 19.6]#

And again, you can check the jobId and wait for it to finish:

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli describe-job -i "45d46df3-fdd8-44bf-a0ad-872237db2f20"

Job details

----------------------------------------------------------------

ID: 45d46df3-fdd8-44bf-a0ad-872237db2f20

Description: DcsAgent patching

Status: Success

Created: July 25, 2020 5:23:54 PM CEST

Message:

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

dcs-agent upgrade to version 19.6.0.0.0 July 25, 2020 5:23:56 PM CEST July 25, 2020 5:25:52 PM CEST Success

dcs-agent upgrade to version 19.6.0.0.0 July 25, 2020 5:25:52 PM CEST July 25, 2020 5:27:36 PM CEST Success

Update System version July 25, 2020 5:27:40 PM CEST July 25, 2020 5:27:40 PM CEST Success

Update System version July 25, 2020 5:27:40 PM CEST July 25, 2020 5:27:40 PM CEST Success

[root@odat1 19.6]#

As in the previous post about the 18.3 upgrade, don’t worry: this update restarts the DCS agent, and for a moment odacli will not work and can report errors (again, don’t worry, it comes back):

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli describe-job -i "45d46df3-fdd8-44bf-a0ad-872237db2f20"

DCS-10001:Internal error encountered: Fail to start hand shake to localhost:7070.

[root@odat1 19.6]#
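Instead of re-running describe-job by hand, the job can be polled in a loop that treats the temporary DCS-10001 error as "not ready yet". This is a sketch under assumptions: job_status and wait_for_job are hypothetical helpers, and the "Running" state name is my assumption (this post only shows "Created" and "Success" for jobs).

```shell
#!/usr/bin/env bash
# Sketch: poll an odacli job until it leaves the in-progress states.

ODACLI=/opt/oracle/dcs/bin/odacli

# Reads "odacli describe-job" output on stdin and prints the Status value.
# Prints nothing when there is no Status line (for example on DCS-10001).
job_status() {
  awk '$1 == "Status:" { print $2; exit }'
}

# wait_for_job JOB_ID [INTERVAL_SECONDS] [MAX_TRIES]
# Keeps polling while the agent is restarting or the job is in progress,
# then prints the final status. "Running" is an assumed state name.
wait_for_job() {
  local id=$1 interval=${2:-30} tries=${3:-60} status
  while [ "$tries" -gt 0 ]; do
    status=$("$ODACLI" describe-job -i "$id" 2>&1 | job_status)
    case "$status" in
      ""|Created|Running) sleep "$interval" ;;   # not finished, or agent down
      *) echo "$status"; return 0 ;;
    esac
    tries=$(( tries - 1 ))
  done
  echo "Gave up waiting for job $id" >&2
  return 1
}

# Usage: wait_for_job 45d46df3-fdd8-44bf-a0ad-872237db2f20
```

The loop is bounded by MAX_TRIES on purpose, so a node where odacli never comes back does not leave the script hanging forever.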

Create-prepatchreport – First Run

The next step is to check whether it is possible to upgrade the operating system. We do that using odacli create-prepatchreport -v 19.6.0.0.0 -os. Note the “-os” option. This is critical.

[root@odat1 19.6]# /opt/oracle/dcs/bin/odacli create-prepatchreport -v 19.6.0.0.0 -os

Job details

----------------------------------------------------------------

ID: 666f7269-7f9a-49b1-8742-2447e94fe54e

Description: Patch pre-checks for [OS]

Status: Created

Created: July 25, 2020 5:30:42 PM CEST

Message: Use 'odacli describe-prepatchreport -i 666f7269-7f9a-49b1-8742-2447e94fe54e' to check details of results

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

[root@odat1 19.6]#

And you can check the report:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-prepatchreport -i 666f7269-7f9a-49b1-8742-2447e94fe54e

Patch pre-check report

------------------------------------------------------------------------

Job ID: 666f7269-7f9a-49b1-8742-2447e94fe54e

Description: Patch pre-checks for [OS]

Status: FAILED

Created: July 25, 2020 5:30:42 PM CEST

Result: One or more pre-checks failed for [OS]

Node Name

---------------

odat1

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Failed Insufficient space to create LVM

snapshots on node: odat1. Expected

free space (GB): 190, available space

(GB): 164.

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Success Results stored in:

preupgrade checks '/root/preupgrade-results/

preupg_results-200725175724.tar.gz' .

Read complete report file

'/root/preupgrade/result.html' before

attempting OS upgrade.

Validate custom rpms installed Failed Custom RPMs installed. Please check

files

/root/oda-upgrade/

rpms-added-from-ThirdParty and/or

/root/oda-upgrade/

rpms-added-from-Oracle.

Scheduled jobs check Failed Scheduled jobs found. Disable

scheduled jobs before attempting OS

upgrade.

Node Name

---------------

odat2

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Failed Insufficient space to create LVM

snapshots on node: odat2. Expected

free space (GB): 190, available space

(GB): 164.

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Success Results stored in:

preupgrade checks '/root/preupgrade-results/

preupg_results-200725185704.tar.gz' .

Read complete report file

'/root/preupgrade/result.html' before

attempting OS upgrade.

Validate custom rpms installed Failed Custom RPMs installed. Please check

files

/root/oda-upgrade/

rpms-added-from-ThirdParty and/or

/root/oda-upgrade/

rpms-added-from-Oracle.

Scheduled jobs check Failed Scheduled jobs found. Disable

scheduled jobs before attempting OS

upgrade.

[root@odat1 ~]#

As you can see above, the report FAILED.
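The report is long, so it helps to pull out only the checks that failed. A minimal sketch, assuming the report keeps the layout shown above where the status word "Failed" appears as its own column; failed_checks is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list only the failed pre-checks from a pre-patch report.

# Reads "odacli describe-prepatchreport" output on stdin and prints the
# lines that carry the status word "Failed". The match is case-sensitive,
# so the overall "Status: FAILED" header line is not included.
# The exit status is non-zero when no check failed.
failed_checks() {
  grep -w 'Failed'
}

# Usage:
#   /opt/oracle/dcs/bin/odacli describe-prepatchreport \
#     -i 666f7269-7f9a-49b1-8742-2447e94fe54e | failed_checks
```

Only the first line of each wrapped comment is printed, which is enough to know which of the issues described next apply to your nodes.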

Known issue – Lack of space

For ODABR to be able to create the LVM snapshot, you need at least 190GB of unused space (on each node). If you don’t have this, you can create a manual snapshot and use “--force” during the call. But you need to run the patch report at least one time.

Validate LVM free space Failed Insufficient space to create LVM

snapshots on node: odat1. Expected

free space (GB): 190, available space

(GB): 164.

One way to free space is to reduce /u01 at this moment. To do that, you need to stop all databases (they should already be stopped), stop TFA (fundamental), stop CRS and HAS, and unmount the /u01 mountpoint. A reboot (which I recommend) may be needed to release files that are still in use.

To reduce, first run e2fsck:

[root@odat1 ~]# e2fsck -f /dev/mapper/VolGroupSys-LogVolU01

e2fsck 1.43-WIP (20-Jun-2013)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/mapper/VolGroupSys-LogVolU01: 6682323/11141120 files (0.7% non-contiguous), 30107055/44564480 blocks

[root@odat1 ~]#

After that, you can reduce the filesystem with resize2fs:

[root@odat1 ~]# resize2fs /dev/mapper/VolGroupSys-LogVolU01 130G

resize2fs 1.43-WIP (20-Jun-2013)

Resizing the filesystem on /dev/mapper/VolGroupSys-LogVolU01 to 34078720 (4k) blocks.

The filesystem on /dev/mapper/VolGroupSys-LogVolU01 is now 34078720 blocks long.

[root@odat1 ~]#

And after that, reduce the logical volume:

[root@odat1 ~]# lvreduce -L 130G /dev/mapper/VolGroupSys-LogVolU01

WARNING: Reducing active logical volume to 130.00 GiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce VolGroupSys/LogVolU01? [y/n]: y

Size of logical volume VolGroupSys/LogVolU01 changed from 170.00 GiB (5440 extents) to 130.00 GiB (4160 extents).

Logical volume LogVolU01 successfully resized.

[root@odat1 ~]#

[root@odat1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

LogVolOpt VolGroupSys -wi-ao---- 80.00g

LogVolRoot VolGroupSys -wi-ao---- 50.00g

LogVolSwap VolGroupSys -wi-ao---- 24.00g

LogVolU01 VolGroupSys -wi-a----- 130.00g

[root@odat1 ~]#

After that, you just mount the filesystem, enable CRS and HAS, and reboot both nodes.
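Before shrinking, it is worth confirming that the target size is above what the filesystem already uses, because the filesystem must be shrunk first and must never be larger than the logical volume. The sketch below only does the arithmetic on the e2fsck numbers; used_gib and safe_target are hypothetical helper names, and the 4 KiB block size comes from the "(4k) blocks" text in the resize2fs output.

```shell
#!/usr/bin/env bash
# Sketch: check that a shrink target is larger than the space in use.

# used_gib USED_BLOCKS BLOCK_SIZE_BYTES
# Prints the used space in GiB, rounded up.
used_gib() {
  local bytes=$(( $1 * $2 ))
  local gib=$(( 1024 * 1024 * 1024 ))
  echo $(( (bytes + gib - 1) / gib ))
}

# safe_target USED_BLOCKS BLOCK_SIZE_BYTES TARGET_GIB
# Returns 0 when the target is strictly above the used space.
safe_target() {
  [ "$3" -gt "$(used_gib "$1" "$2")" ]
}

# e2fsck in this post reported 30107055 used blocks of 4 KiB:
#   used_gib 30107055 4096
#   safe_target 30107055 4096 130 && echo "130G leaves room"
```

With my numbers the used space rounds up to 115 GiB, so the 130G target leaves roughly 15 GiB free in /u01. If your /u01 is fuller than mine, pick a larger target or clean up first.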

Known issue – Packages

It can occur that you installed some external packages. They need to be removed before the upgrade. The patch report informs you about it:

Validate custom rpms installed Failed Custom RPMs installed. Please check

files

/root/oda-upgrade/

rpms-added-from-ThirdParty and/or

/root/oda-upgrade/

rpms-added-from-Oracle.

They can be removed with a simple “rpm -e”. In my case it was iotop (I removed it from both nodes):

[root@odat1 ~]# cat /root/oda-upgrade/rpms-added-from-Oracle

iotop

[root@odat1 ~]#

[root@odat1 ~]# rpm -qa |grep iotop

iotop-0.3.2-9.el6.noarch

[root@odat1 ~]# rpm -e iotop-0.3.2-9.el6.noarch

[root@odat1 ~]# cat /root/oda-upgrade/rpms-added-from-ThirdParty

cat: /root/oda-upgrade/rpms-added-from-ThirdParty: No such file or directory

[root@odat1 ~]#
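If the lists are longer than one package, they can be read in one pass. This is a sketch, assuming each list file holds one package name per line as in my case; list_custom_rpms is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: collect the package names flagged by the pre-patch report.

# list_custom_rpms [DIR]
# Prints every non-empty line from the two list files, skipping a file
# that does not exist (the ThirdParty file was absent in my run).
list_custom_rpms() {
  local dir=${1:-/root/oda-upgrade} f
  for f in "$dir/rpms-added-from-Oracle" "$dir/rpms-added-from-ThirdParty"; do
    [ -f "$f" ] && grep -v '^[[:space:]]*$' "$f"
  done
  return 0
}

# Review the list first, then remove on each node, for example:
#   for pkg in $(list_custom_rpms); do rpm -e "$pkg"; done
```

I would still look at the list before removing anything, since a package may be there because another team depends on it.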

Known issue – ODA Schedules

The O.S. upgrade needs the schedules to be disabled beforehand. First, we list the schedules with odacli list-schedules:

[root@odat1 ~]# odacli list-schedules

ID Name Description CronExpression Disabled

---------------------------------------- ------------------------- -------------------------------------------------- ------------------------------ --------

e27ebb8c-e80e-4a42-b165-4582bc90c1d1 metastore maintenance internal metastore maintenance 0 0 0 1/1 * ? * true

a779445f-7a29-4d44-99ad-5a3dab0ddd7a AgentState metastore cleanup internal agentstateentry metastore maintenance 0 0 0 1/1 * ? * false

6603842a-a6fc-4f16-9aa1-127402a9c27e bom maintenance bom reports generation 0 0 1 ? * SUN * false

3eccdca9-72ee-4743-840d-9808d7e0967a Big File Upload Cleanup clean up expired big file uploads. 0 0 1 ? * SUN * false

f1507855-d881-444f-90a1-73d820746374 feature_tracking_job Feature tracking job 0 0 20 ? * WED * false

6576b40b-6560-4adb-ad27-259ccd1ec069 Log files Cleanup Auto log file purge bases on policy 0 0 3 1/1 * ? * false

[root@odat1 ~]#

After that, we disable every schedule whose Disabled column is false:

[root@odat1 ~]# odacli update-schedule -d -i a779445f-7a29-4d44-99ad-5a3dab0ddd7a

Update job schedule success

[root@odat1 ~]# odacli update-schedule -d -i 6603842a-a6fc-4f16-9aa1-127402a9c27e

Update job schedule success

[root@odat1 ~]# odacli update-schedule -d -i 3eccdca9-72ee-4743-840d-9808d7e0967a

Update job schedule success

[root@odat1 ~]# odacli update-schedule -d -i f1507855-d881-444f-90a1-73d820746374

Update job schedule success

[root@odat1 ~]# odacli update-schedule -d -i 6576b40b-6560-4adb-ad27-259ccd1ec069

Update job schedule success

[root@odat1 ~]# odacli list-schedules

ID Name Description CronExpression Disabled

---------------------------------------- ------------------------- -------------------------------------------------- ------------------------------ --------

e27ebb8c-e80e-4a42-b165-4582bc90c1d1 metastore maintenance internal metastore maintenance 0 0 0 1/1 * ? * true

a779445f-7a29-4d44-99ad-5a3dab0ddd7a AgentState metastore cleanup internal agentstateentry metastore maintenance 0 0 0 1/1 * ? * true

6603842a-a6fc-4f16-9aa1-127402a9c27e bom maintenance bom reports generation 0 0 1 ? * SUN * true

3eccdca9-72ee-4743-840d-9808d7e0967a Big File Upload Cleanup clean up expired big file uploads. 0 0 1 ? * SUN * true

f1507855-d881-444f-90a1-73d820746374 feature_tracking_job Feature tracking job 0 0 20 ? * WED * true

6576b40b-6560-4adb-ad27-259ccd1ec069 Log files Cleanup Auto log file purge bases on policy 0 0 3 1/1 * ? * true

[root@odat1 ~]#

If you reboot the ODA node again before the next execution of the patch report, you need to disable the schedules again.
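Because the schedules may need to be disabled more than once, a small filter saves copying IDs by hand. A minimal sketch, assuming the list-schedules layout shown above where the ID is the first column and Disabled is the last; enabled_schedule_ids is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: extract the IDs of schedules that are still enabled.

# Reads "odacli list-schedules" output on stdin and prints the ID (first
# column) of every row whose last column, Disabled, is "false".
enabled_schedule_ids() {
  awk '$NF == "false" { print $1 }'
}

# Usage on the node:
#   odacli list-schedules | enabled_schedule_ids | while read -r id; do
#     odacli update-schedule -d -i "$id"
#   done
```

The header and separator lines never end in "false", so they are ignored without any special handling.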

Create-prepatchreport – Success Run

After fixing the known issues, we can run the patch report again and see the success. We do that using odacli create-prepatchreport -v 19.6.0.0.0 -os, followed by odacli describe-prepatchreport:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli create-prepatchreport -v 19.6.0.0.0 -os

Job details

----------------------------------------------------------------

ID: 5da9f187-af89-4821-b7dc-7451da21761d

Description: Patch pre-checks for [OS]

Status: Created

Created: July 25, 2020 10:56:19 PM CEST

Message: Use 'odacli describe-prepatchreport -i 5da9f187-af89-4821-b7dc-7451da21761d' to check details of results

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

[root@odat1 ~]#

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-prepatchreport -i 5da9f187-af89-4821-b7dc-7451da21761d

Patch pre-check report

------------------------------------------------------------------------

Job ID: 5da9f187-af89-4821-b7dc-7451da21761d

Description: Patch pre-checks for [OS]

Status: RUNNING

Created: July 25, 2020 10:56:19 PM CEST

Result:

Node Name

---------------

odat1

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Success LVM free space: 204(GB) on node:odat1

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Success Results stored in:

preupgrade checks '/root/preupgrade-results/

preupg_results-200725233758.tar.gz' .

Read complete report file

'/root/preupgrade/result.html' before

attempting OS upgrade.

Validate custom rpms installed Scheduled

Scheduled jobs check Scheduled

Node Name

---------------

odat2

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Success LVM free space: 204(GB) on node:odat2

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Running

preupgrade checks

Validate custom rpms installed Scheduled

Scheduled jobs check Scheduled

[root@odat1 ~]#

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-prepatchreport -i 5da9f187-af89-4821-b7dc-7451da21761d

Patch pre-check report

------------------------------------------------------------------------

Job ID: 5da9f187-af89-4821-b7dc-7451da21761d

Description: Patch pre-checks for [OS]

Status: SUCCESS

Created: July 25, 2020 10:56:19 PM CEST

Result: All pre-checks succeeded

Node Name

---------------

odat1

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Success LVM free space: 204(GB) on node:odat1

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Success Results stored in:

preupgrade checks '/root/preupgrade-results/

preupg_results-200725233758.tar.gz' .

Read complete report file

'/root/preupgrade/result.html' before

attempting OS upgrade.

Validate custom rpms installed Success No additional RPMs found installed on

node:odat1.

Scheduled jobs check Success Verified scheduled jobs

Node Name

---------------

odat2

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__OS__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Validate if ODABR is installed Success Validated ODABR is installed

Validate if ODABR snapshots Success No ODABR snaps found on the node.

exist

Validate LVM free space Success LVM free space: 204(GB) on node:odat2

Space checks for OS upgrade Success Validated space checks.

Install OS upgrade software Success Extracted OS upgrade patches into

/root/oda-upgrade. Do not remove this

directory untill OS upgrade completes.

Verify OS upgrade by running Success Results stored in:

preupgrade checks '/root/preupgrade-results/

preupg_results-200726002222.tar.gz' .

Read complete report file

'/root/preupgrade/result.html' before

attempting OS upgrade.

Validate custom rpms installed Success No additional RPMs found installed on

node:odat2.

Scheduled jobs check Success Verified scheduled jobs

[root@odat1 ~]#

As you can see above, the report was OK, with no errors.

ILOM

To continue the patch, you need to call the upgrade from the console because it does not generate a job; it calls the O.S. upgrade process itself. Since the patch does not restart the ILOM, we can ssh to the ILOM (do not use ipmitool) and call start /SP/console to start the process. This is done for BOTH nodes.

Update-server

From inside the ILOM console, logged in to a node, we can call odacli update-server -v 19.6.0.0.0 -c os --local one node at a time (only start the second node if the first finishes with success):

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-server -v 19.6.0.0.0 -c os --local

Verifying OS upgrade

Current OS base version: 6 is lessthan target OS base version: 7

OS needs to upgrade to 7.7

*** Created ODABR snapshot ***

****************************************************************************

* Depending on the hardware platform, the upgrade operation, including *

* node reboot, may take 30-60 minutes to complete. Individual steps in *

* the operation may not show progress messages for a while. Do not abort *

* upgrade using ctrl-c or by rebooting the system. *

****************************************************************************

run: cmd= '[/usr/bin/expect, /root/upgradeos.exp]'

output redirected to /root/odaUpgrade2020-07-26_00-45-44.0420.log

Running pre-upgrade checks.

.........

Running assessment of the system

.....................................................................

The assessment finished.

...

Checking actions and results

.......................................................................................................................................

The tarball with results is stored in '/root/preupgrade-results/preupg_results-200726005634.tar.gz' .

The latest assessment is stored in the '/root/preupgrade' directory.

.

2020-07-26--00:56:37 - Creating directory /root/preupgrade/hooks/oda/postupgrade

2020-07-26--00:56:38 - Done installing upgrade tools and running pre-upgrade checks.

.......................

Running redhat-upgrade-tool

Running redhat-upgrade-tool

Running upgrade setups

..................

Setting up system for upgrade.

.

Now the system is ready for upgrade OS higher version

Reboot the node to finish the upgrade

Now the system is ready for upgrade OS higher version

Reboot the node to finish the upgrade

Running redhat-upgrade-tool

Return code: 0

****************************************************************************

* OS upgrade is successful. The node will reboot now. *

****************************************************************************

You have new mail in /var/spool/mail/root

[root@odat1 ~]#

Broadcast message from root@odat1

(unknown) at 0:58 ...

The system is going down for reboot NOW!

As you can see above, the node reboots after some time and completes the process:

[root@odat1 ~]#

Broadcast message from root@odat1

(unknown) at 0:58 ...

The system is going down for reboot NOW!

...

...

[ OK ] Created slice system-rdma\x2dload\x2dmodules.slice.

Starting Load RDMA modules from /etc/rdma/modules/infiniband.conf...

Starting Load RDMA modules from /etc/rdma/modules/rdma.conf...

Starting Load RDMA modules from /etc/rdma/modules/roce.conf...

[ OK ] Started Load RDMA modules from /etc/rdma/modules/infiniband.conf.

[FAILED[ 59.313816] ] upgrade-preFailed to start Load RDMA modules from /etc/rdma/modules/rdma.conf.[8180]:

Merge the copy with `/sysroot/bin'.

See 'systemctl status rdma-load-modules@rdma.service' for details.

[ 59.346424] upgrade-pre[8180]: Clean up duplicates in `/sysroot/usr/bin'.

[FAILED] Failed to start Load RDMA modules from /etc/rdma/modules/roce.conf.

[ 59.366246] upgrade-pre[8180]: Make a copy of `/sysroot/usr/sbin'.See 'systemctl status rdma-load-modules@roce.service' for details.

[ 59.376653] upgrade-pre[8180]: Merge the copy with `/sysroot/sbin'.

[ 59.401286] upgrade-pre[8180]: Clean up duplicates in `/sysroot/usr/sbin'.

[ 59.411810] upgrade-pre[8180]: Make a copy of `/sysroot/usr/lib'.

[ OK ] Reached target RDMA Hardware.

[ 60.810484] upgrade-pre[8180]: Merge the copy with `/sysroot/lib'.

...

...

[ 92.501335] upgrade[9171]: [23/779] (7%) installing tigervnc-icons-1.8.0-17.0.1.el7...

[ 92.539542] upgrade[9171]: [24/779] (8%) installing vim-filesystem-7.4.629-6.el7...

[ 92.576682] upgrade[9171]: [25/779] (8%) installing ipxe-roms-qemu-20180825-2.git133f4c.el7...

[ 92.650862] upgrade[9171]: [26/779] (8%) installing ncurses-base-5.9-14.20130511.el7_4...

[ 92.727926] upgrade[9171]: [27/779] (8%) installing nss-softokn-freebl-3.44.0-8.0.1.el7_7...

[ 92.815910] upgrade[9171]: [28/779] (8%) installing glibc-common-2.17-292.0.1.el7...

[ 100.296337] upgrade[9171]: [29/779] (8%) installing glibc-2.17-292.0.1.el7...

...

...

[ 562.308139] upgrade-post[57158]: Installing : perl-SNMP_Session-1.13-5.el7.noarch 317/325

[ 562.329945] upgrade-post[57158]: Installing : blktrace-1.0.5-9.el7.x86_64 318/325

[ 562.344964] upgrade-post[57158]: Installing : perl-Crypt-SSLeay-0.64-5.el7.x86_64 319/325

[ 562.359884] upgrade-post[57158]: Installing : perl-Perl4-CoreLibs-0.003-7.el7.noarch 320/325

[ 562.374875] upgrade-post[57158]: Installing : perl-Encode-Detect-1.01-13.el7.x86_64 321/325

[ 562.411242] upgrade-post[57158]: Installing : perl-File-Slurp-9999.19-6.el7.noarch 322/325

...

...

[ 649.278013] upgrade-post[57158]: Preparing... ########################################

[ 649.293346] upgrade-post[57158]: Updating / installing...

[ 649.396564] upgrade-post[57158]: oracle-hmp-hwmgmt-2.4.5.0.1-1.el7 ########################################

[ 649.415607] upgrade-post[57158]: Installing oracle-hmp-snmp-*.rpm

[ 649.499052] upgrade-post[57158]: Preparing... ########################################

[ 649.513352] upgrade-post[57158]: Updating / installing...

[ 649.539014] upgrade-post[57158]: oracle-hmp-snmp-2.4.5.0.1-1.el7 ########################################

...

...

[ 689.039337] upgrade-post[57158]: Installing oda-hw-mgmt-*.rpm

[ 689.049298] upgrade-post[57158]: Preparing... ########################################

[ 689.063309] upgrade-post[57158]: Pre Installation steps in progress ...

[ 689.072310] upgrade-post[57158]: Updating / installing...

...

...

[ 803.635767] upgrade-post[57158]: all upgrade scripts finished

[ 803.645130] upgrade-post[57158]: writing logs to disk and rebooting

[ 813.014640] reboot: Restarting system

...

...

[ OK ] Started LSB: Supports the direct execution of binary formats..

[ OK ] Started Xinetd A Powerful Replacement For Inetd.

[ OK ] Started Notify NFS peers of a restart.

Oracle Linux Server 7.7

Kernel 4.14.35-1902.11.3.1.el7uek.x86_64 on an x86_64

odat1 login:

The same upgrade call is done on node 2:

[root@odat2 ~]# /opt/oracle/dcs/bin/odacli update-server -v 19.6.0.0.0 -c os --local

Verifying OS upgrade

Current OS base version: 6 is lessthan target OS base version: 7

OS needs to upgrade to 7.7

*** Created ODABR snapshot ***

****************************************************************************

* Depending on the hardware platform, the upgrade operation, including *

* node reboot, may take 30-60 minutes to complete. Individual steps in *

* the operation may not show progress messages for a while. Do not abort *

* upgrade using ctrl-c or by rebooting the system. *

****************************************************************************

run: cmd= '[/usr/bin/expect, /root/upgradeos.exp]'

output redirected to /root/odaUpgrade2020-07-26_01-23-33.0734.log

Running pre-upgrade checks.

.........

Running assessment of the system

......................................................................

The assessment finished.

..

Checking actions and results

.........................................................................................................................................

The tarball with results is stored in '/root/preupgrade-results/preupg_results-200726013431.tar.gz' .

The latest assessment is stored in the '/root/preupgrade' directory.

.

2020-07-26--01:34:34 - Creating directory /root/preupgrade/hooks/oda/postupgrade

.

2020-07-26--01:34:35 - Done installing upgrade tools and running pre-upgrade checks.

...............

Running redhat-upgrade-tool

.

Running redhat-upgrade-tool

Running upgrade setups

...................

Setting up system for upgrade.

..

Now the system is ready for upgrade OS higher version

Reboot the node to finish the upgrade

Now the system is ready for upgrade OS higher version

Reboot the node to finish the upgrade

Running redhat-upgrade-tool

Return code: 0

****************************************************************************

* OS upgrade is successful. The node will reboot now. *

****************************************************************************

You have new mail in /var/spool/mail/root

[root@odat2 ~]#

Broadcast message from root@odat2

(unknown) at 1:36 ...

The system is going down for reboot NOW!

[ OK ] Started LSB: Supports the direct execution of binary formats..

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started Xinetd A Powerful Replacement For Inetd.

Oracle Linux Server 7.7

Kernel 4.14.35-1902.11.3.1.el7uek.x86_64 on an x86_64

odat2 login:

On each server, the file /root/odaUpgradeXXXXXXX.log contains the verbose output of the upgrade.
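To find the most recent of these logs quickly, a small helper like the one below could be used. It is only a sketch: the function name `latest_upgrade_log` is made up for this example, and it assumes the logs keep the `odaUpgrade*.log` naming seen in the output above.

```shell
# Print the newest odaUpgrade*.log in a directory (defaults to /root).
# Prints nothing if no such log exists.
latest_upgrade_log() {
    dir=${1:-/root}
    # ls -t sorts by modification time, newest first
    ls -1t "$dir"/odaUpgrade*.log 2>/dev/null | head -n 1
}
```

On a node, `less "$(latest_upgrade_log)"` would then open the log of the last upgrade attempt.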

At this point only the OS has been upgraded. The GI was not touched and, if you want, you can start the databases, but I recommend finishing the process first.

Post Upgrade

After the upgrade finishes on both nodes, we can check that everything went fine with the command odacli update-server-postcheck:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-server-postcheck -v 19.6.0.0.0

Upgrade post-check report

-------------------------

Node Name

---------------

odat1

Comp Pre-Check Status Comments

----- ------------------------------ -------- --------------------------------------

OS OS upgrade check SUCCESS OS has been upgraded to OL7

GI GI upgrade check INFO GI home needs to update to 19.6.0.0.200114

GI GI status check SUCCESS Clusterware is running on the node

OS ODABR snapshot WARNING ODABR snapshot found. Run 'odabr delsnap' to delete.

RPM Extra RPM check SUCCESS No extra RPMs found when OS was at OL6

Node Name

---------------

odat2

Comp Pre-Check Status Comments

----- ------------------------------ -------- --------------------------------------

OS OS upgrade check SUCCESS OS has been upgraded to OL7

GI GI upgrade check INFO GI home needs to update to 19.6.0.0.200114

GI GI status check SUCCESS Clusterware is running on the node

OS ODABR snapshot WARNING ODABR snapshot found. Run 'odabr delsnap' to delete.

RPM Extra RPM check SUCCESS No extra RPMs found when OS was at OL6

[root@odat1 ~]#

Above we can see some warnings; the most important one comes from ODABR, reporting that the snapshots still exist and can be deleted.

To do that, we first call odabr infosnap to check that the snapshots exist:

[root@odat1 ~]# /opt/odabr/odabr infosnap

│▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒│

odabr - ODA node Backup Restore - Version: 2.0.1-60

Copyright Oracle, Inc. 2013, 2020

--------------------------------------------------------

Author: Ruggero Citton <ruggero.citton@oracle.com>

RAC Pack, Cloud Innovation and Solution Engineering Team

│▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒│

LVM snap name Status COW Size Data%

------------- ---------- ---------- ------

root_snap active 30.00 GiB 27.06%

opt_snap active 60.00 GiB 3.80%

u01_snap active 100.00 GiB 1.44%

[root@odat1 ~]#

And then we delete them:

[root@odat1 ~]# /opt/odabr/odabr delsnap

INFO: 2020-07-26 09:09:09: Please check the logfile '/opt/odabr/out/log/odabr_24797.log' for more details

INFO: 2020-07-26 09:09:09: Removing LVM snapshots

INFO: 2020-07-26 09:09:09: ...removing LVM snapshot for 'opt'

SUCCESS: 2020-07-26 09:09:09: ...snapshot for 'opt' removed successfully

INFO: 2020-07-26 09:09:09: ...removing LVM snapshot for 'u01'

SUCCESS: 2020-07-26 09:09:10: ...snapshot for 'u01' removed successfully

INFO: 2020-07-26 09:09:10: ...removing LVM snapshot for 'root'

SUCCESS: 2020-07-26 09:09:10: ...snapshot for 'root' removed successfully

SUCCESS: 2020-07-26 09:09:10: Remove LVM snapshots done successfully

[root@odat1 ~]#

[root@odat1 ~]#

We do this on BOTH nodes.
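To confirm on each node that no snapshot is left behind, the infosnap output can be counted with a short filter. This is a minimal sketch: the function name `count_active_snaps` is hypothetical, and it assumes the snapshot rows keep the `<name>_snap active ...` layout shown in the output above.

```shell
# Read `odabr infosnap` output on stdin and print how many snapshot rows are "active".
# Assumption: rows look like "root_snap active 30.00 GiB 27.06%".
count_active_snaps() {
    awk '$1 ~ /_snap$/ && $2 == "active" { n++ } END { print n + 0 }'
}
```

Running `/opt/odabr/odabr infosnap | count_active_snaps` before and after `odabr delsnap` should then go from a non-zero count to 0.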

DCSAdmin

The next step will be to update the dcsadmin using the command odacli update-dcsadmin:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-dcsadmin -v 19.6.0.0.0

{

"jobId" : "b4fc6b76-c815-4dd5-a8b7-ddbbd1bd77ae",

"status" : "Created",

"message" : null,

"reports" : [ ],

"createTimestamp" : "July 26, 2020 09:10:19 AM CEST",

"resourceList" : [ ],

"description" : "DcsAdmin patching",

"updatedTime" : "July 26, 2020 09:10:19 AM CEST"

}

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-job -i "b4fc6b76-c815-4dd5-a8b7-ddbbd1bd77ae"

Job details

----------------------------------------------------------------

ID: b4fc6b76-c815-4dd5-a8b7-ddbbd1bd77ae

Description: DcsAdmin patching

Status: Success

Created: July 26, 2020 9:10:19 AM CEST

Message:

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

Patch location validation July 26, 2020 9:10:21 AM CEST July 26, 2020 9:10:21 AM CEST Success

Patch location validation July 26, 2020 9:10:21 AM CEST July 26, 2020 9:10:21 AM CEST Success

dcsadmin upgrade July 26, 2020 9:10:23 AM CEST July 26, 2020 9:10:23 AM CEST Success

dcsadmin upgrade July 26, 2020 9:10:23 AM CEST July 26, 2020 9:10:23 AM CEST Success

Update System version July 26, 2020 9:10:24 AM CEST July 26, 2020 9:10:24 AM CEST Success

Update System version July 26, 2020 9:10:24 AM CEST July 26, 2020 9:10:24 AM CEST Success

[root@odat1 ~]#
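Since every odacli step in this post is followed by a describe-job call, the status check can be scripted. The sketch below only parses text; the names `job_status` and `job_finished` are invented for this example, and the only final states it knows are the two that appear in the outputs of this post (Success and Failure).

```shell
# Read `odacli describe-job` output on stdin and print the value of its "Status:" line.
job_status() {
    awk '$1 == "Status:" { print $2; exit }'
}

# Return 0 when the given status is one of the final states seen in this post.
job_finished() {
    case "$1" in
        Success|Failure) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical polling loop, left commented out because it needs a real ODA
# and a JOB_ID variable set by you:
# until job_finished "$(/opt/oracle/dcs/bin/odacli describe-job -i "$JOB_ID" | job_status)"; do
#     sleep 30
# done
```

Keep in mind that some jobs reboot the node, so a loop like this can be interrupted and is not a replacement for checking the job by hand after the reboot.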

DCSComponents

The next step is to update the dcscomponents with the command odacli update-dcscomponents. One detail here: the command returns a jobId, but it is not valid for describe-job, and you need to use the command “odacli list-jobs” to see the jobs that were created (they are just the SSH keys update and delete jobs):

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-dcscomponents -v 19.6.0.0.0

{

"jobId" : "235a6820-e390-42e1-a4e3-b058c8f9af96",

"status" : "Success",

"message" : null,

"reports" : null,

"createTimestamp" : "July 26, 2020 09:11:05 AM CEST",

"description" : "Job completed and is not part of Agent job list",

"updatedTime" : "July 26, 2020 09:11:06 AM CEST"

}

[root@odat1 ~]#

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-job -i "235a6820-e390-42e1-a4e3-b058c8f9af96"

DCS-10000:Resource Job with ID 235a6820-e390-42e1-a4e3-b058c8f9af96 is not found.

[root@odat1 ~]#

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli list-jobs

ID Description Created Status

---------------------------------------- --------------------------------------------------------------------------- ----------------------------------- ----------

…

666f7269-7f9a-49b1-8742-2447e94fe54e Patch pre-checks for [OS] July 25, 2020 5:30:42 PM CEST Failure

5da9f187-af89-4821-b7dc-7451da21761d Patch pre-checks for [OS] July 25, 2020 10:56:19 PM CEST Success

b4fc6b76-c815-4dd5-a8b7-ddbbd1bd77ae DcsAdmin patching July 26, 2020 9:10:19 AM CEST Success

f3bb0811-cb83-4b9c-a498-b1050a9767ba SSH keys update July 26, 2020 9:11:28 AM CEST Success

0031f81e-5bb8-47c9-8389-2ef266c90363 SSH key delete July 26, 2020 9:11:33 AM CEST Success

bde68cf5-f479-4e95-81b8-123639c8ef0f SSH keys update July 26, 2020 9:12:02 AM CEST Success

e1a2a798-4c7e-4ae6-b70c-1eb150b47680 SSH key delete July 26, 2020 9:12:12 AM CEST Failure

[root@odat1 ~]#

As you can see above, there is one failure related to the SSH key deletion, but this is a known issue documented here.

If you describe the job, you can see the failure:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-job -i "e1a2a798-4c7e-4ae6-b70c-1eb150b47680"

Job details

----------------------------------------------------------------

ID: e1a2a798-4c7e-4ae6-b70c-1eb150b47680

Description: SSH key delete

Status: Failure

Created: July 26, 2020 9:12:12 AM CEST

Message: DCS-10110:Failed to complete the operation.

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

[root@odat1 ~]#
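When the job list is long, the failed jobs can be picked out with a filter instead of reading the table. This is a sketch with a made-up function name (`failed_job_ids`); it assumes the first column is the job ID and the last column is the status, as in the list-jobs output above.

```shell
# Read `odacli list-jobs` output on stdin and print the IDs of jobs whose
# last column is "Failure". Header and separator rows are skipped because
# their first field does not look like a job ID.
failed_job_ids() {
    awk '$NF == "Failure" && $1 ~ /^[0-9a-f]+(-[0-9a-f]+)+$/ { print $1 }'
}
```

Each printed ID can then be passed to `odacli describe-job -i` to see the message of that job.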

To solve it, you need to remove all the “~root/.ssh/id_rsa*” files from both nodes (here I moved them to /tmp):

[root@odat1 .ssh]# ssh odat2

Last login: Sun Jul 26 09:17:52 2020 from odat1

[root@odat2 ~]#

[root@odat2 ~]# mv ~root/.ssh/id_rsa* /tmp

[root@odat2 ~]# ls -l ~root/.ssh/

total 20

-rw-r--r-- 1 root root 1222 Jul 26 09:12 authorized_keys

-rw------- 1 root root 7089 Jul 26 09:12 known_hosts

-rw------- 1 root root 6702 Jul 26 09:11 known_hosts.old

[root@odat2 ~]# logout

Connection to odat2 closed.

[root@odat1 .ssh]#

[root@odat1 .ssh]# mv ~root/.ssh/id_rsa* /tmp

[root@odat1 .ssh]# ls -l ~root/.ssh/

total 20

-rw-r--r-- 1 root root 1222 Jul 26 09:12 authorized_keys

-rw------- 1 root root 7948 Jul 26 09:12 known_hosts

-rw------- 1 root root 7561 Jul 26 09:11 known_hosts.old

[root@odat1 .ssh]# ssh odat2

root@odat2's password:

[root@odat1 .ssh]# cd

[root@odat1 ~]#
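If you prefer not to drop the keys loose into /tmp, the same move can be wrapped in a small function that puts them in a dedicated backup directory. This is only a sketch of the idea: the function name `backup_ssh_keys` and the backup directory are my own choices for the example, not something odacli requires.

```shell
# Move id_rsa* key files out of an .ssh directory into a backup directory.
# Both paths are arguments; nothing is deleted. Prints how many files were moved.
backup_ssh_keys() {
    ssh_dir=$1
    backup_dir=$2
    moved=0
    mkdir -p "$backup_dir" || return 1
    for f in "$ssh_dir"/id_rsa*; do
        [ -e "$f" ] || continue        # the glob matched nothing
        mv -- "$f" "$backup_dir"/ || return 1
        moved=$((moved + 1))
    done
    echo "$moved"
}
```

For example, `backup_ssh_keys ~root/.ssh /tmp/ssh-keys-backup` run on each node would move the key pair and leave authorized_keys and known_hosts in place.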

And I recommend removing the lock files from oraInventory too (from both nodes):

[root@odat1 ~]# mv /u01/app/oraInventory/locks /tmp/grid-locks

[root@odat1 ~]#

[root@odat2 ~]# mv /u01/app/oraInventory/locks /tmp/grid-locks

[root@odat2 ~]#

Create-prepatchreport

The next step is to verify whether you can update to 19.6 or not. The odacli will run a lot of tests for GI and ILOM. To do that, we just need to call odacli create-prepatchreport, and then use odacli describe-prepatchreport to check the report:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli create-prepatchreport -s -v 19.6.0.0.0

Job details

----------------------------------------------------------------

ID: de5d169c-bebf-4441-9fa8-4e30efc971de

Description: Patch pre-checks for [ILOM, GI, ORACHKSERVER]

Status: Created

Created: July 26, 2020 9:26:05 AM CEST

Message: Use 'odacli describe-prepatchreport -i de5d169c-bebf-4441-9fa8-4e30efc971de' to check details of results

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-prepatchreport -i de5d169c-bebf-4441-9fa8-4e30efc971de

Patch pre-check report

------------------------------------------------------------------------

Job ID: de5d169c-bebf-4441-9fa8-4e30efc971de

Description: Patch pre-checks for [ILOM, GI, ORACHKSERVER]

Status: FAILED

Created: July 26, 2020 9:26:05 AM CEST

Result: One or more pre-checks failed for [ORACHK]

Node Name

---------------

odat1

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__ILOM__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Checking Ilom patch Version Success Successfully verified the versions

Patch location validation Success Successfully validated location

__GI__

Validate supported GI versions Success Validated minimum supported versions.

Validate available space Success Validated free space under /u01

Verify DB Home versions Success Verified DB Home versions

Validate patching locks Success Validated patching locks

Validate clones location exist Success Validated clones location

Validate ODABR snapshots exist Success No ODABR snaps found on the node.

__ORACHK__

Running orachk Failed Failed to run Orachk: Failed to run

orachk.

Node Name

---------------

odat2

Pre-Check Status Comments

------------------------------ -------- --------------------------------------

__ILOM__

Validate supported versions Success Validated minimum supported versions.

Validate patching tag Success Validated patching tag: 19.6.0.0.0.

Is patch location available Success Patch location is available.

Checking Ilom patch Version Success Successfully verified the versions

Patch location validation Success Successfully validated location

__GI__

Validate supported GI versions Success Validated minimum supported versions.

Validate available space Success Validated free space under /u01

Verify DB Home versions Success Verified DB Home versions

Validate patching locks Success Validated patching locks

Validate clones location exist Success Validated clones location

Validate ODABR snapshots exist Success No ODABR snaps found on the node.

__ORACHK__

Running orachk Failed Failed to run Orachk: Failed to run

orachk.

[root@odat1 ~]#

As you can see above, orachk fails. But as documented in the release notes, this can be ignored. I address this error in the 19.6 to 19.7 part, but since there is no database running at this point, it can be ignored here. If you want to check what the error is, you can go to the folder /opt/oracle/dcs/oracle.ahf/orachk/SERVER/<jobId>/orachk_odat1_<*>/ and check the HTML result.
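The report is long, so it can help to print only the failed checks together with the node they belong to. The sketch below is a heuristic text filter, not an odacli feature: the name `failed_prechecks` is invented, and it assumes the "Node Name" block and the word "Failed" in the status column look like the report above.

```shell
# Read `odacli describe-prepatchreport` output on stdin and print
# "<node>: <row>" for every row that has a field equal to "Failed".
failed_prechecks() {
    awk '
        /^Node Name/          { want_node = 1; next }   # node name follows
        want_node && /^-+$/   { next }                  # skip the dashed separator
        want_node && NF       { node = $1; want_node = 0; next }
        {
            for (i = 1; i <= NF; i++)
                if ($i == "Failed") { print node ": " $0; break }
        }
    '
}
```

With the report above, this would list the "Running orachk" row once for odat1 and once for odat2, which is the failure that can be ignored at this step.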

Update-server

Once the report has been checked, we can call odacli update-server. This is where the magic happens: GI and ILOM are updated:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-server -v 19.6.0.0.0

{

"jobId" : "98623be2-f0ff-4ec6-be7b-79331eb32380",

"status" : "Created",

"message" : "Success of server update will trigger reboot of the node after 4-5 minutes. Please wait until the node reboots.",

"reports" : [ ],

"createTimestamp" : "July 26, 2020 09:30:44 AM CEST",

"resourceList" : [ ],

"description" : "Server Patching",

"updatedTime" : "July 26, 2020 09:30:44 AM CEST"

}

[root@odat1 ~]#

As you can imagine, a jobId is generated and we can follow it until it finishes:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-job -i "98623be2-f0ff-4ec6-be7b-79331eb32380"

Job details

----------------------------------------------------------------

ID: 98623be2-f0ff-4ec6-be7b-79331eb32380

Description: Server Patching

Status: Success

Created: July 26, 2020 9:30:44 AM CEST

Message:

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

Patch location validation July 26, 2020 9:31:08 AM CEST July 26, 2020 9:31:08 AM CEST Success

Patch location validation July 26, 2020 9:31:08 AM CEST July 26, 2020 9:31:08 AM CEST Success

dcs-controller upgrade July 26, 2020 9:31:10 AM CEST July 26, 2020 9:31:10 AM CEST Success

dcs-controller upgrade July 26, 2020 9:31:10 AM CEST July 26, 2020 9:31:10 AM CEST Success

Creating repositories using yum July 26, 2020 9:31:12 AM CEST July 26, 2020 9:31:14 AM CEST Success

Applying HMP Patches July 26, 2020 9:31:14 AM CEST July 26, 2020 9:31:15 AM CEST Success

Patch location validation July 26, 2020 9:31:17 AM CEST July 26, 2020 9:31:17 AM CEST Success

Patch location validation July 26, 2020 9:31:17 AM CEST July 26, 2020 9:31:17 AM CEST Success

oda-hw-mgmt upgrade July 26, 2020 9:31:19 AM CEST July 26, 2020 9:31:19 AM CEST Success

oda-hw-mgmt upgrade July 26, 2020 9:31:19 AM CEST July 26, 2020 9:31:20 AM CEST Success

OSS Patching July 26, 2020 9:31:21 AM CEST July 26, 2020 9:31:22 AM CEST Success

Applying Firmware Disk Patches July 26, 2020 9:33:10 AM CEST July 26, 2020 9:35:01 AM CEST Success

Applying Firmware Expander Patches July 26, 2020 9:35:16 AM CEST July 26, 2020 9:35:35 AM CEST Success

Applying Firmware Controller Patches July 26, 2020 9:35:48 AM CEST July 26, 2020 9:36:06 AM CEST Success

Checking Ilom patch Version July 26, 2020 9:36:08 AM CEST July 26, 2020 9:36:10 AM CEST Success

Checking Ilom patch Version July 26, 2020 9:36:10 AM CEST July 26, 2020 9:36:13 AM CEST Success

Patch location validation July 26, 2020 9:36:13 AM CEST July 26, 2020 9:36:14 AM CEST Success

Patch location validation July 26, 2020 9:36:13 AM CEST July 26, 2020 9:36:14 AM CEST Success

Save password in Wallet July 26, 2020 9:36:16 AM CEST July 26, 2020 9:36:16 AM CEST Success

Apply Ilom patch July 26, 2020 9:36:17 AM CEST July 26, 2020 9:36:18 AM CEST Success

Apply Ilom patch July 26, 2020 9:36:18 AM CEST July 26, 2020 9:36:19 AM CEST Success

Copying Flash Bios to Temp location July 26, 2020 9:36:19 AM CEST July 26, 2020 9:36:19 AM CEST Success

Copying Flash Bios to Temp location July 26, 2020 9:36:19 AM CEST July 26, 2020 9:36:19 AM CEST Success

Starting the clusterware July 26, 2020 9:38:29 AM CEST July 26, 2020 9:40:13 AM CEST Success

Creating GI home directories July 26, 2020 9:40:17 AM CEST July 26, 2020 9:40:17 AM CEST Success

Cloning Gi home July 26, 2020 9:40:17 AM CEST July 26, 2020 9:44:10 AM CEST Success

Cloning Gi home July 26, 2020 9:44:10 AM CEST July 26, 2020 9:48:05 AM CEST Success

Configuring GI July 26, 2020 9:48:05 AM CEST July 26, 2020 9:52:19 AM CEST Success

Running GI upgrade root scripts July 26, 2020 10:09:20 AM CEST July 26, 2020 10:21:17 AM CEST Success

Resetting DG compatibility July 26, 2020 10:21:42 AM CEST July 26, 2020 10:22:11 AM CEST Success

Running GI config assistants July 26, 2020 10:22:11 AM CEST July 26, 2020 10:24:24 AM CEST Success

restart oakd July 26, 2020 10:24:36 AM CEST July 26, 2020 10:24:48 AM CEST Success

Updating GiHome version July 26, 2020 10:24:49 AM CEST July 26, 2020 10:24:57 AM CEST Success

Updating GiHome version July 26, 2020 10:24:49 AM CEST July 26, 2020 10:24:57 AM CEST Success

Update System version July 26, 2020 10:25:18 AM CEST July 26, 2020 10:25:19 AM CEST Success

Update System version July 26, 2020 10:25:19 AM CEST July 26, 2020 10:25:19 AM CEST Success

preRebootNode Actions July 26, 2020 10:25:20 AM CEST July 26, 2020 10:26:17 AM CEST Success

preRebootNode Actions July 26, 2020 10:26:17 AM CEST July 26, 2020 10:27:08 AM CEST Success

Reboot Ilom July 26, 2020 10:27:08 AM CEST July 26, 2020 10:27:08 AM CEST Success

Reboot Ilom July 26, 2020 10:27:08 AM CEST July 26, 2020 10:27:09 AM CEST Success

[root@odat1 ~]#

On the ODA X5-2 this process took almost 1 hour, and all the update steps passed. If you follow /opt/oracle/dcs/log/dcs-agent.log you can see more details. At this point, the GI has been upgraded to 19.6.

After this job finishes, the ODA will reboot (there can be around 10 minutes between the end of the job and the reboot).

Update-storage

The last step is to patch the storage with the command odacli update-storage. This is needed because the previous step does not necessarily update everything, for example the firmware of the disks.

You can use odacli describe-component to see whether some parts are not up to date:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-component

System Version

---------------

19.6.0.0.0

System node Name

---------------

odat1

Local System Version

---------------

19.6.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK 19.6.0.0.0 up-to-date

GI 19.6.0.0.200114 up-to-date

DB {

[ OraDB18000_home1 ] 18.3.0.0.180717 18.9.0.0.200114

[ OraDB12102_home1,OraDB12102_home2 ] 12.1.0.2.180717 12.1.0.2.200114

[ OraDB11204_home1,OraDB11204_home2 ] 11.2.0.4.180717 11.2.0.4.200114

}

DCSAGENT 19.6.0.0.0 up-to-date

ILOM 4.0.4.52.r132805 up-to-date

BIOS 30300200 up-to-date

OS 7.7 up-to-date

FIRMWARECONTROLLER {

[ c0 ] 4.650.00-7176 up-to-date

[ c1,c2 ] 13.00.00.00 up-to-date

}

FIRMWAREEXPANDER 001E up-to-date

FIRMWAREDISK {

[ c0d0,c0d1 ] A7E0 up-to-date

[ c1d0,c1d1,c1d2,c1d3,c1d4,c1d5,c1d6, PD51 up-to-date

c1d7,c1d8,c1d9,c1d10,c1d11,c1d12,c1d13,

c1d14,c1d15,c2d0,c2d1,c2d2,c2d3,c2d4,

c2d5,c2d6,c2d7,c2d8,c2d9,c2d10,c2d11,

c2d12,c2d13,c2d14,c2d15 ]

[ c1d16,c1d17,c1d18,c1d19,c1d20,c1d21, A29A up-to-date

c1d22,c1d23,c2d16,c2d17,c2d18,c2d19,

c2d20,c2d21,c2d22,c2d23 ]

}

HMP 2.4.5.0.1 up-to-date

System node Name

---------------

odat2

Local System Version

---------------

19.6.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK 19.6.0.0.0 up-to-date

GI 19.6.0.0.200114 up-to-date

DB {

[ OraDB18000_home1 ] 18.3.0.0.180717 18.9.0.0.200114

[ OraDB12102_home1,OraDB12102_home2 ] 12.1.0.2.180717 12.1.0.2.200114

[ OraDB11204_home1,OraDB11204_home2 ] 11.2.0.4.180717 11.2.0.4.200114

}

DCSAGENT 19.6.0.0.0 up-to-date

ILOM 4.0.4.52.r132805 up-to-date

BIOS 30300200 up-to-date

OS 7.7 up-to-date

FIRMWARECONTROLLER {

[ c0 ] 4.650.00-7176 up-to-date

[ c1,c2 ] 13.00.00.00 up-to-date

}

FIRMWAREEXPANDER 001E up-to-date

FIRMWAREDISK {

[ c0d0,c0d1 ] A7E0 up-to-date

[ c1d0,c1d1,c1d2,c1d3,c1d4,c1d5,c1d6, PD51 up-to-date

c1d7,c1d8,c1d9,c1d10,c1d11,c1d12,c1d13,

c1d14,c1d15,c2d0,c2d1,c2d2,c2d3,c2d4,

c2d5,c2d6,c2d7,c2d8,c2d9,c2d10,c2d11,

c2d12,c2d13,c2d14,c2d15 ]

[ c1d16,c1d17,c1d18,c1d19,c1d20,c1d21, A29A up-to-date

c1d22,c1d23,c2d16,c2d17,c2d18,c2d19,

c2d20,c2d21,c2d22,c2d23 ]

}

HMP 2.4.5.0.1 up-to-date

[root@odat1 ~]#
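To spot the rows that still have a newer version available without reading the whole listing, a rough filter can help. This is a sketch under clear assumptions: the name `outdated_components` is made up, and it only catches rows whose installed and available versions are both purely numeric dotted strings (as the DB homes above are), so firmware codes such as A7E0 would be missed.

```shell
# Read `odacli describe-component` output on stdin and print the rows whose
# last two fields are both dotted numeric versions, i.e. "installed available"
# instead of "installed up-to-date".
outdated_components() {
    awk 'NF >= 2 && $NF ~ /^[0-9]+(\.[0-9]+)+$/ && $(NF-1) ~ /^[0-9]+(\.[0-9]+)+$/ { print }'
}
```

In the output above it would print only the three DB home rows, since the database homes are not patched by the server and storage steps.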

And we can update it by calling odacli update-storage:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli update-storage -v 19.6.0.0.0

{

"jobId" : "081de564-2cc1-4cb9-b18e-b3d5c484c58d",

"status" : "Created",

"message" : "Success of Storage Update may trigger reboot of node after 4-5 minutes. Please wait till node restart",

"reports" : [ ],

"createTimestamp" : "July 26, 2020 10:42:51 AM CEST",

"resourceList" : [ ],

"description" : "Storage Firmware Patching",

"updatedTime" : "July 26, 2020 10:42:51 AM CEST"

}

[root@odat1 ~]#

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-job -i "081de564-2cc1-4cb9-b18e-b3d5c484c58d"

Job details

----------------------------------------------------------------

ID: 081de564-2cc1-4cb9-b18e-b3d5c484c58d

Description: Storage Firmware Patching

Status: Success

Created: July 26, 2020 10:42:51 AM CEST

Message:

Task Name Start Time End Time Status

---------------------------------------- ----------------------------------- ----------------------------------- ----------

Applying Firmware Disk Patches July 26, 2020 10:47:24 AM CEST July 26, 2020 10:50:27 AM CEST Success

Applying Firmware Controller Patches July 26, 2020 10:50:42 AM CEST July 26, 2020 10:51:00 AM CEST Success

preRebootNode Actions July 26, 2020 10:51:01 AM CEST July 26, 2020 10:51:01 AM CEST Success

preRebootNode Actions July 26, 2020 10:51:01 AM CEST July 26, 2020 10:51:01 AM CEST Success

Reboot Ilom July 26, 2020 10:51:01 AM CEST July 26, 2020 10:51:01 AM CEST Success

Reboot Ilom July 26, 2020 10:51:02 AM CEST July 26, 2020 10:51:02 AM CEST Success

[root@odat1 ~]#

After the job finishes (and after around 10 minutes), the ODA will reboot automatically.

And you can see that everything is now up to date:

[root@odat1 ~]# /opt/oracle/dcs/bin/odacli describe-component

System Version

---------------

19.6.0.0.0

System node Name

---------------

odat1

Local System Version

---------------

19.6.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK 19.6.0.0.0 up-to-date

GI 19.6.0.0.200114 up-to-date

DB {

[ OraDB18000_home1 ] 18.3.0.0.180717 18.9.0.0.200114

[ OraDB12102_home1,OraDB12102_home2 ] 12.1.0.2.180717 12.1.0.2.200114

[ OraDB11204_home1,OraDB11204_home2 ] 11.2.0.4.180717 11.2.0.4.200114

}

DCSAGENT 19.6.0.0.0 up-to-date

ILOM 4.0.4.52.r132805 up-to-date

BIOS 30300200 up-to-date

OS 7.7 up-to-date

FIRMWARECONTROLLER {

[ c0 ] 4.650.00-7176 up-to-date

[ c1,c2 ] 13.00.00.00 up-to-date

}

FIRMWAREEXPANDER 001E up-to-date

FIRMWAREDISK {

[ c0d0,c0d1 ] A7E0 up-to-date

[ c1d0,c1d1,c1d2,c1d3,c1d4,c1d5,c1d6, PD51 up-to-date

c1d7,c1d8,c1d9,c1d10,c1d11,c1d12,c1d13,

c1d14,c1d15,c2d0,c2d1,c2d2,c2d3,c2d4,

c2d5,c2d6,c2d7,c2d8,c2d9,c2d10,c2d11,

c2d12,c2d13,c2d14,c2d15 ]

[ c1d16,c1d17,c1d18,c1d19,c1d20,c1d21, A29A up-to-date

c1d22,c1d23,c2d16,c2d17,c2d18,c2d19,

c2d20,c2d21,c2d22,c2d23 ]

}

HMP 2.4.5.0.1 up-to-date

System node Name

---------------

odat2

Local System Version

---------------

19.6.0.0.0

Component Installed Version Available Version

---------------------------------------- -------------------- --------------------

OAK 19.6.0.0.0 up-to-date

GI 19.6.0.0.200114 up-to-date

DB {

[ OraDB18000_home1 ] 18.3.0.0.180717 18.9.0.0.200114

[ OraDB12102_home1,OraDB12102_home2 ] 12.1.0.2.180717 12.1.0.2.200114

[ OraDB11204_home1,OraDB11204_home2 ] 11.2.0.4.180717 11.2.0.4.200114

}

DCSAGENT 19.6.0.0.0 up-to-date

ILOM 4.0.4.52.r132805 up-to-date

BIOS 30300200 up-to-date

OS 7.7 up-to-date

FIRMWARECONTROLLER {

[ c0 ] 4.650.00-7176 up-to-date

[ c1,c2 ] 13.00.00.00 up-to-date

}

FIRMWAREEXPANDER 001E up-to-date

FIRMWAREDISK {

[ c0d0,c0d1 ] A7E0 up-to-date

[ c1d0,c1d1,c1d2,c1d3,c1d4,c1d5,c1d6, PD51 up-to-date

c1d7,c1d8,c1d9,c1d10,c1d11,c1d12,c1d13,

c1d14,c1d15,c2d0,c2d1,c2d2,c2d3,c2d4,

c2d5,c2d6,c2d7,c2d8,c2d9,c2d10,c2d11,

c2d12,c2d13,c2d14,c2d15 ]

[ c1d16,c1d17,c1d18,c1d19,c1d20,c1d21, A29A up-to-date

c1d22,c1d23,c2d16,c2d17,c2d18,c2d19,

c2d20,c2d21,c2d22,c2d23 ]

}

HMP 2.4.5.0.1 up-to-date

[root@odat1 ~]#

Disclaimer: “The postings on this site are my own and don’t necessarily represent my actual employer positions, strategies or opinions. The information here was edited to be useful for general purpose, specific data and identifications were removed to allow reach the generic audience and to be useful for the community. Post protected by copyright.”


Hi Fernando,

Thank you very much for providing such a detailed blog on upgrading the ODA machine.

Can you please clear my doubt. I am also planning to Upgrade my ODA Machine X7-2M from 18.3 to 19.6

I have resized /u01 mountpoint to 150 GB and now I have less than 190 GB in my PV,

I have reduce the size of my LVM /u01 to 100 GB by deleting some unwanted files. It will not cause an issue right.

As I am seeing below warning while running lvreduce command

WARNING: Reducing active logical volume to 130.00 GiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

[root@odat1 ~]# lvreduce -L 130G /dev/mapper/VolGroupSys-LogVolU01

WARNING: Reducing active logical volume to 130.00 GiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce VolGroupSys/LogVolU01? [y/n]: y

Size of logical volume VolGroupSys/LogVolU01 changed from 170.00 GiB (5440 extents) to 130.00 GiB (4160 extents).

Logical volume LogVolU01 successfully resized.

Hi,

The point is that before you reduce the LVM logical volume, you need to shrink the filesystem first. If you don’t do that, you can corrupt and lose data.

One option is to use the “--resizefs” option of the lvreduce command.

But you can open an SR and check how to do that properly in your case.

Regards.

Fernando Simon

Thanks

Thanks Fernando for reply.
