In my previous post, I talked about why you should use the new parameters DB_FLASHBACK_LOG_DEST_SIZE and DB_FLASHBACK_LOG_DEST in Oracle 23ai, how to configure them, and their benefits. Here you will find additional details about these two parameters and what they change in the internal views and for restore points.


23ai, new parameters DB_FLASHBACK_LOG_DEST_SIZE and DB_FLASHBACK_LOG_DEST

Oracle Database includes Oracle Flashback Technology, which allows you to view past images of your data without restoring your database. You can use restore points, restore tables and rows, and do a lot of other things. To use it (in its simplest form), you need to enable ARCHIVELOG mode and flashback mode for your database; Oracle then creates additional logs while you change the data.

Unfortunately, these logs are exactly what causes some issues. Jonathan Lewis has already described the problem; in summary, when you change data you need to write more, because you generate both UNDO and flashback logs. In essence, every change costs extra writes.

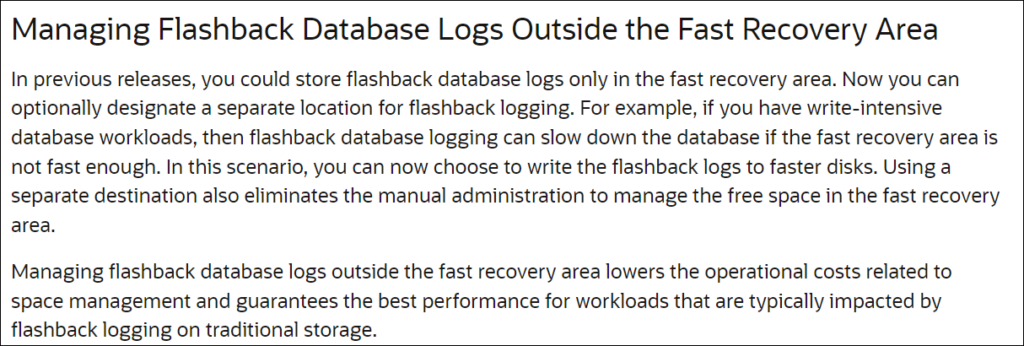

Until Oracle 23ai, it was not possible to choose where these logs are written: (more or less) they always went to the same place as your archivelogs (when using the fast recovery area). So, archivelogs and flashback logs were tied to the same location. Luckily, this has changed with the 23ai new features:

The idea is to put the flashback logs on a dedicated (and fast) disk to reduce the impact of writing them.

2023/2024

WOW, what a year 2023 was. The first thing to say about 2023 is Thank You. First, thanks to my family for all their support.

I need to say Thank You to Jennifer and everyone in the Oracle ACE Program. At the beginning of 2023, I became an Oracle ACE Director, and it was an amazing journey to get there. So, Jennifer and the Oracle ACE Program, Thank You for helping me reach this level. But I was not alone: you (the reader), who access my blog and social media, helped a lot. Your visits to my posts pushed me to improve my writing in every post (like the last one about DG PDB and the new command). Last but not least, Thank You so much, Rodrigo Jorge and Tim Chien, for nominating me as ACE Director.

Along the same lines, this year I returned to Oracle Cloud World as a speaker (after 7 years). It is simply THE biggest Oracle event. So, Thank You, Mike Dietrich and Rodrigo Jorge, for the invitation. I was able to talk, share my experience, and be on stage with you. From what I heard, the session was ranked in the top 12 of the whole OCW. Thank you too, Tim Chien, for the invitation to speak as well; it was amazing to share the stage with you.

And speaking of sessions, I need to say Thank You to POUG, DOAG, and UKOUG for accepting my sessions at your events. Meeting old friends (and making new ones) is simply amazing. I hope to see you soon at other sessions.

At work, it was a stunning year too: a big project delivered flawlessly (a core banking migration), plus several others during the year. Thank You to my coworkers and friends there.

So, for 2024, I simply wish to do and deliver as much as I did in 2023. 2023 was simply amazing, and I hope 2024 will be the same. And I wish the same for you too.

Photo by Kajetan Sumila on Unsplash

21c, DG PDB, New Steps

When DG PDB was released for 21c (in version 21.7), I wrote a blog post about how to use the feature (you can read it here). That was in August 2022, and since then we have received small changes and corrections, but with the 21.12 update (patch 35740258) we got new commands like “EDIT CONFIGURATION PREPARE DGPDB”.

Not only that, Ludovico Caldara (Data Guard PM) recently wrote a blog post about new commands for Data Guard preparation that can be used with Broker. They are an evolution of the commands I covered in a previous blog post.

So, in this post, I will cover the new commands for DG PDB and the changes/improvements that appeared in the latest version. It is a long post, but everything is covered here: there are no gaps or missing information, and all the steps, logs, and outputs are described and documented.

Exadata, REQUIRED_MIRROR_FREE_MB and GRID 19.19

I already wrote about the issue introduced with GI 19.16 in my previous post (click here to read), where (only on Exadata) Oracle allocated/reserved more space to guarantee mirroring/rebalance. Fortunately, after some months of discussion, they rolled back the change and released a patch that can be applied on GI 19.19.

The patch, number 35285795, was released on June 12 and can only be applied on GI 19.19. But to get your space back, there is one important rule: your mirroring needs to be HIGH. This is necessary because of the “Smart Rebalance”, which allows a disk to be dropped without losing the mirroring. I will write another post just about it.

Exadata version 23.1.0.0.0 – Part 04

On 08/March/2023, the Oracle Exadata team released version 23.1.0.0.0, which includes a significant change: OEL 8. I already explained this in my first post, which you can read here. In my previous posts, I described how to patch the storage, the switches, and the dom0. In this post, I will discuss how to patch the domU.

What you can do

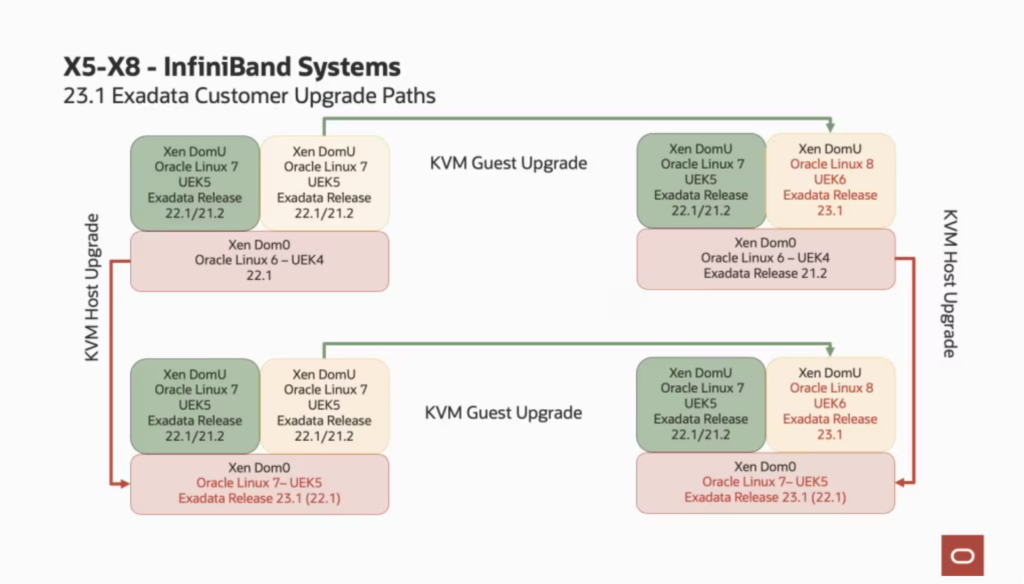

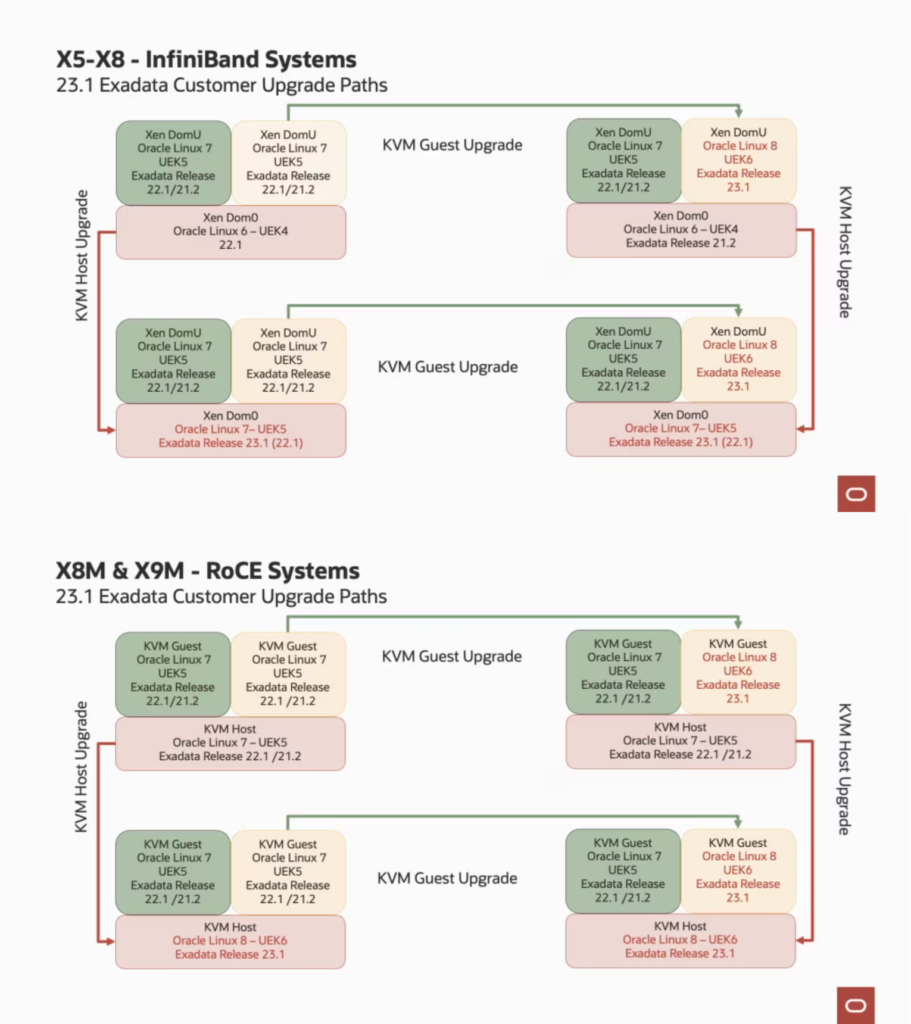

I already wrote this previously, but it is important to understand the upgrade paths you can take: if you are running the old Exadata with InfiniBand, your dom0 can only be updated up to Oracle Linux 7 with UEK5, while the domU can be upgraded to OEL 8. You can upgrade in any order, dom0 or domU first. If you are running RoCE, your dom0 can run the latest OEL 8 with UEK6. The Oracle blog post gives an excellent explanation of the upgrade paths, and you can see its images above (I used the images from their post).

Exadata version 23.1.0.0.0 – Part 03

On 08/March/2023, the Oracle Exadata team released version 23.1.0.0.0, which includes a significant change: OEL 8. I already explained this in my first post, which you can read here. In my second post, I wrote about how to patch the storage and the switches. In this post, I will discuss how to patch the dom0.

What you can do

Due to the changes for OEL 8, it is important to understand the upgrade paths you can take. As I wrote in my first post: if you are running the old Exadata with InfiniBand, your dom0 can only be updated up to Oracle Linux 7 with UEK5, while the domU can be upgraded to OEL 8. You can upgrade in any order, dom0 or domU first. If you are running RoCE, your dom0 can run the latest OEL 8 with UEK6. The Oracle blog post gives an excellent explanation of the upgrade paths, and below you can see its images (I used the images from their post).

Since the environment I am patching is Exadata with InfiniBand, my dom0 will only be upgraded up to OEL 7 running UEK5, but the Exadata-related software will still be upgraded to version 23.1. The domU will be upgraded to OEL 8 with UEK6. So, basically, it will look like this (I used the image from the Exadata team's post):

Here, I patched the dom0 first because, once it is patched, all the versions already released for the domU will be compatible with it. Since I am upgrading, the dom0 running 23.1 will be compatible with a domU running a lower version.

Exadata version 23.1.0.0.0 – Part 02

On 08/March/2023, the Oracle Exadata team released version 23.1.0.0.0, which includes a significant change: OEL 8. I already explained this in my first post, which you can read here. Here, I will show how to patch the switches and storage cells to version 23.1.0.0.

Patching

As you know, I have been working with Exadata since 2010 and have already posted about how to upgrade to version 19.x, version 18.x, version 12.x (Portuguese only), and many other details about Oracle Engineered Systems. Fortunately, I had the opportunity to apply version 23.1 to one environment, and I will show the details.

Here, my environment is:

- Exadata X6 for storage and dbnodes.

- InfiniBand Switches.

- Virtualized configuration (dom0 and domU).

- dom0 running version 22.1.0.9.

- domU running version 22.1.0.9.

- Grid Infrastructure running version 19.17.

Since I am running with dom0/domU, my base machine (from where I will run most of the patches) is the dom0. From there, I have passwordless SSH access to all the cells, dbnodes, domUs, and switches.

Before you start patching, please check the patch README and confirm that everything is in compliance. Do not start any patch if you do not meet the requirements, even for something as simple as the database, grid, and switch versions. Also, do not start the patch if your machine has HW errors. So, please read the note Exadata System Software 23.1.0.0.0 Update (32829291) (Doc ID 2772585.1).

Exadata version 23.1.0.0.0 – Part 01

On 08/March/2023, the Oracle Exadata team released version 23.1.0.0.0, which includes a significant change: OEL 8. But that is not all; there are other interesting requirements, which I will discuss below. I will show you how to patch to version 23.1 and some other details as well. In this first part, I will just discuss one interesting point that you need to take care of before you start patching, and it is probably more important than you imagine.

Before you patch

The new version brings some requirements (on what you need to be running) before you are allowed to patch. For Grid Infrastructure, you need to run 19.15 or newer. You can even run 21c (21.6 or newer) if you want. If you want to know how to do that, I already discussed how to upgrade to both in previous posts (19c and 21c).

For databases, the recommendation is the same: 19c or 21c. You can still run older versions (11g, 12c, and 18c), but they are already (or will soon be) under Market Driven Support. You can read the MOS note about that (here), but to be clear, right now only 19c has Premier Support available.

And now things become quite interesting, because 23.1 is the first version running OEL 8. And if you check the supplemental README for version 23.1, only 19c is listed as supported for the database and GI. So, be aware and check the compatibility.

One important detail for this version is that you can only upgrade to 23.1 if your current Exadata version is 21.2.10 or newer (basically, only about one year old). If not, you need to upgrade to (at least) that version before you patch to 23.1. And this will be the same one year from now: it will only be possible to upgrade to 24.x if you are running (at least) 23.1.
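The two version gates described above (GI 19.15+ or 21.6+, and an Exadata base of 21.2.10 or newer) are easy to check before you start planning. Below is a minimal Python sketch, assuming versions are plain dotted strings such as "22.1.0.9"; the helper names `parse_version`, `exadata_base_ok`, and `gi_ok` are hypothetical and not part of any Oracle tool. The patch README remains the real source of truth.

```python
from __future__ import annotations


def parse_version(v: str) -> tuple[int, ...]:
    """Turn a dotted version like '21.2.10' into a comparable tuple (21, 2, 10)."""
    return tuple(int(part) for part in v.split("."))


def exadata_base_ok(current: str, minimum: str = "21.2.10") -> bool:
    """Exadata 23.1 can only be applied on top of 21.2.10 or newer."""
    return parse_version(current) >= parse_version(minimum)


def gi_ok(gi_version: str) -> bool:
    """GI must be 19c at 19.15+ or 21c at 21.6+ (other versions are not covered here)."""
    v = parse_version(gi_version)
    if v[0] == 19:
        return v >= (19, 15)
    if v[0] == 21:
        return v >= (21, 6)
    return False


# Hypothetical environment: Exadata 22.1.0.9 with GI 19.17.
print(exadata_base_ok("22.1.0.9"), gi_ok("19.17"))  # True True
```

Comparing numeric tuples instead of raw strings matters here: as strings, "21.2.9" sorts after "21.2.10", which would wrongly pass the gate.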

If you are running the old Exadata with InfiniBand, your dom0 can only be updated up to Oracle Linux 7 with UEK5, while the domU can be upgraded to OEL 8. You can upgrade in any order, dom0 or domU first. If you are running RoCE, your dom0 can run the latest OEL 8 with UEK6. The Oracle blog post gives an excellent explanation of the upgrade paths, and below you can see its images (I used the images from their post).
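When building the plan for a virtualized environment, it can help to write these upgrade paths down as a small lookup table. This is a hedged Python sketch: it encodes only the combinations stated in the text, and the names `MAX_OS` and `max_os` are hypothetical. Any combination the text does not describe raises an error instead of guessing.

```python
# Highest OS level per (network fabric, role), as described in the text.
MAX_OS = {
    ("InfiniBand", "dom0"): "Oracle Linux 7 (UEK5)",
    ("InfiniBand", "domU"): "Oracle Linux 8",
    ("RoCE", "dom0"): "Oracle Linux 8 (UEK6)",
}


def max_os(fabric: str, role: str) -> str:
    """Return the highest OS level the text describes for this fabric/role pair."""
    key = (fabric, role)
    if key not in MAX_OS:
        # The text does not describe this combination; do not guess.
        raise ValueError(f"upgrade path not described in the text: {fabric}/{role}")
    return MAX_OS[key]


for role in ("dom0", "domU"):
    print(role, "->", max_os("InfiniBand", role))
```

Because dom0 and domU can be upgraded independently and in any order, the sketch looks up each role separately rather than enforcing a sequence.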

So, as usual, the version covers everything: switches, storage, and database nodes. And while the patches for switches and storage are quite normal, for virtualized environments the upgrade paths start to be a little more challenging to plan. I will explain, but (as hinted in the blog post) you can upgrade the hosts and guests independently and in any order. The hard part is not applying the patch itself, but creating the plan. Remember the requirements for Oracle Database and GI? You can spend a lot of time patching parts other than the Exadata version.

But let’s put the pieces together, the small lines written in several places. With version 23.1, Oracle is telling you that you need to be running at least Oracle Database 19c to keep upgrading to (and using) future Exadata releases, whatever machine you use, with an IB or RoCE network. You can no longer use GI older than 19.15, and the databases are effectively pushed to 19c as well. Imagine that you hit some kind of incompatibility between 11g/12c and OEL 8: if you need to open an SR, you need to have (and pay for) that support, and it will not be cheap.

And consider that OEL 8 is a requirement for the upcoming 23c (which will be the new LTS version). Imagine one year from now, when Exadata 24.x arrives: do you think Oracle will still support 11g on the new OEL 9? I don't think so.

And by the way, IMHO you should be running 19c. 11g is from 2009, and 12.1 from July 2013. So, they are old and out of support for good reasons. I understand that they still work and that you may have legacy applications. But the point is not just supporting them; it is being able to keep upgrading/updating your Exadata. Please do not postpone your database upgrades any longer, for the sake of your Exadata.

Exadata, REQUIRED_MIRROR_FREE_MB and GRID 19.16

Starting with Grid Infrastructure/ASM 19.16, Oracle changed how REQUIRED_MIRROR_FREE_MB is calculated, and the impact is bigger than expected. Check the examples below of the changes and how they will impact you. This applies to all GI/ASM versions starting with 19.16, and only to Exadata/ExaCC.

Please read my new post about this issue.

REQUIRED_MIRROR_FREE_MB

According to the 19c documentation, REQUIRED_MIRROR_FREE_MB is the:

“amount of space that must be available in a disk group to restore full redundancy after the worst failure that can be tolerated by the disk group without adding additional storage. This requirement ensures that there are sufficient failure groups to restore redundancy”.

On Exadata (until 19.16), it is calculated based on the redundancy of your diskgroup. With HIGH, the raw size of two disks (the largest in your diskgroup) is reserved; with NORMAL, the raw size of one disk. On Exadata, this differs from other environments because it does not consider the failure of a whole failgroup, given the way extents are written/spread (more info below and in another post).
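As a worked illustration of the pre-19.16 Exadata rule just described, the sketch below reserves the raw size of the largest disk(s) in the diskgroup: two for HIGH, one for NORMAL. It is a minimal Python sketch, assuming per-disk sizes in MB (for example, as you might read them from the TOTAL_MB column of V$ASM_DISK); the function name is hypothetical and the disk sizes are made-up values, not measurements.

```python
from __future__ import annotations

# Number of (largest) disks whose raw size is reserved, per redundancy level,
# following the pre-19.16 Exadata behavior described in the text.
DISKS_RESERVED = {"HIGH": 2, "NORMAL": 1}


def required_mirror_free_mb(disk_sizes_mb: list[int], redundancy: str) -> int:
    """Sum the raw sizes of the N largest disks (N = 2 for HIGH, 1 for NORMAL)."""
    n = DISKS_RESERVED[redundancy]
    return sum(sorted(disk_sizes_mb, reverse=True)[:n])


# Hypothetical diskgroup: 36 disks of 1,000,000 MB each (illustrative only).
disks = [1_000_000] * 36
print(required_mirror_free_mb(disks, "HIGH"))    # 2000000
print(required_mirror_free_mb(disks, "NORMAL"))  # 1000000
```

When all disks have the same size, this is simply two disks or one disk; sorting only keeps the sketch correct for mixed sizes.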

But for now, understand that the required size is what you need to reserve (as raw space) in your diskgroup to ensure protection in case of disk failure. It is directly related to USABLE_FILE_MB, because the space you can allocate in your diskgroup (USABLE_FILE_MB) is (FREE_MB - REQUIRED_MIRROR_FREE_MB) / redundancy factor (3 for HIGH, 2 for NORMAL). So, when REQUIRED_MIRROR_FREE_MB increases, USABLE_FILE_MB decreases. I will explain more later.
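The relationship between these columns is plain arithmetic, so a short Python sketch makes the trade-off visible: with the same FREE_MB, doubling REQUIRED_MIRROR_FREE_MB lowers USABLE_FILE_MB. The function name and the input values are hypothetical and chosen only for illustration.

```python
# Redundancy factor from the text: 3 copies for HIGH, 2 for NORMAL.
REDUNDANCY_FACTOR = {"HIGH": 3, "NORMAL": 2}


def usable_file_mb(free_mb: int, required_mirror_free_mb: int, redundancy: str) -> float:
    """USABLE_FILE_MB = (FREE_MB - REQUIRED_MIRROR_FREE_MB) / redundancy factor."""
    return (free_mb - required_mirror_free_mb) / REDUNDANCY_FACTOR[redundancy]


# Hypothetical HIGH-redundancy diskgroup with 30,000 MB free (illustrative only).
print(usable_file_mb(30_000, 6_000, "HIGH"))   # 8000.0
print(usable_file_mb(30_000, 12_000, "HIGH"))  # 6000.0
```

Because the difference is divided by the redundancy factor, every extra MB reserved in REQUIRED_MIRROR_FREE_MB costs one third of an MB of USABLE_FILE_MB on HIGH (and half an MB on NORMAL).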